It’s not in the form of a single, clean formula, but you can thrash out the Newton-Girard identities as explained on Wikipedia.

**Edit:** I have since learned that it *is* given by a single, "clean" formula:

$$\det(A)=(-1)^n\cdot\sum_{m_1+2m_2+3m_3+\cdots+nm_n=n\\\,\,\quad\quad\quad m_\bullet\ge0}\prod_{k=1}^n\frac{(-1)^{m_k}\cdot\operatorname{Tr}(A^k)^{m_k}}{k^{m_k}\cdot m_k!}$$

More generally, the $j$th coefficient of the characteristic polynomial $\chi_A(t)=t^n+a_1t^{n-1}+\cdots+a_{n-1}t+a_n$ is given by the formula below; the determinant formula is the case $j=n$, since $a_n=\chi_A(0)=(-1)^n\det(A)$: $$a_j=\sum_{m_1+2m_2+3m_3+\cdots+jm_j=j\\\,\,\quad\quad\quad m_\bullet\ge0}\prod_{k=1}^j\frac{(-1)^{m_k}\cdot\operatorname{Tr}(A^k)^{m_k}}{k^{m_k}\cdot m_k!}$$
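As a sanity check of the general formula, here is a minimal Python sketch (not from the original post) that enumerates the tuples $(m_1,\ldots,m_j)$ with $m_1+2m_2+\cdots+jm_j=j$ and sums the products exactly with `fractions.Fraction`. The helper names `matmul`, `power_traces`, `multiplicities` and `coefficient` are hypothetical, and the $3\times3$ test matrix is an arbitrary choice whose characteristic polynomial $t^3-9t^2+24t-18$ can be checked by hand.

```python
from fractions import Fraction
from math import factorial


def matmul(X, Y):
    """Product of two square matrices given as lists of rows."""
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def power_traces(A, n):
    """Return [Tr(A), Tr(A^2), ..., Tr(A^n)]."""
    traces, P = [], A
    for _ in range(n):
        traces.append(sum(P[i][i] for i in range(len(P))))
        P = matmul(P, A)
    return traces


def multiplicities(j, k=1):
    """Yield dicts {k: m_k} (only nonzero m_k) with sum of k*m_k == j,
    using parts >= k; each partition of j is produced exactly once."""
    if j == 0:
        yield {}
        return
    for part in range(k, j + 1):
        for m in range(1, j // part + 1):
            for rest in multiplicities(j - part * m, part + 1):
                yield {part: m, **rest}


def coefficient(traces, j):
    """a_j of chi_A(t) = t^n + a_1 t^(n-1) + ... + a_n, via the partition sum."""
    total = Fraction(0)
    for ms in multiplicities(j):
        term = Fraction(1)
        for k, m in ms.items():
            term *= Fraction((-1) ** m * traces[k - 1] ** m, k ** m * factorial(m))
        total += term
    return total


A = [[2, 1, 0], [1, 3, 1], [0, 1, 4]]
n = len(A)
p = power_traces(A, n)                              # [9, 33, 135]
a = [coefficient(p, j) for j in range(1, n + 1)]    # a_1, a_2, a_3
det = (-1) ** n * a[-1]                             # det(A) = (-1)^n a_n
print([int(c) for c in a], int(det))                # [-9, 24, -18] 18
```

The number of tuples grows like the partition numbers, so this direct enumeration is only practical for small $n$.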

This follows from a formula on that same Wikipedia page that I somehow missed on the first dozen viewings... Wikipedia doesn't provide a proof (unless I remain blind), so here's a sketch:

For a fixed set of letters $\lambda_1,\lambda_2,\ldots,\lambda_i$, let $p_k=\sum_j\lambda_j^k$ denote the $k$th power sum and $e_k$ the $k$th elementary symmetric polynomial in these letters (with $e_0=1$).

Observe that $\sum_{k=1}^\infty\frac{(-1)^{k+1}}{k}p_kt^k=\sum_{j=1}^i\sum_{k=1}^\infty\frac{(-1)^{k+1}}{k}(\lambda_jt)^k=\sum_{j=1}^i\ln(1+\lambda_jt)$ for sufficiently small $t$ (any $|t|<1/\max_j|\lambda_j|$ will do). Exponentiating, and using $\exp(a+b)=\exp(a)\exp(b)$ together with $\prod_{j=1}^i(1+\lambda_jt)=\sum_{k=0}^ie_kt^k$, we see fairly clearly that: $$\exp\left(\sum_{k=1}^\infty\frac{(-1)^{k+1}}{k}p_kt^k\right)=\sum_{k=0}^\infty e_kt^k$$as an equality of analytic functions for $t$ in a neighbourhood of zero (note $e_k=0$ for $k>i$).

We may then compare coefficients of $t^j$ after writing $\exp(\sum\cdots)=\exp(p_1t-p_2t^2/2+p_3t^3/3-\cdots)=\exp(p_1t)\exp(-p_2t^2/2)\exp(p_3t^3/3)\cdots=(1+p_1t+p_1^2t^2/2!+\cdots)(1-p_2t^2/2+p_2^2t^4/(2^2\cdot2!)-\cdots)\cdots$ and expanding the product. Picking the term $\frac{1}{m_k!}\left(\frac{(-1)^{k+1}p_kt^k}{k}\right)^{m_k}$ from the $k$th factor for each $k$ produces a multiple of $t^j$ exactly when $m_1+2m_2+\cdots+jm_j=j$, so: $$e_j=\sum_{m_1+2m_2+3m_3+\cdots+jm_j=j\\\,\,\quad\quad\quad m_\bullet\ge0}\prod_{k=1}^j\frac{(-1)^{(k+1)m_k}\cdot p_k^{m_k}}{k^{m_k}\cdot m_k!}$$Since $\sum_k(k+1)m_k=j+\sum_km_k$, multiplying through by $(-1)^j$ gives: $$(-1)^je_j=\sum_{m_1+2m_2+3m_3+\cdots+jm_j=j\\\,\,\quad\quad\quad m_\bullet\ge0}\prod_{k=1}^j\frac{(-1)^{m_k}\cdot p_k^{m_k}}{k^{m_k}\cdot m_k!}$$as desired.

You can justify the slightly iffy "$\cdots$" and the infinite product expansion by noting that to extract the coefficient of $t^j$ we need only look up to $O(t^j)$: take a finite expansion plus an error term of higher order. That higher-order term, itself some analytic function, provably does not contribute to the desired coefficient, so we only ever need to work with finite expansions.
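The generating-function identity can also be checked with exact truncated power series, which is the "finite expansion" argument in action: only coefficients up to $t^N$ are ever kept. This sketch assumes integer letters (the choice $2,-1,3$ is arbitrary), exponentiates via the standard recurrence $ng_n=\sum_{k=1}^nkf_kg_{n-k}$ (which comes from $g'=f'g$ for $g=\exp f$), and compares the result with the expansion of $\prod_j(1+\lambda_jt)$. The function names are hypothetical.

```python
from fractions import Fraction


def series_exp(f, N):
    """exp of a power series f (with f[0] == 0), truncated to t^0..t^N.

    Uses g' = f' g, i.e. n*g_n = sum_{k=1}^{n} k*f_k*g_{n-k}.
    """
    g = [Fraction(0)] * (N + 1)
    g[0] = Fraction(1)
    for n in range(1, N + 1):
        g[n] = sum(k * f[k] * g[n - k] for k in range(1, n + 1)) / n
    return g


def elementary_from_product(lams, N):
    """Coefficients e_0..e_N of prod_j (1 + lambda_j t), truncated at t^N."""
    e = [Fraction(0)] * (N + 1)
    e[0] = Fraction(1)
    for lam in lams:
        for k in range(N, 0, -1):   # multiply by (1 + lam*t), highest degree first
            e[k] += lam * e[k - 1]
    return e


lams = [2, -1, 3]   # arbitrary letters, i = 3
N = 5               # compare coefficients of t^0..t^5
p = [sum(Fraction(l) ** k for l in lams) for k in range(N + 1)]   # p[0] unused
f = [Fraction(0)] + [Fraction((-1) ** (k + 1), k) * p[k] for k in range(1, N + 1)]
g = series_exp(f, N)
e = elementary_from_product(lams, N)
print(g == e)   # True; g[4] and g[5] vanish because e_k = 0 for k > 3
```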

*The original post continues:*

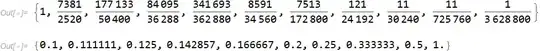

Knowledge of the first $d$ power sums of $d$ numbers, together with these identities, allows you to deduce the values of all the elementary symmetric polynomials in them. For example $(d=5)$, you could infer the quantity $e_4=\lambda_1\lambda_2\lambda_3(\lambda_4+\lambda_5)+\lambda_1\lambda_4\lambda_5(\lambda_2+\lambda_3)+\lambda_2\lambda_3\lambda_4\lambda_5$.
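To make the $d=5$ example concrete, this sketch recovers every $e_k$ from $p_1,\ldots,p_5$ using the recursive form of Newton's identities, $ke_k=\sum_{i=1}^k(-1)^{i-1}e_{k-i}p_i$ (the "thrash it out" route rather than the closed formula), and compares $e_4$ with the brute-force sum of all products of four of the five letters. The letters $1,\ldots,5$ and the name `elementary_from_power_sums` are illustrative assumptions.

```python
from fractions import Fraction
from itertools import combinations
from math import prod


def elementary_from_power_sums(p):
    """Given p = [p_1, ..., p_d], return [e_0, e_1, ..., e_d] using
    k*e_k = sum_{i=1}^{k} (-1)^(i-1) * e_(k-i) * p_i."""
    d = len(p)
    e = [Fraction(1)]
    for k in range(1, d + 1):
        s = sum((-1) ** (i - 1) * e[k - i] * p[i - 1] for i in range(1, k + 1))
        e.append(s / k)
    return e


lams = [1, 2, 3, 4, 5]   # hypothetical letters; only their power sums are fed in
d = len(lams)
p = [sum(l ** k for l in lams) for k in range(1, d + 1)]
e = elementary_from_power_sums(p)

# Brute force: e_4 is the sum of all products of four of the five letters.
e4_direct = sum(prod(c) for c in combinations(lams, 4))
print(e[4], e4_direct)   # 274 274
```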

Ring a bell? These are exactly the expressions appearing in Vieta’s formulas. Since the eigenvalues of $A^k$ are $\lambda_1^k,\ldots,\lambda_d^k$ (with multiplicity), $\operatorname{Tr}(A^k)=p_k$; so knowing the traces of $A,A^2,\ldots,A^d$ lets you construct the coefficients of the characteristic polynomial. If you can find the roots of that polynomial to satisfactory accuracy, you get your eigenvalues back.
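The full pipeline, from traces to characteristic polynomial to eigenvalues, might look like the following Python sketch. The root finder is the Durand-Kerner (Weierstrass) iteration, swapped in here purely for illustration and not something the post prescribes; all function names are hypothetical, and the lower-triangular test matrix is chosen so its eigenvalues $2,3,-1$ can be read off the diagonal.

```python
def matmul(X, Y):
    """Product of two square matrices given as lists of rows."""
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def power_traces(A, n):
    """Return [Tr(A), Tr(A^2), ..., Tr(A^n)]."""
    traces, P = [], A
    for _ in range(n):
        traces.append(sum(P[i][i] for i in range(len(P))))
        P = matmul(P, A)
    return traces


def charpoly_from_traces(p):
    """Coefficients [1, a_1, ..., a_n] of chi_A with a_j = (-1)^j e_j, where
    k*e_k = sum_{i=1}^{k} (-1)^(i-1) e_(k-i) p_i (Newton's identities)."""
    e = [1.0]
    for k in range(1, len(p) + 1):
        e.append(sum((-1) ** (i - 1) * e[k - i] * p[i - 1]
                     for i in range(1, k + 1)) / k)
    return [(-1) ** j * e[j] for j in range(len(e))]


def durand_kerner(coeffs, iters=200):
    """Approximate all complex roots of the monic polynomial whose
    coefficients (highest degree first) are coeffs."""
    n = len(coeffs) - 1

    def poly(z):
        acc = 0j
        for c in coeffs:          # Horner's rule
            acc = acc * z + c
        return acc

    roots = [(0.4 + 0.9j) ** k for k in range(n)]   # customary starting points
    for _ in range(iters):
        new = []
        for i, z in enumerate(roots):
            denom = 1 + 0j
            for j, w in enumerate(roots):
                if j != i:
                    denom *= z - w
            new.append(z - poly(z) / denom)
        roots = new
    return roots


A = [[2, 0, 0], [1, 3, 0], [4, 5, -1]]
p = power_traces(A, 3)             # [4, 14, 34]
coeffs = charpoly_from_traces(p)   # t^3 - 4t^2 + t + 6
eigs = sorted(z.real for z in durand_kerner(coeffs))
print(eigs)                        # approximately [-1.0, 2.0, 3.0]
```

Durand-Kerner converges quickly when the roots are distinct; repeated eigenvalues slow it down considerably, which is one concrete face of the "satisfactory accuracy" caveat.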

A simple example with $d=2$: if you know $\operatorname{Tr}(A)=\lambda_1+\lambda_2=5$ and $\operatorname{Tr}(A^2)=\lambda_1^2+\lambda_2^2=19$, then you can deduce $\lambda_1\lambda_2=\frac{(\lambda_1+\lambda_2)^2-(\lambda_1^2+\lambda_2^2)}{2}=\frac{25-19}{2}=3$, and thus that the eigenvalues are the roots of: $$x^2-5x+3=0$$from which you get their exact values, $\frac{5\pm\sqrt{13}}{2}$.
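The $d=2$ arithmetic can be replayed in a few lines of Python. This sketch uses `cmath.sqrt` so that it would also cope with a negative discriminant, and it checks that the recovered eigenvalues reproduce both traces.

```python
import cmath

p1, p2 = 5, 19                 # Tr(A) and Tr(A^2) from the example
e1 = p1                        # lambda_1 + lambda_2
e2 = (p1 ** 2 - p2) / 2        # lambda_1 * lambda_2 = (p1^2 - p2) / 2 = 3

# The eigenvalues are the roots of x^2 - e1*x + e2 = 0.
disc = cmath.sqrt(e1 ** 2 - 4 * e2)
lam1, lam2 = (e1 + disc) / 2, (e1 - disc) / 2
print(e2, lam1, lam2)
```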

I learned this in the context of basic character theory: it showed me that studying the traces of matrices actually gives you a surprising amount of information, more than is gained from studying the determinant. Indeed, the Newton-Girard identities allow the determinant of an $n\times n$ matrix $M$ to be determined from the traces of $M,M^2,\ldots,M^n$. But knowing the determinant(s) wouldn’t tell you the trace: what if one of the eigenvalues were zero? Then the determinant of every power of $M$ would be zero, while the trace could be anything as the other eigenvalues vary.
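A small Python sketch of this asymmetry, assuming diagonal matrices so that $\operatorname{Tr}(M^k)$ is simply the $k$th power sum of the diagonal entries (the helper names are hypothetical): the determinant is recovered from the traces via the recursive form of Newton's identities, while two matrices with a zero eigenvalue share determinant $0$ but have different traces.

```python
from fractions import Fraction


def det_from_traces(p):
    """det(M) = e_n, with e_k from k*e_k = sum_{i=1}^{k} (-1)^(i-1) e_(k-i) p_i,
    where p = [Tr(M), Tr(M^2), ..., Tr(M^n)]."""
    e = [Fraction(1)]
    for k in range(1, len(p) + 1):
        e.append(sum((-1) ** (i - 1) * e[k - i] * p[i - 1]
                     for i in range(1, k + 1)) / k)
    return e[-1]


def diag_traces(diag):
    """[Tr(D), Tr(D^2), ..., Tr(D^n)] for the diagonal matrix D = diag(diag)."""
    return [sum(x ** k for x in diag) for k in range(1, len(diag) + 1)]


# Two matrices with a zero eigenvalue: same determinant, different traces.
for diag in ([0, 1, 2], [0, 5, -7]):
    p = diag_traces(diag)
    print(diag, "trace =", p[0], "det from traces =", det_from_traces(p))
```

Both lines report a determinant of 0, with traces 3 and -2 respectively; for an invertible example such as `diag_traces([2, 3, 4])` the same function returns 24.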