$

\newcommand\Ext{{\bigwedge}}

\newcommand\R{\mathbb R}

\newcommand\C{\mathbb C}

\newcommand\conj[1]{\overline{#1}}

\newcommand\form[1]{\langle#1\rangle}

\DeclareMathOperator\linspan{span}

\renewcommand\Re{\mathop{\mathrm{Re}}}

\newcommand\tensor\otimes

\newcommand\gtensor{\mathbin{\hat\tensor}}

$

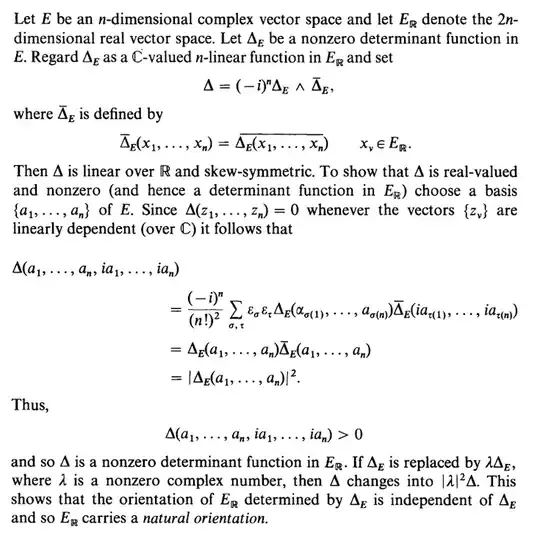

I think that $\Delta = (-i)^n\Delta_E\wedge\bar\Delta_E$ is a typo and should be

$$

\Delta

= {\color{red}{\frac1{(-i)^n}}}\Delta_E\wedge\bar\Delta_E

= i^n\Delta_E\wedge\bar\Delta_E.

$$

This is somewhat natural since (as Greub is trying to convince us)

$$

\Delta_E(ia_1, \dotsc, ia_n)\wedge\bar\Delta_E(ia_1, \dotsc, ia_n)

= i^n\Delta_E(a_1,\dotsc,a_n)\wedge\bar\Delta_E(ia_1,\dotsc,ia_n)

$$

is non-zero and real. It could also be the case that Greub meant to write

$$

\Delta(ia_1, ia_2, \dotsc, ia_n, a_1, a_2, \dotsc, a_n)

$$

in which case the definition of $\Delta$ as written by Greub is the one required.

In either case, the argument proceeds by suggesting to the reader that $\sum_{S_{2n}} = \sum_{S_n\times S_n}$ when evaluating this particular wedge product. This doesn't seem outlandish to me, but I also don't immediately see how that works.

What I will do is give a more abstract approach, which completely avoids the tedious combinatorics. It would be a good exercise to pinpoint how this argument lines up with Greub's.

Let us consider the exterior algebra.

Choose non-zero $J \in \Ext^n E$. This induces a linear isomorphism $\cdot/J : \Ext^n E \cong \C$ via

$$

J' = (J'/J)J,\quad J' \in \Ext^n E.

$$

The notation $J'/J$ is merely meant to be suggestive. Such an isomorphism is a determinant function, and choosing one is equivalent to choosing $J$: given the isomorphism, $J$ is recovered as the unique $J'$ such that $J'/J = 1$. We will show that (given a notion of conjugation) $J$ naturally gives rise to an $I \in \Ext^{2n}E_\R$ by constructing a natural determinant function $\Delta : \Ext^{2n}E_\R \to \R$, and we will also show that every such $I$ has the same orientation.
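
For a concrete reading of $J'/J$, here is a small sketch in coordinates. The basis $e_1, \dotsc, e_n$ and the matrix $A$ are introduced only for illustration and play no role in the argument. Suppose $e_1, \dotsc, e_n$ is a $\C$-basis of $E$, take $J = e_1\wedge\dotsb\wedge e_n$, and let $v_j = \sum_k A_{kj}e_k$. Then

$$
v_1\wedge\dotsb\wedge v_n = \det(A)\,e_1\wedge\dotsb\wedge e_n,
\quad\text{so}\quad
(v_1\wedge\dotsb\wedge v_n)/J = \det A.
$$

In this sense $\cdot/J$ is the usual determinant computed relative to the basis that $J$ picks out.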

A conjugation on $E$ is not inherent to $E$ being a complex vector space; it is an additional structure. Once we have a conjugation map, there is a special subset of $E$

$$

\Re E := \{v + \conj v \;:\; v \in E\}

$$

whose elements we call the real elements of $E$. This is not a complex subspace of $E$ (it is not closed under multiplication by $i$), but it clearly is a real subspace of $E_\R$. By $\Ext\Re(E)$ we mean the subalgebra of $\Ext E_\R$ generated by $\Re(E)$. It is evident that

$$

E_\R = \Re E \oplus i\Re E,

$$

and it follows that $\Ext E_\R = \Ext\Re(E)\gtensor\Ext i\Re(E)$ where $\gtensor$ is the graded tensor product. In particular

$$

\Ext^{2n} E_\R = \Ext^n\Re E\gtensor\Ext^n i\Re E.

$$
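
As a sanity check on this decomposition, one can count dimensions, assuming (as throughout) that $\dim_\C E = n$ so that $\dim_\R\Re E = \dim_\R i\Re E = n$. The degree-$k$ part of the graded tensor product is $\bigoplus_{p+q=k}\Ext^p\Re E\tensor\Ext^q i\Re E$, and Vandermonde's identity gives

$$
\dim_\R\Ext^k E_\R = \binom{2n}{k} = \sum_{p+q=k}\binom np\binom nq.
$$

For $k = 2n$ the only admissible pair is $p = q = n$, so the sum has the single term $\binom nn\binom nn = 1$, which matches $\dim_\R\Ext^{2n}E_\R = 1$.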

The graded aspect of $\gtensor$ isn't important here; when considering just linear maps, $\gtensor$ is just like the usual tensor product. By this fact, we may construct a linear map $\Ext^{2n} E_\R \to \R$ by specifying its values on pairs $(H, H')$ for $H \in \Ext^n\Re(E)$ and $H' \in \Ext^ni\Re(E)$ and ensuring bilinearity.

By the universal property of $\Ext E_\R$, the $\R$-linear isomorphism $\phi : E_\R \to E \subseteq \Ext E$ extends uniquely to an $\R$-algebra homomorphism $\Ext E_\R \to \Ext E$, which we also denote by $\phi$. Our candidate for $\Delta$ is then

$$

H\tensor H' \mapsto \conj{\frac{\phi(H)}J}\frac{\phi(H')}J.

$$

Here, the conjugation is meant to apply to the entire expression $\phi(H)/J$. Consider though the particular case where $H' = m_i(H)$ for $m_i : \Ext E_\R \to \Ext E_\R$ the outermorphism which multiplies vectors by $i$. Then $\phi(H') = i^n\phi(H)$ and

$$

\conj{\frac{\phi(H)}J}\frac{\phi(H')}J = i^n\left|\frac{\phi(H)}J\right|^2.

$$
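
To spell out this step, take a decomposable $H = h_1\wedge\dotsb\wedge h_n$ with $h_j \in \Re E$ and write $z = \phi(H)/J \in \C$ (the names $h_j$ and $z$ are shorthand introduced here). Since the wedge product in $\Ext E$ is $\C$-multilinear,

$$
\phi(m_i(H)) = (ih_1)\wedge\dotsb\wedge(ih_n) = i^n\,h_1\wedge\dotsb\wedge h_n = i^n\phi(H),
\qquad
\conj z\cdot i^n z = i^n|z|^2.
$$

Because $(-i)^n i^n = \bigl((-i)i\bigr)^n = 1$, removing the factor $i^n$ amounts to multiplying by $(-i)^n$.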

So we divide by $i^n$. Define $\Delta : \Ext^{2n}E_\R \to \R$ by

$$

\Delta(H\tensor H') = (-i)^n\conj{\frac{\phi(H)}J}\frac{\phi(H')}J

$$

This still gives us a real number for any $H'$: both $\Ext^n\Re(E)$ and $\Ext^ni\Re(E) = m_i\left(\Ext^n\Re(E)\right)$ are one-dimensional over $\R$, so for non-zero $H$ we have $H' = xm_i(H)$ for some $x \in \R$ (and for $H = 0$ the value is simply $0$). Observe now that for any non-zero $a \in \C$

$$

J'/(aJ) = \frac{J'/J}a,

$$

hence if $\Delta'$ is the $E_\R$ determinant function associated to $aJ$

$$

\Delta'(H\tensor H')

= (-i)^n\conj{\frac{\phi(H)}{aJ}}\frac{\phi(H')}{aJ}

= \frac{(-i)^n}{|a|^2}\conj{\frac{\phi(H)}J}\frac{\phi(H')}J

= \frac1{|a|^2}\Delta(H\tensor H').

$$

Since $1/|a|^2$ is positive, $\Delta'$ has the same orientation as $\Delta$. Thus, as promised, every $J$ induces the same orientation on $E_\R$.
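
A minimal worked case may help, under the assumption that $n = 1$, $E = \C$ with the standard conjugation, and $J = 1$ (these choices are mine, made only for illustration). Then $\Re E = \R$, $i\Re E = i\R$, $\phi$ is the identity, and for $H = s$ and $H' = it$ with $s, t \in \R$

$$
\Delta(s\tensor it) = (-i)\,\conj{s}\,(it) = st,
\qquad
\Delta'(s\tensor it) = (-i)\,\conj{\frac sa}\,\frac{it}a = \frac{st}{|a|^2}.
$$

In particular $\Delta(1\tensor i) = 1 > 0$, so under the identification of $H\tensor H'$ with $H\wedge H'$ the ordered real basis $(1, i)$ of $\C$ is positive, and rescaling $J$ by $a$ only rescales this value by the positive number $1/|a|^2$.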

We could also consider the alternative $\Delta$

$$

\Delta(H\tensor H') = i^n\frac{\phi(H)}J\conj{\frac{\phi(H')}J}

$$

but this is in fact equivalent to the original since there is some $x \in \R$ such that $H' = xm_i(H)$ and

$$

i^n\frac{\phi(H)}J\conj{\frac{\phi(H')}J}

= \frac{\phi(m_i(H))}Jx(-i)^n\conj{\frac{\phi(H)}J}

= (-i)^n\frac{\phi(H')}J\conj{\frac{\phi(H)}J}.

$$
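
Both forms can also be reduced to one closed expression, which makes the agreement visible at a glance. With $H' = xm_i(H)$, so that $\phi(H') = xi^n\phi(H)$, and writing $z = \phi(H)/J$ as shorthand,

$$
(-i)^n\,\conj z\cdot xi^n z = x|z|^2,
\qquad
i^n z\cdot\conj{xi^n z} = i^n z\cdot x(-i)^n\conj z = x|z|^2.
$$

So either definition gives $\Delta(H\tensor xm_i(H)) = x\left|\phi(H)/J\right|^2$, which is manifestly real and has the sign of $x$ whenever $H \ne 0$.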

It is interesting to note that the same argument goes through if we replace every instance of $i$ with any $\xi \in \C\setminus\R$. Whether every choice of $\xi$ induces the same $\Delta$ as $i$ does is a question I leave alone for now.