If we have $P(a)=0.6, P(b)=0.7$, can we say they are not mutually exclusive?

Yes. Since $P(a\cup b)=P(a)+P(b)-P(a\cap b)$ and $0\leq P(a\cup b)\leq1$, in your case $\bf P(a\cap b)\geq0.3$, so in particular $P(a\cap b)\neq0$.
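The bound can be checked numerically. This is a minimal sketch that assumes we know only the two individual probabilities. The helper name `min_intersection` is made up for illustration. It uses Python's `fractions.Fraction` so that $0.6+0.7-1$ comes out as exactly $3/10$ rather than a floating-point approximation.

```python
from fractions import Fraction

def min_intersection(p_a: Fraction, p_b: Fraction) -> Fraction:
    """Smallest value P(a ∩ b) can take, given only P(a) and P(b).

    (Hypothetical helper.) From P(a ∪ b) = P(a) + P(b) - P(a ∩ b) <= 1
    we get P(a ∩ b) >= P(a) + P(b) - 1, and a probability is never negative.
    """
    return max(Fraction(0), p_a + p_b - 1)

p_a = Fraction(6, 10)
p_b = Fraction(7, 10)
bound = min_intersection(p_a, p_b)
print(bound)      # 3/10
print(bound > 0)  # True, so a and b cannot be mutually exclusive
```

If $P(a)+P(b)\le 1$, the bound drops to $0$. In that case the probabilities alone say nothing about mutual exclusivity.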

We usually check for mutual exclusivity by asking whether the $2$ events have anything in common. But if we already know that their probabilities add up to more than $1$, then we know right away that they can't be mutually exclusive.

So yes, you are right when you say "cause $\bf p(a)+p(b)>1$".

If events $a$ and $b$ are totally unrelated, can we still add them?

Nope, not until you know that the events are mutually exclusive. If events $a$ and $b$ are unrelated, we say that they are independent. That does not mean $\bf P(a\cap b) =0$ (which holds for mutually exclusive events); instead, it means $P(a\cap b)=P(a)\cdot P(b)$.
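To see the two conditions side by side in numbers, here is a small sketch. The helper names `is_independent` and `is_mutually_exclusive` are hypothetical, and the joint probability is simply assumed to be $P(a)\cdot P(b)$, i.e. independence is assumed up front.

```python
from fractions import Fraction

def is_mutually_exclusive(p_ab: Fraction) -> bool:
    # Mutually exclusive: the events can never happen together.
    return p_ab == 0

def is_independent(p_a: Fraction, p_b: Fraction, p_ab: Fraction) -> bool:
    # Independent: the joint probability factorises into the marginals.
    return p_ab == p_a * p_b

p_a, p_b = Fraction(6, 10), Fraction(7, 10)
p_ab = p_a * p_b  # assumption: a and b are independent

print(p_ab)                            # 21/50, i.e. 0.42
print(is_independent(p_a, p_b, p_ab))  # True
print(is_mutually_exclusive(p_ab))     # False
```

Since $P(a)$ and $P(b)$ are both positive here, their product is positive too. Two such events can therefore never be both independent and mutually exclusive.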

For example, $P(b)$ is the probability that we go to jail if we rob a bank, and $P(a)$ is the probability that Jack eats an apple today. Can we still say they are not mutually exclusive?

Let me instead take a better-known example to make things clearer.

event $\bf a$: A passes the test and, as you have said, $P(a)=0.6$

event $\bf b$: B passes the test and, as you have said, $P(b)=0.7$

For all practical purposes, this is the same as your example: A and B are different students who take different exams and aren't related in any way.

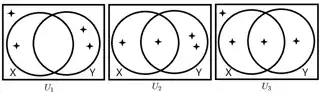

So here $a$ and $b$ are not mutually exclusive. This follows from the fact that their probabilities sum to more than $1$, or, as we'll see shortly, from the fact that $P(a\cap b)=0.42\ (\neq 0)$. But there's yet another way to establish this.

Here's another definition of mutually exclusive events to help you understand them:

If there is a set of events such that if any one of them occurs, none of the others can occur, the events are said to be mutually exclusive.

Example: in a coin toss, the event of getting heads and the event of getting tails are mutually exclusive, since when one occurs the other can't.
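The coin-toss example can be written out with Python sets. Events are subsets of the sample space, and "mutually exclusive" means the subsets share no outcome. This sketch assumes a fair coin, so each outcome is equally likely. The helper `prob` is illustrative only.

```python
from fractions import Fraction

# Sample space for a single coin toss.
omega = {"H", "T"}
heads = {"H"}  # event: getting heads
tails = {"T"}  # event: getting tails

def prob(event: set) -> Fraction:
    # Assumes a fair coin: every outcome in omega is equally likely.
    return Fraction(len(event), len(omega))

# Mutually exclusive events have no outcome in common.
print(heads & tails)            # set()
print(heads.isdisjoint(tails))  # True
print(prob(heads & tails))      # 0
```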

So we now have another perspective on why (even in your example) events $a$ and $b$ are not mutually exclusive: the occurrence of one does not rule out the occurrence of the other.

Now for the next point:

In our test-passing example, we know that events $a$ and $b$ are independent from common sense (not from a mathematical argument), so $P(a\cap b)=P(a)\cdot P(b)=0.42$.
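The value $0.42$ can be reproduced by writing out the whole sample space of the two-student example. This is a minimal sketch. As above, it assumes the two results are independent, so each of the four outcomes gets the product of the individual probabilities. The variable names are only illustrative.

```python
from fractions import Fraction
from itertools import product

p_a = Fraction(6, 10)  # P(A passes)
p_b = Fraction(7, 10)  # P(B passes)

# Joint distribution under the independence assumption: each outcome
# (A passes?, B passes?) gets the product of the individual probabilities.
joint = {}
for a_pass, b_pass in product([True, False], repeat=2):
    pa = p_a if a_pass else 1 - p_a
    pb = p_b if b_pass else 1 - p_b
    joint[(a_pass, b_pass)] = pa * pb

p_both = joint[(True, True)]                                 # P(a ∩ b)
p_either = sum(p for (x, y), p in joint.items() if x or y)   # P(a ∪ b)

print(p_both)                          # 21/50
print(p_either)                        # 22/25
print(p_either == p_a + p_b - p_both)  # True
```

Here $P(a\cup b)=0.88\le 1$, consistent with the addition rule, while $P(a\cap b)=0.42$ is clearly nonzero.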

Note: by definition, when $2$ events are independent, $P(a\cap b)=P(a)\cdot P(b)$.

In your example, too, it seems intuitive enough that the events are independent, so we'll assume they are.

But I don't understand: the jail probability $P(b)$ depends on the probability of robbing. Is it right to add $P(b)$ to $P(a)$ to conclude that they are not mutually exclusive?

Events $a$ and $b$ do not depend on each other, and your intuition is correct: they are indeed independent (and not mutually exclusive).

Or is the example I made totally wrong?

When events aren't deterministic, we can always turn to probability to describe them.

For the reasons above, I hope you are no longer confusing independent and mutually exclusive events.

$$\begin{align} P(A\cup B) &= P(A) + P(B) - P(A\cap B)\\ &= 1.3-P(A\cap B) \end{align}$$

The LHS $P(A\cup B) \le 1$, so $P(A\cap B)> 0$, i.e. events $A$ and $B$ are not mutually exclusive.

– peterwhy Aug 27 '22 at 00:17