In Chapter 2 of *Numerical Optimization* by Nocedal and Wright, the authors state the following equation:

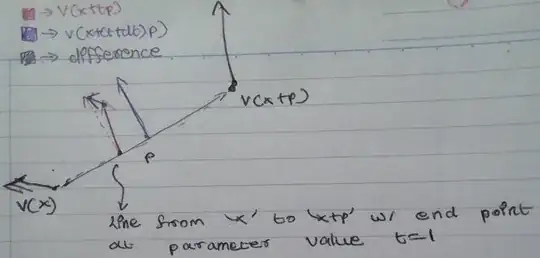

$$ \nabla f(\overrightarrow x + \overrightarrow p) = \nabla f(\overrightarrow x) + \int_0^1 \nabla^2 f(\overrightarrow x + t\overrightarrow p)\,\overrightarrow p\,dt $$

where $f:\mathbb{R}^n \rightarrow \mathbb{R}$ is twice continuously differentiable, and $\overrightarrow p \in \mathbb{R}^n$.

My understanding is that the integral is computed element-wise: multiply the Hessian of $f$ at $\overrightarrow x + t\overrightarrow p$ on the right by $\overrightarrow p$ to get an $n \times 1$ column vector, then integrate each entry of that vector over $t \in [0, 1]$. Is this correct?
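Written out in components, my reading would be the following (the indices $i$, $j$ and the components $x_i$, $p_j$ are my own notation, not the book's):

$$ \left[\int_0^1 \nabla^2 f(\overrightarrow x + t\overrightarrow p)\,\overrightarrow p\,dt\right]_i = \int_0^1 \sum_{j=1}^n \frac{\partial^2 f}{\partial x_i \partial x_j}(\overrightarrow x + t\overrightarrow p)\,p_j\,dt, \qquad i = 1, \dots, n $$

so that the right-hand side is $n$ ordinary scalar integrals in $t$, one for each entry of the vector.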

Moreover, how is this derived as a consequence of Taylor's Theorem? I have not been able to make that jump, and I am struggling with the notation for integrating a vector-valued function in this manner.