Lately, I keep coming across terms containing "Lipschitz" in the context of statistical models and machine learning, such as "ρ-Lipschitz" (rho-Lipschitz), "Lipschitz convexity", "Lipschitz loss", "Lipschitz continuity", "Lipschitz condition", etc.

For example, the (famous) Gradient Descent algorithm, which is used heavily in machine learning to optimize the "loss functions" of Neural Networks, is said to reach a solution arbitrarily close to the true solution in the limit of infinitely many iterations, provided that the "loss function" is "Lipschitz Continuous". (Strictly speaking, the standard convergence results assume that the *gradient* of the loss is Lipschitz continuous, and convergence to the true minimum additionally requires convexity.)
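To make the role of the Lipschitz assumption concrete, here is a minimal sketch of gradient descent on a hypothetical convex quadratic f(x) = ½ xᵀAx − bᵀx (the matrix `A` and vector `b` are made up for illustration). Its gradient `A x - b` is Lipschitz with constant L equal to the largest eigenvalue of `A`, and the step size 1/L is the usual choice in textbook convergence results.

```python
import numpy as np

# Hypothetical convex quadratic f(x) = 0.5 * x^T A x - b^T x (illustrative data only).
A = np.array([[3.0, 1.0],
              [1.0, 2.0]])   # symmetric positive definite, so f is convex
b = np.array([1.0, -1.0])

def f(x):
    return 0.5 * x @ A @ x - b @ x

def grad_f(x):
    return A @ x - b

# The gradient A x - b is Lipschitz with constant L = largest eigenvalue of A.
L = np.linalg.eigvalsh(A).max()
x_star = np.linalg.solve(A, b)   # exact minimizer, used only for comparison

x = np.zeros(2)
for _ in range(200):
    x = x - (1.0 / L) * grad_f(x)   # gradient step with step size 1/L

gap = f(x) - f(x_star)
print(f"L = {L:.4f}, f(x) - f(x*) = {gap:.2e}")
```

For a quadratic like this one, step sizes larger than 2/L generally make the iterates diverge, which is one way to see why the Lipschitz constant of the gradient matters when choosing a step size.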

Although the mathematical definitions of the Lipschitz Condition are quite detailed and complicated, I think I was able to find a definition simple enough to understand for the purposes of this question:

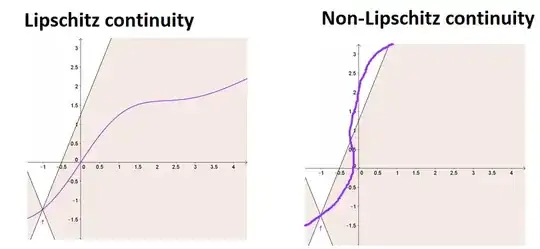
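For reference, the standard textbook form of the definition (which the image presumably states in some form) is written out below. "ρ-Lipschitz" simply names the constant ρ, and the last line is the gradient version that appears in gradient descent convergence results.

```latex
\begin{aligned}
&\text{$f$ is $K$-Lipschitz on } D: && |f(x) - f(y)| \le K\,|x - y| \quad \forall\, x, y \in D,\\
&\text{$f$ differentiable on an interval:} && f \text{ is $K$-Lipschitz} \iff |f'(x)| \le K \ \ \forall\, x,\\
&\text{$L$-Lipschitz gradient:} && \|\nabla f(x) - \nabla f(y)\| \le L\,\|x - y\| \quad \forall\, x, y.
\end{aligned}
```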

I am not sure whether this is correct, but from an applied perspective, I have heard that the Lipschitz Condition is a desirable property for mathematical functions (and for the gradients of these functions) because it guarantees that "small changes in the input of the function cannot result in arbitrarily large changes in its output".

My intuition is that if a function does not satisfy the Lipschitz Condition, so that small changes in its inputs can produce arbitrarily large changes in its output, then the function can display very volatile, erratic and even chaotic behavior, making it fundamentally difficult to predict and unstable to work with.
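One quick numerical way to see the difference is to compute the steepest slope |f(x) − f(y)| / |x − y| between neighbouring grid points. This is a minimal sketch using three standard functions chosen for illustration (they are not from the post): `sin` is 1-Lipschitz, `sqrt` is continuous on [0, 1] but not Lipschitz there, and x² is Lipschitz on any bounded interval but not on the whole real line. Note that a grid check like this can only give a lower bound on a Lipschitz constant.

```python
import numpy as np

def max_grid_slope(f, xs):
    """Largest |f(x[i+1]) - f(x[i])| / |x[i+1] - x[i]| over neighbouring grid points.

    Only a lower bound on the Lipschitz constant of f on [xs.min(), xs.max()],
    because it checks finitely many pairs of points.
    """
    ys = f(xs)
    return np.max(np.abs(np.diff(ys)) / np.abs(np.diff(xs)))

# sin is 1-Lipschitz (|cos x| <= 1), so refining the grid never pushes the estimate above 1.
sin_est = {n: max_grid_slope(np.sin, np.linspace(-10, 10, n)) for n in (101, 10_001)}

# sqrt is continuous on [0, 1] but its slope near 0 is unbounded:
# the first grid interval alone gives sqrt(h) / h = 1 / sqrt(h), which grows as h shrinks.
sqrt_est = {n: max_grid_slope(np.sqrt, np.linspace(0, 1, n)) for n in (101, 10_001)}

# x**2 is 2R-Lipschitz on [0, R] but not Lipschitz on the whole real line.
square_est = {R: max_grid_slope(np.square, np.linspace(0, R, 1001)) for R in (1, 100)}

print("sin: ", sin_est)      # stays below 1
print("sqrt:", sqrt_est)     # about 10 and 100
print("x**2:", square_est)   # about 2 and 200
```

The `sqrt` case shows that failing the Lipschitz Condition does not require infinite output values: the function stays between 0 and 1, yet its slope near 0 has no finite bound.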

My Question: When it comes to complicated mathematical functions (e.g. functions that model natural phenomena in the real world), we can use the definition of "convexity" (e.g. provided here) to determine whether a function is convex or non-convex (i.e. if the function does not satisfy the convexity conditions, it must be non-convex).
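For comparison, the usual definition of convexity and its second-derivative test are written out below. For a differentiable function on an interval, the first-derivative characterization of the Lipschitz Condition (|f′(x)| ≤ K everywhere) plays a similar role.

```latex
\begin{aligned}
&\text{$f$ convex on a convex set } D: && f(\lambda x + (1-\lambda) y) \le \lambda f(x) + (1-\lambda) f(y) \quad \forall\, x, y \in D,\ \lambda \in [0, 1],\\
&\text{$f$ twice differentiable on an interval:} && f \text{ convex} \iff f''(x) \ge 0 \ \ \forall\, x.
\end{aligned}
```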

Can the same be said about determining whether real-world functions obey the Lipschitz Condition?

For example, if we look at the specific "loss function" used in a specific neural network (e.g. a specified number of layers, neurons, types of activation functions, etc.), it might be possible to prove whether this "loss function" obeys the Lipschitz Condition.
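As a small illustration of the kind of bound that can be proved for a concrete network, here is a minimal sketch for a hypothetical 2-layer ReLU network with random weights (the sizes and weights are made up). Because ReLU is 1-Lipschitz, the product of the spectral norms of the weight matrices is an upper bound on the network's Lipschitz constant with respect to its *input*; random input pairs give a lower bound. This is related to, but not the same as, the Lipschitz property of the loss with respect to the *weights* that optimization analyses rely on.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical network f(x) = W2 @ relu(W1 @ x + b1) + b2 with random weights.
W1 = rng.normal(size=(16, 4))
b1 = rng.normal(size=16)
W2 = rng.normal(size=(1, 16))
b2 = rng.normal(size=1)

def relu(z):
    return np.maximum(z, 0.0)

def net(x):
    return W2 @ relu(W1 @ x + b1) + b2

# ReLU is 1-Lipschitz and the biases cancel in f(x) - f(y), so
# ||f(x) - f(y)|| <= ||W2||_2 * ||W1||_2 * ||x - y||  (spectral norms).
upper = np.linalg.norm(W2, 2) * np.linalg.norm(W1, 2)

# Empirical lower bound: the largest ratio observed over random pairs of inputs.
lower = 0.0
for _ in range(10_000):
    x, y = rng.normal(size=4), rng.normal(size=4)
    ratio = np.linalg.norm(net(x) - net(y)) / np.linalg.norm(x - y)
    lower = max(lower, ratio)

print(f"empirical lower bound {lower:.3f} <= true constant <= {upper:.3f}")
```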

But what about the original function itself, the one we are trying to approximate with the neural network?

At this point, are we simply assuming that natural phenomena in the real world (e.g. ocean tides, economic patterns in the market, animal migration trajectories, etc.) obey the Lipschitz Condition, either in their sui generis form or for the sake of making the machine learning algorithms work?
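To illustrate what finite observations can and cannot tell us here, this is a minimal sketch on *synthetic* data (a smooth tide-like sinusoid, made up for illustration, not real measurements). The largest slope between any two samples is a lower bound on the Lipschitz constant of any function passing through them; the samples alone cannot provide an upper bound.

```python
import numpy as np

def observed_lipschitz_lower_bound(t, y):
    """Largest |y_i - y_j| / |t_i - t_j| over all pairs of distinct sample times.

    Any function through these samples has a Lipschitz constant at least this large.
    """
    dt = np.abs(t[:, None] - t[None, :])
    dy = np.abs(y[:, None] - y[None, :])
    mask = dt > 0                      # skip the diagonal (and any repeated times)
    return np.max(dy[mask] / dt[mask])

rng = np.random.default_rng(1)
t = np.sort(rng.uniform(0, 48, size=200))            # synthetic sample times (hours)
period = 12.42                                       # tide-like period, illustration only
clean = 2.0 * np.sin(2 * np.pi * t / period)         # smooth synthetic signal
noisy = clean + rng.normal(scale=0.05, size=t.size)  # same signal plus measurement noise

true_L = 2.0 * 2 * np.pi / period   # max |derivative| of the clean signal
clean_bound = observed_lipschitz_lower_bound(t, clean)
noisy_bound = observed_lipschitz_lower_bound(t, noisy)

print(f"true constant (clean signal): {true_L:.3f}")
print(f"lower bound from clean data:  {clean_bound:.3f}")
print(f"lower bound from noisy data:  {noisy_bound:.3f}")
```

In a typical run the noisy bound is far larger than the true constant, because closely spaced samples turn small measurement errors into steep apparent slopes.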

Thanks!