A lot of proofs in linear algebra use the fact that any square matrix can be written in Jordan normal form.

Unfortunately, I can't see why this is the case. I didn't follow what Wikipedia said, and I can't find a proof that I understand. Can you please tell me what, in your opinion, is the simplest proof of it?

-

I don't think there is a simple proof of this. The fact that every square matrix over an algebraically closed field has a Jordan form is a nontrivial theorem, and you can see proofs in most books in linear algebra. – Mark Oct 16 '21 at 20:11

-

I feel the antidote to doubt is to work examples, ones where all the eigenvalues are integers, exercises intended for hand calculation. In particular, this means, given $A,$ we can find an integer matrix $P$ such that $P^{-1} AP = J.$ This way, the only fractions occur in $P^{-1}$ because we divide by $\det P.$ – Will Jagy Oct 16 '21 at 20:37

-

You may find https://math.stackexchange.com/questions/420965/how-does-one-obtain-the-jordan-normal-form-of-a-matrix-a-by-studying-xi-a helpful. – Rob Arthan Oct 16 '21 at 21:02

-

I keep a text file of URLs for questions I enjoy, collected by topic. About two dozen are on Jordan form, for example https://math.stackexchange.com/questions/2586921/jordan-decomposition-help-with-calculation-of-transformationmatrices and https://math.stackexchange.com/questions/2667609/problem-with-generalized-eigenvectors-in-a-3x3-matrix ........... https://math.stackexchange.com/questions/2667415/what-is-the-minimal-polynomial-of-a – Will Jagy Oct 16 '21 at 21:03

1 Answer

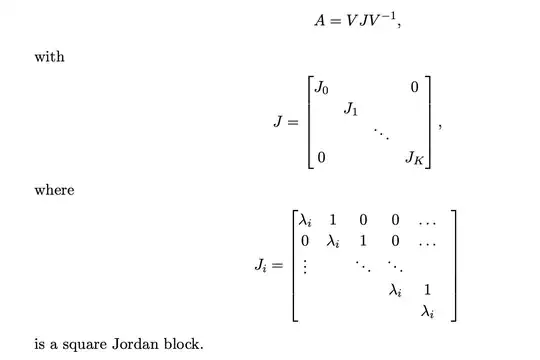

If $A$ is $n \times n$, let $f$ be the corresponding endomorphism of $V = \mathbf{C}^n$.

Then $V$ can be given a $\mathbf{C}[X]$-module structure by defining $P(X)\cdot v = P(f)(v)$.
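To make the action $P(X)\cdot v = P(f)(v)$ concrete, here is a minimal Python sketch. The helper names `mat_vec` and `act` and the example matrix are my own illustration, not part of the argument; the matrix is an assumption chosen so that its characteristic polynomial is $(X-3)^2$.

```python
def mat_vec(A, v):
    """Multiply a square matrix A (given as a list of rows) by a vector v."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]


def act(coeffs, A, v):
    """Return P(A) v, where coeffs lists the coefficients of P, highest degree first.

    Horner's rule is used, so only matrix-vector products are needed.
    """
    result = [0] * len(v)
    for c in coeffs:
        # result <- A * result + c * v
        result = [r + c * x for r, x in zip(mat_vec(A, result), v)]
    return result


# Illustrative matrix (an assumption): trace 6, determinant 9.
A = [[5, 4],
     [-1, 1]]
v = [1, 0]

print(act([1, -3], A, v))     # (X - 3) . v
print(act([1, -6, 9], A, v))  # (X - 3)^2 . v
```

The first call returns the nonzero vector `[2, -1]` and the second returns `[0, 0]`, so $(X-3)^2$ kills $v$ while $X-3$ does not. This is the behaviour of the element $1$ in a module of the form $\mathbf{C}[X]/(X-3)^2$.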

A system of representatives for the irreducible elements of $\mathbf{C}[X]$ is given by $X - \lambda, \ \lambda \in \mathbf{C}$. By the structure theorem for finitely generated modules over a PID, $V$ is isomorphic to a finite direct sum of modules of the form $\mathbf{C}[X]/(X-\lambda)^k$. (A summand $\mathbf{C}[X]$ cannot occur because $V$ is finite-dimensional over $\mathbf{C}$.)

This expression of $V$ as a direct sum is also a direct sum of $\mathbf{C}$-vector subspaces, which will be the subspaces on which the Jordan blocks act. Moreover, since each summand is closed under multiplication by $X$, it is stable under $f$.

The only thing left to check is that $f$ acts as a Jordan block on each summand. Without loss of generality, we may assume that the summand is $\mathbf{C}[X]/(X-\lambda)^k$ and that $f$ is multiplication by $X$.

Then it is easy to check that the matrix of $f$ in the basis $((X-\lambda)^{k-1},(X - \lambda)^{k-2},\dots,X - \lambda, 1)$ is the $k \times k$ Jordan block with diagonal element $\lambda$.
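In case it helps, here is that check written out as a sketch. The labels $e_i$ are my own notation for the basis vectors above, namely $e_i = (X-\lambda)^{k-i}$ for $i = 1, \dots, k$. Writing $X = (X - \lambda) + \lambda$ gives, for every $j \ge 0$,

$$X \cdot (X-\lambda)^{j} = (X-\lambda)^{j+1} + \lambda\,(X-\lambda)^{j},$$

and $(X-\lambda)^k = 0$ in the quotient. Hence

$$f(e_1) = \lambda e_1, \qquad f(e_i) = e_{i-1} + \lambda e_i \quad (2 \le i \le k).$$

For example, with $k = 3$ the columns $f(e_1), f(e_2), f(e_3)$ give

$$\begin{pmatrix} \lambda & 1 & 0 \\ 0 & \lambda & 1 \\ 0 & 0 & \lambda \end{pmatrix}.$$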

Of course, all of this is close to trivial with the structure theorem, but every other proof I've seen of the existence of the Jordan decomposition amounts to rewriting a proof of the structure theorem in vector space language. So the most transparent thing is just to read a proof of the existence part of the structure theorem.