I think what you're missing is that there are two different and independent ways to describe (classically) valid propositional formulas:

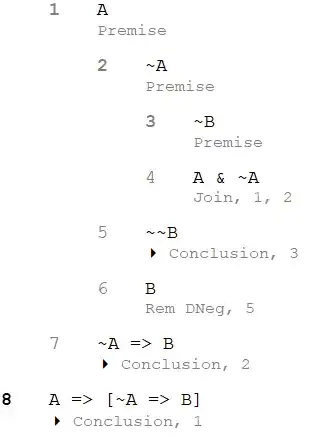

First, by defining a proof system (such as a Hilbert calculus, or natural deduction, or sequent calculus) with particular axioms and inference rules, and asking: is there a proof of such-and-such formula in the system.

Second, by using truth tables to define the truth value of formulas directly:

$$
\begin{array}{cc|c} A & B & A\land B \\ \hline F & F & F \\ F & T & F \\ T & F & F \\ T & T & T \end{array}
\qquad
\begin{array}{cc|c} A & B & A\lor B \\ \hline F & F & F \\ F & T & T \\ T & F & T \\ T & T & T \end{array}
\qquad
\begin{array}{cc|c} A & B & A\to B \\ \hline F & F & T \\ F & T & T \\ T & F & F \\ T & T & T \end{array}
\qquad
\begin{array}{c|c} A & \neg A \\ \hline F & T \\ T & F \end{array}
$$

For every formula, we can use the truth tables for the connectives to compute an overall truth value for every assignment of truth values to the propositional variables in it. There are finitely many such assignments ($2^n$ for a formula with $n$ distinct variables), so we can do all of it systematically with pencil and paper. If the result is $T$ for all of them, the formula we're evaluating is valid.
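The procedure is easy to mechanize. Below is a minimal Python sketch; the tuple encoding of formulas and the function names `variables`, `evaluate` and `is_valid` are choices made only for this illustration, not anything standard. It checks Peirce's law $((A\to B)\to A)\to A$, which is classically valid, and $A\to B$, which is not.

```python
from itertools import product

# Formulas are nested tuples (an encoding chosen only for this sketch):
#   "A"                                          a propositional variable
#   ("not", f)                                   negation
#   ("and", f, g), ("or", f, g), ("imp", f, g)   binary connectives

def variables(f):
    """Collect the propositional variables occurring in f."""
    if isinstance(f, str):
        return {f}
    return set().union(*(variables(g) for g in f[1:]))

def evaluate(f, v):
    """Truth value of f under assignment v (a dict from variables to bools),
    computed connective by connective as in the truth tables."""
    if isinstance(f, str):
        return v[f]
    if f[0] == "not":
        return not evaluate(f[1], v)
    a, b = evaluate(f[1], v), evaluate(f[2], v)
    if f[0] == "and":
        return a and b
    if f[0] == "or":
        return a or b
    if f[0] == "imp":
        return (not a) or b  # F only in the row A = T, B = F
    raise ValueError(f"unknown connective {f[0]!r}")

def is_valid(f):
    """Try all 2**n assignments to the n variables of f."""
    vs = sorted(variables(f))
    return all(evaluate(f, dict(zip(vs, row)))
               for row in product([False, True], repeat=len(vs)))

peirce = ("imp", ("imp", ("imp", "A", "B"), "A"), "A")  # ((A -> B) -> A) -> A
print(is_valid(peirce))             # True
print(is_valid(("imp", "A", "B")))  # False: fails when A = T, B = F
```

Because it enumerates all $2^n$ rows, this is exponential in the number of variables, but it always terminates, which is exactly the point.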

You see that the second of these approaches does not involve declaring anything to be an axiom: we simply have tables that describe in full detail how each connective behaves.

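The wonderful thing is that the two descriptions lead to exactly the same formulas being "valid": every formula that has a proof comes out $T$ under every assignment (soundness), and every formula that comes out $T$ under every assignment has a proof (completeness). That's a non-trivial claim, one that typically takes at least a handful of pages of a mathematical logic textbook to prove.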
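As a small concrete instance of this agreement (not, of course, a proof of it), here is a Python sketch of a Hilbert-style proof checker. It assumes one common axiomatization for $\neg$ and $\to$ (often attributed to Łukasiewicz): the schemas $\varphi\to(\psi\to\varphi)$, $(\varphi\to(\psi\to\chi))\to((\varphi\to\psi)\to(\varphi\to\chi))$ and $(\neg\varphi\to\neg\psi)\to(\psi\to\varphi)$, with modus ponens as the only rule. The tuple encoding and all function names are hypothetical. The checker accepts a five-line proof of $A\to A$, and a truth-table test confirms that every line of that proof is valid.

```python
from itertools import product

# Formulas use only "not" and "imp" (nested tuples; hypothetical encoding).
def imp(a, b):
    return ("imp", a, b)

def is_imp(f):
    return isinstance(f, tuple) and f[0] == "imp"

def is_not(f):
    return isinstance(f, tuple) and f[0] == "not"

def ax1(f):  # phi -> (psi -> phi)
    return is_imp(f) and is_imp(f[2]) and f[2][2] == f[1]

def ax2(f):  # (phi -> (psi -> chi)) -> ((phi -> psi) -> (phi -> chi))
    if not (is_imp(f) and is_imp(f[1]) and is_imp(f[1][2])):
        return False
    phi, psi, chi = f[1][1], f[1][2][1], f[1][2][2]
    return f[2] == imp(imp(phi, psi), imp(phi, chi))

def ax3(f):  # (not phi -> not psi) -> (psi -> phi)
    if not (is_imp(f) and is_imp(f[1]) and is_not(f[1][1]) and is_not(f[1][2])):
        return False
    phi, psi = f[1][1][1], f[1][2][1]
    return f[2] == imp(psi, phi)

def check_proof(lines):
    """Accept iff every line is an axiom instance or follows by modus
    ponens from two earlier lines g and g -> f."""
    for i, f in enumerate(lines):
        earlier = lines[:i]
        by_mp = any(imp(g, f) in earlier for g in earlier)
        if not (ax1(f) or ax2(f) or ax3(f) or by_mp):
            return False
    return True

def value(f, v):
    """Truth-table value of f under assignment v."""
    if isinstance(f, str):
        return v[f]
    if f[0] == "not":
        return not value(f[1], v)
    return (not value(f[1], v)) or value(f[2], v)  # "imp"

def atoms(f):
    return {f} if isinstance(f, str) else set().union(*(atoms(g) for g in f[1:]))

def valid(f):
    vs = sorted(atoms(f))
    return all(value(f, dict(zip(vs, bits)))
               for bits in product([False, True], repeat=len(vs)))

A = "A"
proof = [
    imp(imp(A, imp(imp(A, A), A)), imp(imp(A, imp(A, A)), imp(A, A))),  # axiom 2
    imp(A, imp(imp(A, A), A)),                                          # axiom 1
    imp(imp(A, imp(A, A)), imp(A, A)),                                  # MP on lines 2, 1
    imp(A, imp(A, A)),                                                  # axiom 1
    imp(A, A),                                                          # MP on lines 4, 3
]
print(check_proof(proof))            # True: a syntactic proof of A -> A
print(all(valid(f) for f in proof))  # True: every line is also truth-table valid
```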

This particularly nice situation, where we have both a syntactic way of determining what is valid (namely proofs) and a semantic way (namely direct evaluation of truth tables), and the two agree, is the happy ideal that we try to preserve as far as we can when we move to predicate logic (where the $=$ symbol becomes possible).

As it turns out, we cannot get things quite as nice as in the propositional case. The semantic definition of "valid" in predicate logic requires that a formula be true in every structure, and there are infinitely many structures to consider, many of them infinite themselves (whereas for a propositional formula there are always finitely many relevant truth assignments to try). This means that formal proofs take on additional importance in predicate logic: they're the way of showing that a formula is valid that we actually have a chance of writing down on a finite amount of paper! (Gödel's completeness theorem guarantees that every valid formula does have such a proof.)
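To see why a finite search cannot replace proofs here, consider (as an illustration of our own choosing) the sentence "if the unary function $f$ is injective, then it is surjective", in a language with just $f$ and $=$. The Python sketch below (function name hypothetical) checks it in every structure of size 1 to 5 by listing all possible tables for $f$, and finds no counterexample; indeed it holds in every finite structure. Yet it is not valid: in the natural numbers with $f(x)=x+1$, $f$ is injective but $0$ is not in its range, and no search through finite structures will ever find that.

```python
from itertools import product

def holds_in_all_structures_of_size(n):
    """Check the sentence
         (forall x y. f(x) = f(y) -> x = y) -> (forall y. exists x. f(x) = y)
    in every structure with domain {0, ..., n-1} and a unary function f.
    Every structure of size n is isomorphic to one of these."""
    domain = set(range(n))
    for table in product(range(n), repeat=n):  # table[x] is the value f(x)
        image = set(table)
        injective = len(image) == n
        surjective = image == domain
        if injective and not surjective:
            return False  # a counterexample of size n
    return True

for n in range(1, 6):
    print(n, holds_in_all_structures_of_size(n))  # True for every n
```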

In predicate logic, the word "tautology" is, by pure convention, restricted to those valid formulas that we know are valid because they are substitution instances of valid propositional formulas. They are not all the valid predicate-logic formulas, and they are not "more valid" than the other formulas; they're simply a class of valid formulas that have particularly straightforward proofs, and it's considered useful to give that property a name.
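The convention can be made mechanical. Here is a minimal Python sketch, assuming a tuple encoding of predicate-logic formulas and hypothetical function names: it replaces every maximal subformula not built by a propositional connective (atomic formulas, equations and quantified formulas alike) with a propositional variable, and then runs the truth-table test on the result. Both $\forall x\,P(x)\to P(c)$ and $\forall x\,(x=x)$ are valid, but neither is a tautology in this sense, whereas $\forall x\,P(x)\lor\neg\forall x\,P(x)$ is one.

```python
from itertools import product

CONNECTIVES = {"not", "and", "or", "imp"}

# Predicate-logic formulas as nested tuples (an encoding for this sketch only):
#   ("not", f), ("and", f, g), ("or", f, g), ("imp", f, g)   connectives
#   ("P", "x"), ("=", "x", "y"), ("forall", "x", f), ...      everything else

def skeleton(f, names):
    """Replace each maximal subformula not built by a connective with a
    propositional variable; identical subformulas get the same variable."""
    if f[0] in CONNECTIVES:
        return (f[0],) + tuple(skeleton(g, names) for g in f[1:])
    if f not in names:
        names[f] = f"p{len(names)}"
    return names[f]

def value(f, v):
    """Truth value of a propositional skeleton under assignment v."""
    if isinstance(f, str):
        return v[f]
    args = [value(g, v) for g in f[1:]]
    if f[0] == "not":
        return not args[0]
    if f[0] == "and":
        return args[0] and args[1]
    if f[0] == "or":
        return args[0] or args[1]
    return (not args[0]) or args[1]  # "imp"

def is_tautology(f):
    """True iff the propositional skeleton of f passes the truth-table test,
    i.e. iff f is a substitution instance of a valid propositional formula."""
    names = {}
    s = skeleton(f, names)
    vs = list(names.values())
    return all(value(s, dict(zip(vs, bits)))
               for bits in product([False, True], repeat=len(vs)))

all_p = ("forall", "x", ("P", "x"))
print(is_tautology(("or", all_p, ("not", all_p))))     # True:  shape A or not A
print(is_tautology(("imp", all_p, ("P", "c"))))        # False: shape A -> B, though valid
print(is_tautology(("forall", "x", ("=", "x", "x"))))  # False: shape A, though valid
```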

Alternatively, we could have decided to use the word "tautology" for every predicate-logic formula that is true in every structure (that is, independently of any "non-logical axioms"). I think there are even a few authors who do this, though I cannot name one offhand. It's just not the meaning most logicians use for the word.