A question in my assignment asks:

Suppose that $X$ and $Y$ are independent with a common uniform distribution over $(0,1)$. Find the probability distribution function of $X+Y$.
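To have something to check a derived formula against, here is a minimal Monte Carlo sketch in Python (standard library only). The function names `simulate_sum` and `empirical_density`, the sample size, the seed and the window half-width `h` are all hypothetical choices made for illustration:

```python
import random

def simulate_sum(n_samples: int = 200_000, seed: int = 0) -> list[float]:
    """Draw n_samples values of X + Y, with X and Y independent Uniform(0, 1)."""
    rng = random.Random(seed)  # fixed seed so reruns give identical samples
    return [rng.random() + rng.random() for _ in range(n_samples)]

def empirical_density(samples: list[float], a: float, h: float = 0.02) -> float:
    """Estimate the density at a as (fraction of samples in [a - h, a + h]) / (2h)."""
    hits = sum(1 for s in samples if a - h <= s <= a + h)
    return hits / (len(samples) * 2 * h)

samples = simulate_sum()
for a in (0.25, 0.5, 1.0, 1.5, 1.75):
    print(f"a = {a:.2f}: estimated density {empirical_density(samples, a):.3f}")
```

Any candidate formula for the density of $X+Y$ on $0 < a < 2$ should roughly agree with these estimates; a smaller `h` reduces bias but increases noise.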

First, I let $a$ denote a value of $X + Y$, so that $0 < a < 2$. I can then write the convolution formula:

$$f_{X+Y}(a) = \int_{-\infty}^{\infty} f_X(a-y)\,f_Y(y)\,dy$$
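The convolution integral can also be evaluated numerically. This minimal Python sketch assumes the midpoint rule and replaces $(-\infty, \infty)$ by the finite interval $[-5, 5]$ (an arbitrary, hypothetical cutoff); `uniform_pdf` and `convolution_density` are illustrative names, not library functions:

```python
def uniform_pdf(t: float) -> float:
    """Density of Uniform(0, 1): 1 on (0, 1), 0 elsewhere."""
    return 1.0 if 0.0 < t < 1.0 else 0.0

def convolution_density(a: float, lo: float = -5.0, hi: float = 5.0, n: int = 100_000) -> float:
    """Approximate the integral of f_X(a - y) * f_Y(y) dy over [lo, hi] with the midpoint rule.

    [lo, hi] stands in for (-inf, inf); it is wide enough here because
    both densities vanish outside (0, 1).
    """
    width = (hi - lo) / n
    total = 0.0
    for i in range(n):
        y = lo + (i + 0.5) * width  # midpoint of the i-th subinterval
        total += uniform_pdf(a - y) * uniform_pdf(y)
    return total * width

print(convolution_density(0.5))
```

For example, `convolution_density(0.5)` approximates $f_{X+Y}(0.5)$; it returns $0$ for any $a$ outside $(0, 2)$, consistent with the range of $X+Y$.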

So far so good; this is mostly just plugging into the formula. Now I'm stuck. A quick look at the answer key tells me that the above is also equal to:

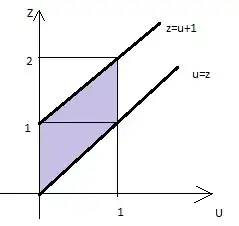

$$\begin{aligned}
f_{X+Y}(a) &= \int_0^1 f_X(a-y)\cdot 1\,dy \\
&= \int_0^1 f_X(a-y)\,dy
\end{aligned}$$
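One way to look at the answer key's step numerically: the minimal Python sketch below (the helper names, the cutoffs $\pm 5$ and the test value $a = 0.7$ are hypothetical choices) splits the convolution integral at $y = 0$ and $y = 1$ and compares the middle piece with the answer key's form, in which $f_Y(y)$ is replaced by $1$:

```python
def uniform_pdf(t: float) -> float:
    """Density of Uniform(0, 1): 1 on (0, 1), 0 elsewhere."""
    return 1.0 if 0.0 < t < 1.0 else 0.0

def midpoint_integral(g, lo: float, hi: float, n: int = 100_000) -> float:
    """Approximate the integral of g over [lo, hi] with the midpoint rule."""
    width = (hi - lo) / n
    return width * sum(g(lo + (i + 0.5) * width) for i in range(n))

def split_at_support(a: float) -> tuple[float, float, float, float]:
    """Return the (left, middle, right, answer_key) integrals for a given a."""
    def full_integrand(y: float) -> float:
        return uniform_pdf(a - y) * uniform_pdf(y)  # f_X(a - y) f_Y(y)

    def answer_key_integrand(y: float) -> float:
        return uniform_pdf(a - y)  # f_Y(y) replaced by 1

    left = midpoint_integral(full_integrand, -5.0, 0.0)   # y < 0
    middle = midpoint_integral(full_integrand, 0.0, 1.0)  # 0 < y < 1
    right = midpoint_integral(full_integrand, 1.0, 5.0)   # y > 1
    answer_key = midpoint_integral(answer_key_integrand, 0.0, 1.0)
    return left, middle, right, answer_key

print(split_at_support(0.7))
```

Running it, the two outer pieces come out as exactly zero and the middle piece agrees with the answer-key integral.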

Can someone kindly explain the logic behind the step that changes the limits of integration from $(-\infty, \infty)$ to $(0, 1)$ and then sets $f_Y(y) = 1$? How does that work, and does it have anything to do with the fact that I'm integrating with respect to $y$?

Thank you in advance!