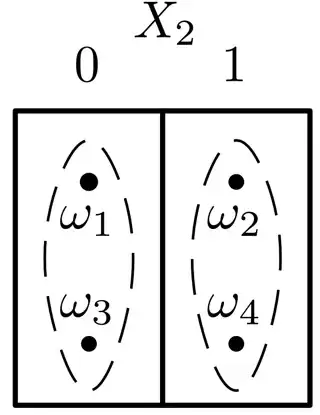

I don't know if these diagrams will be of any use to you. I find it useful to think of conditioning as putting a transparency over the sample space.

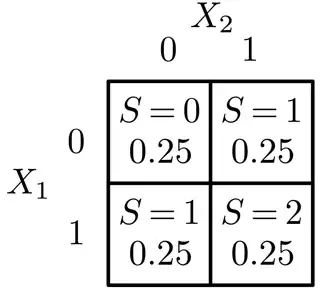

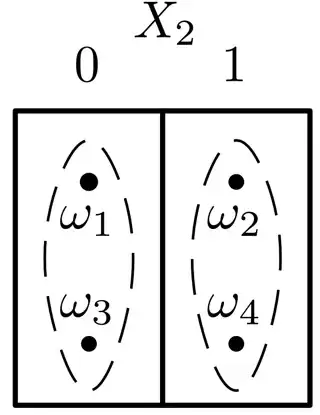

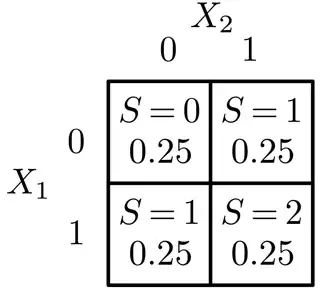

Let $X_1, X_2$ be two fair, independent coinflips. Denote the outcome of heads by $0$ and the outcome of tails by $1$. Let $S = X_1 + X_2$. The sample space $\Omega$ has four points: $\{(0,0), (0,1), (1,0), (1,1)\} = \{\omega_1, \omega_2, \omega_3, \omega_4\}$.

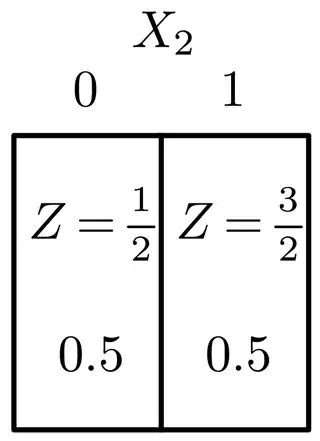

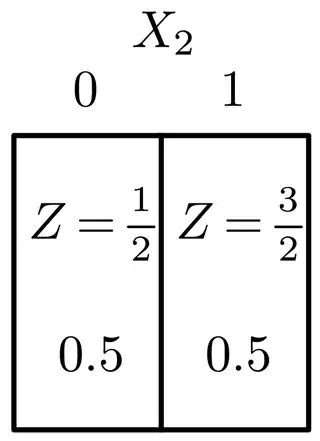

First consider the inner conditional expectation, $Z = E[S | X_2]$. Note that $Z$ is a random variable: for each $\omega \in \Omega$, $Z(\omega)$ is a real number. It's simply that $Z(\omega)$ is constant on the sets $X_2^{-1}(\{0\}) = \{(0,0), (1,0)\} = \{\omega_1, \omega_3\}$ and $X_2^{-1}(\{1\}) = \{(0,1),(1,1)\} = \{\omega_2, \omega_4\}.$ In diagram format,

What is the constant value of $Z$ when $X_2 = 0$? It is the conditional expectation of $S$ given $X_2 = 0$, which is an average of $S(\omega)$ over the $\omega$'s in the set $\{\omega : X_2(\omega) = 0\}$:

$$E[S | X_2](\omega_1) = E[S|X_2](\omega_3) = 0.5.$$

Similarly,

$$E[S | X_2](\omega_2) = E[S|X_2](\omega_4) = 1.5.$$
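
Written out, using the fact that each of the four points has probability $1/4$ (so the two points in each set are equally weighted), the two partial averages are:

$$E[S | X_2 = 0] = \frac{S(\omega_1) + S(\omega_3)}{2} = \frac{0 + 1}{2} = 0.5, \qquad E[S | X_2 = 1] = \frac{S(\omega_2) + S(\omega_4)}{2} = \frac{1 + 2}{2} = 1.5.$$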

Now what happens when you do $E[E[S|X_2]]$? You average again. The rule $E[E[S|X_2]] = E[S]$ can be (roughly) read as "the average of the partial averages is the full average".
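
For this example the check is short: $E[E[S|X_2]] = \tfrac{1}{2}(0.5) + \tfrac{1}{2}(1.5) = 1 = E[S]$. If you'd like to see it by brute force, here is a minimal Python sketch that enumerates the four-point sample space. The names (`omega`, `prob`, `cond_exp_given_X2`) are just my own illustrative choices, and it uses exact fractions to avoid rounding.

```python
from fractions import Fraction
from itertools import product

# Sample space: points (x1, x2) with heads = 0 and tails = 1.
# Both flips are fair and independent, so each point has probability 1/4.
omega = list(product([0, 1], repeat=2))
prob = {w: Fraction(1, 4) for w in omega}


def S(w):
    """S = X1 + X2."""
    return w[0] + w[1]


def X2(w):
    """Second coordinate of the sample point."""
    return w[1]


def cond_exp_given_X2(w):
    """Z(w) = E[S | X2](w): the probability-weighted average of S over
    all sample points that share w's value of X2."""
    block = [v for v in omega if X2(v) == X2(w)]
    mass = sum(prob[v] for v in block)
    return sum(S(v) * prob[v] for v in block) / mass


# Z is a random variable: one value per sample point, constant on each block.
Z = {w: cond_exp_given_X2(w) for w in omega}

E_S = sum(S(w) * prob[w] for w in omega)  # the full average
E_Z = sum(Z[w] * prob[w] for w in omega)  # the average of the partial averages

print(Z)
print(E_S, E_Z)  # both equal 1
```

Note that `Z` takes only two distinct values, $1/2$ on $\{\omega_1, \omega_3\}$ and $3/2$ on $\{\omega_2, \omega_4\}$, which is exactly the "constant on the sets" picture described above.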