Systems of equations are taught fairly early in the American curriculum. We are taught substitution and elimination as methods for solving them, and we are taught how to use matrices or graphs as alternative ways to encode or visualize them.

There are linear systems of equations, there are non-linear systems of equations, and there are systems of equations with one or many variables. However, to this day, I still do not really understand what systems of equations are. I've tried to find an abstract interpretation of systems of equations, but without much success. I am very interested in figuring out this "systems of equations abstraction" because there are canonical statements (e.g. the classic "You need as many equations as variables to find a solution") that I would very much like to prove.

I've tried to come up with some semblance of an abstraction myself, but I have not made much progress. I will illustrate the case of one equation with one variable and the case of two equations with two variables (which is where I run into trouble).

One Equation - One Variable

Consider the purely arbitrary equation: $$a = b +\alpha x \ \text{ where}\ \alpha \neq 0$$

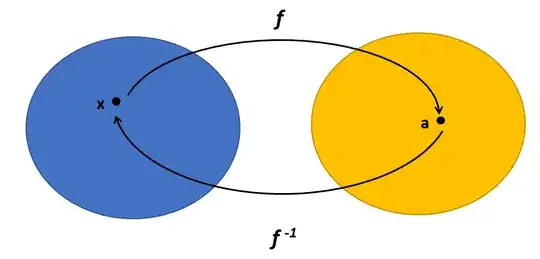

The effort to solve this equation can be rephrased as "Find me the $x$ that maps to $a$ through the function $f(x) = b + \alpha x$." In effect, the question asks us to find the inverse function $f^{-1}$ that outputs $x$ when given $a$ as input.

Solving for $x$ by subtracting $b$ from both sides and dividing both sides by $\alpha$ effectively amounts to determining the inverse function $f^{-1}(x')=\frac{x' -b}{\alpha}$. For future purposes, also note that this can be written as $f^{-1}\big(f(x)\big)=\frac{f(x) -b}{\alpha}$. Plugging in $a$ for $x'$, we arrive at the solution to this equation, which is $f^{-1}(a)=\frac{a-b}{\alpha}$. So far, so good.
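To make this concrete, here is a small worked instance. The numbers $b = 1$, $\alpha = 2$, $a = 7$ are chosen purely for illustration and carry no special meaning:

$$f(x) = 1 + 2x, \qquad f^{-1}(x') = \frac{x' - 1}{2}, \qquad f^{-1}(7) = \frac{7 - 1}{2} = 3$$

Checking in the forward direction, $f(3) = 1 + 2\cdot 3 = 7 = a$, so $f^{-1}$ does return the $x$ that maps to $a$.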

Two Equations - Two Variables

Consider the following two arbitrary equations of two variables:

$$a=b+\alpha x + \beta y \ \text{ where}\ \alpha, \beta \neq 0$$

$$c=d +\gamma x + \delta y \ \text{ where}\ \gamma, \delta\neq 0$$

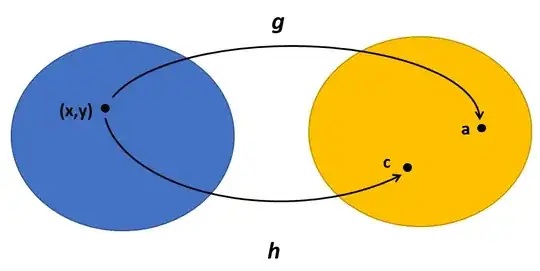

Following the same logic of the "One Equation - One Variable" section, solving for $x$ and $y$ can be viewed as constructing the inverse functions for the two above equations, which can be recast as specific instances of:

$$g\big( (x,y) \big) = b+\alpha x + \beta y$$

$$h \big ( (x,y) \big ) = d +\gamma x + \delta y$$

As to how one solves for these inverses, I have not the faintest clue. Obviously, we could revert to the standard methods of substitution and arrive at the following bulky solutions:

$$ y = \frac{\alpha \Big ( h \big((x,y)\big) -d \Big) -\gamma g\big( (x,y) \big)+\gamma b}{\delta \alpha - \beta \gamma}$$

and

$$ x = \frac{g\big( (x,y) \big) -b - \beta y}{\alpha}$$

However, these are not inverses of the functions $g$ and $h$. In fact, I don't really even know WHAT these equations represent. If you were to plug the value of $y$ into the final equation for $x$ (I omitted that for brevity), you would see that the $y$ equation and the $x$ equation both have $g\big ( (x,y) \big)$ and $h \big ( (x,y) \big)$ in them, so these equations are inverse-like. That is to say, determining $(x,y)$ requires information from both $g$ and $h$, which provides the first clue as to how one might prove, "You need as many equations as variables to find a solution." Linking this back to the "One Equation - One Variable" section, recall that $f^{-1}\big(f(x)\big)=x = \frac{f(x)-b}{\alpha}$ depends on only one function in order to solve.
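For reference, if I carry out the substitution of $y$ into the $x$ equation, I believe it simplifies to

$$ x = \frac{\delta \Big( g\big( (x,y) \big) - b \Big) - \beta \Big( h\big( (x,y) \big) - d \Big)}{\delta \alpha - \beta \gamma}$$

Below is a minimal Python sketch that checks the $y$ and $x$ expressions numerically. The function name `recover_xy` and all of the numeric values are my own arbitrary choices for illustration. Note that both expressions divide by $\delta\alpha - \beta\gamma$, so the sketch assumes that quantity is nonzero, which does not follow from $\alpha, \beta, \gamma, \delta \neq 0$ alone.

```python
from fractions import Fraction


def g(x, y, b, alpha, beta):
    """First equation viewed as a function of the pair (x, y)."""
    return b + alpha * x + beta * y


def h(x, y, d, gamma, delta):
    """Second equation viewed as a function of the pair (x, y)."""
    return d + gamma * x + delta * y


def recover_xy(g_val, h_val, b, d, alpha, beta, gamma, delta):
    """Recover (x, y) from the values of g and h using the substitution formulas.

    Hypothetical helper for illustration only.
    Assumes delta*alpha - beta*gamma != 0 (an extra condition beyond nonzero coefficients).
    """
    det = delta * alpha - beta * gamma
    if det == 0:
        raise ValueError("delta*alpha - beta*gamma is zero; the formulas do not apply")
    y = (alpha * (h_val - d) - gamma * g_val + gamma * b) / det
    x = (g_val - b - beta * y) / alpha
    return x, y


# Arbitrary illustrative coefficients (exact rationals avoid rounding noise).
b, d = Fraction(1), Fraction(-2)
alpha, beta, gamma, delta = Fraction(2), Fraction(3), Fraction(5), Fraction(7)

# Pick a point, push it through g and h, then try to recover it.
x0, y0 = Fraction(4), Fraction(-1)
a = g(x0, y0, b, alpha, beta)
c = h(x0, y0, d, gamma, delta)

x_rec, y_rec = recover_xy(a, c, b, d, alpha, beta, gamma, delta)

# The simplified expression for x, which uses both g and h directly.
x_closed = (delta * (a - b) - beta * (c - d)) / (delta * alpha - beta * gamma)

print(x_rec, y_rec, x_closed)
```

Neither `a` nor `c` alone is enough to call `recover_xy`; it needs both values, which matches the observation that determining $(x,y)$ requires information from both $g$ and $h$.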

Hopefully I did not completely botch this question and was able to sufficiently convey what I am after. Any insights would be greatly appreciated. Cheers~