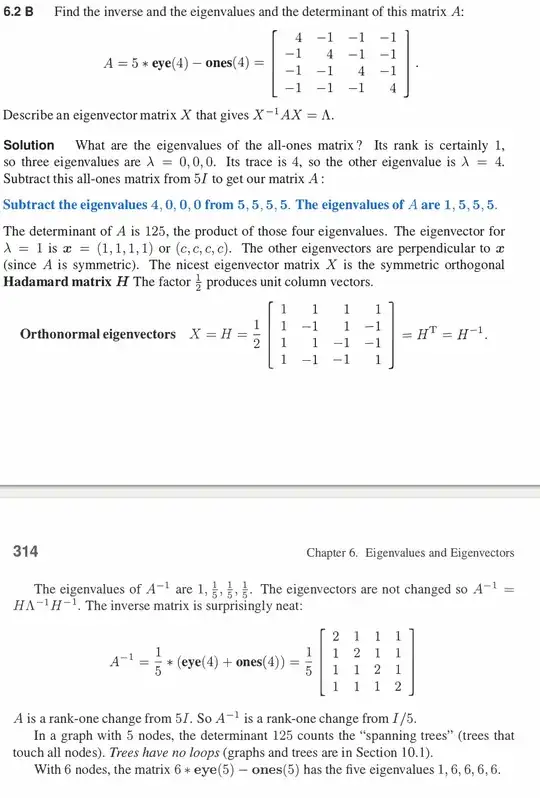

In the blue bolded line, the author claims that because A = 5*eye(4) - ones(4), eigenvalues(A) = eigenvalues(5*eye(4)) - eigenvalues(ones(4)).

$Ax = \lambda_A x$

$(5\,\mathrm{eye}(4) - \mathrm{ones}(4))\,x = \lambda_A x$

$(\lambda_{5\,\mathrm{eye}(4)} - \lambda_{\mathrm{ones}(4)})\,x = \lambda_A x$

The jump between the previous two equalities is only possible if x is an eigenvector of both 5*eye(4) and ones(4), i.e. if the two matrices share the eigenvectors corresponding to those eigenvalues, right? So why didn't the person who wrote this solution make that argument? I do not think it is possible in general to combine the eigenvalues of the summands to get the eigenvalues of a sum (or difference), as the solution author is doing.
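
To make the concern concrete, here is a quick sanity check I sketched in plain Python. The helper `matvec`, the variable names, and the 2x2 matrices `B` and `C` are my own illustration, not part of the solution. It checks that the eigenvectors of ones(4) are also eigenvectors of A (every nonzero vector is an eigenvector of 5*eye(4), so the two summands do share eigenvectors here), and then shows a pair of matrices where combining eigenvalues fails.

```python
def matvec(M, v):
    """Multiply a matrix (list of rows) by a vector (list)."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

n = 4
J = [[1] * n for _ in range(n)]                                 # ones(4)
A = [[(5 if i == j else 0) - 1 for j in range(n)] for i in range(n)]  # 5*eye(4) - ones(4)

# Eigenvectors of ones(4): the all-ones vector (eigenvalue 4) and
# vectors whose entries sum to zero (eigenvalue 0).
pairs = [
    ([1, 1, 1, 1], 4),
    ([1, -1, 0, 0], 0),
    ([1, 0, -1, 0], 0),
    ([1, 0, 0, -1], 0),
]

results = []
for v, lam_J in pairs:
    lam_A = 5 - lam_J  # the value the solution's shortcut predicts
    ok = (matvec(J, v) == [lam_J * x for x in v]
          and matvec(A, v) == [lam_A * x for x in v])
    results.append((lam_A, ok))
print(results)

# A case where the shortcut fails: B and C are triangular with zero
# diagonals, so all their eigenvalues are 0, yet B + C has eigenvalues 1 and -1.
B = [[0, 1], [0, 0]]
C = [[0, 0], [1, 0]]
S = [[B[i][j] + C[i][j] for j in range(2)] for i in range(2)]
print(matvec(S, [1, 1]), matvec(S, [1, -1]))
```

If I read this correctly, the shortcut happens to work for A only because x is a common eigenvector of both summands, which is exactly the step the solution leaves unstated.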