This question is similar to this one, but I can't find what goes wrong in my answer. The full statement of the problem is:

If a 1 meter rope is cut at two uniformly randomly chosen points (to give three pieces), what is the average length of the largest piece?
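The target value can be estimated numerically. Below is a minimal Monte Carlo sketch in Python. The function name `largest_piece_mean`, the trial count and the seed are arbitrary choices made for illustration. It only estimates the expected largest piece. It does not locate the error in the calculation that follows.

```python
import random


def largest_piece_mean(trials: int = 200_000, seed: int = 0) -> float:
    """Monte Carlo estimate of the expected length of the largest of the
    three pieces when a rope of length 1 is cut at two independent
    uniformly random points."""
    rng = random.Random(seed)  # seeded so that runs are reproducible
    total = 0.0
    for _ in range(trials):
        # Two independent uniform cut points, sorted so that x < y.
        x, y = sorted((rng.random(), rng.random()))
        # The three pieces, left to right, are x, y - x and 1 - y.
        total += max(x, y - x, 1 - y)
    return total / trials


if __name__ == "__main__":
    estimate = largest_piece_mean()
    print(f"estimate = {estimate:.4f}, 11/18 = {11 / 18:.4f}")
```

With a few hundred thousand trials, the estimate should land close to $11/18 \approx 0.611$.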

What I am doing:

`0 ------- x ------- y ------- 1`

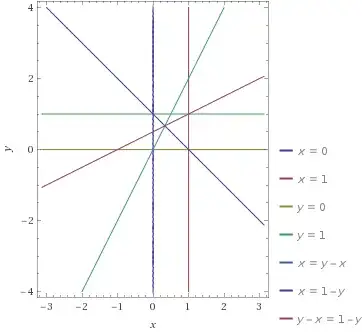

I take the rope to have length 1 and make one cut at distance $x$ from the left end and the other at distance $y$ from the left end, with $y > x$. Then I consider two cases:

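If $x \ge \frac{1}{2}$, then wherever the second cut $y$ falls, the leftmost piece (of length $x$) is the largest. Since $y$ ranges over an interval of length $1-x$, the contribution to the expectation is $$\int_{1/2}^{1} x(1-x)\,dx = \frac{1}{12}.$$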
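The value of this integral can be verified exactly with Python's standard `fractions` module. The sketch below evaluates the antiderivative $x^2/2 - x^3/3$ of $x(1-x)$ at the two endpoints. The function name `case1_integral` is hypothetical and chosen only for this check.

```python
from fractions import Fraction


def case1_integral() -> Fraction:
    """Exact value of the integral of x*(1 - x) over [1/2, 1]."""
    def antiderivative(x: Fraction) -> Fraction:
        # Antiderivative of x - x**2.
        return x**2 / 2 - x**3 / 3

    return antiderivative(Fraction(1)) - antiderivative(Fraction(1, 2))


print(case1_integral())  # 1/12
```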

If $\frac{1}{3} \le x \le \frac{1}{2}$, then for the leftmost piece to be the largest, $y$ must lie between $1-x$ and $2x$ (so that $1 - y \le x$ and $y - x \le x$). This is an interval of length $3x-1$, which gives $$\int_{1/3}^{1/2} x(3x-1)\,dx = \frac{1}{54}.$$
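Both the length of the allowed interval for $y$ and the value of the integral can be checked exactly. In the sketch below, the helper names `y_interval_length` and `case2_integral` are hypothetical. The helper intersects $(x, 1)$ with the two constraints $y \ge 1-x$ and $y \le 2x$. It therefore works for any $x$ in $(0,1)$: it gives $3x-1$ on $[\frac{1}{3}, \frac{1}{2}]$, and $1-x$ for $x \ge \frac{1}{2}$, matching the first case.

```python
from fractions import Fraction


def y_interval_length(x: Fraction) -> Fraction:
    """Length of the set of y in (x, 1) for which the leftmost piece
    (length x) is at least as long as both y - x and 1 - y."""
    lo = max(x, 1 - x)            # y > x and 1 - y <= x
    hi = min(Fraction(1), 2 * x)  # y < 1 and y - x <= x
    return max(Fraction(0), hi - lo)


def case2_integral() -> Fraction:
    """Exact value of the integral of x*(3x - 1) over [1/3, 1/2]."""
    def antiderivative(x: Fraction) -> Fraction:
        # Antiderivative of 3x**2 - x.
        return x**3 - x**2 / 2

    return antiderivative(Fraction(1, 2)) - antiderivative(Fraction(1, 3))


# Spot-check that the interval [1 - x, 2x] has length 3x - 1 in this range.
for x in (Fraction(1, 3), Fraction(2, 5), Fraction(1, 2)):
    assert y_interval_length(x) == 3 * x - 1

print(case2_integral())  # 1/54
```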

So I expected the answer to be $\frac{1}{12} + \frac{1}{54} = \frac{11}{108}$, but this is far off: the correct result is $\frac{11}{18}$.
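The final arithmetic can also be confirmed with exact fractions. The sketch below reuses only the two values stated above. It shows that the sum is indeed $\frac{11}{108}$ and that the quoted correct result is exactly 6 times this sum.

```python
from fractions import Fraction

case1 = Fraction(1, 12)  # value of the first integral
case2 = Fraction(1, 54)  # value of the second integral
total = case1 + case2

print(total)                     # 11/108
print(Fraction(11, 18) / total)  # 6
```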