A probability measure is typically defined as a function $\mathbb{P}: \mathcal{F} \rightarrow [0, 1]$, where $\mathcal{F}$ is a $\sigma$-algebra on a sample space $\Omega$, i.e. a set of events (which are themselves sets of outcomes), so a $\sigma$-algebra is a set of sets.
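As a concrete illustration of these definitions, here is a small sketch for a hypothetical fair six-sided die. Because the sample space is finite, its power set can serve as the $\sigma$-algebra, and $\mathbb{P}$ is literally a function that takes an event (a set) and returns a number in $[0, 1]$. The names `omega`, `F`, `P`, and `power_set` are my own choices for illustration.

```python
from fractions import Fraction
from itertools import chain, combinations

# Hypothetical example: the sample space of a fair six-sided die.
omega = frozenset(range(1, 7))

def power_set(s):
    """All subsets of s; on a finite sample space this is a valid sigma-algebra."""
    items = sorted(s)
    return {
        frozenset(c)
        for c in chain.from_iterable(combinations(items, r) for r in range(len(items) + 1))
    }

F = power_set(omega)  # the sigma-algebra: a set of sets (events)

def P(event):
    """Probability measure P: F -> [0, 1], here with uniform weight on each outcome."""
    if event not in F:
        raise ValueError("P is only defined on events in the sigma-algebra F")
    return Fraction(len(event), len(omega))

even = frozenset({2, 4, 6})
print(len(F))           # 64 events
print(P(even))          # 1/2
print(P(omega))         # 1
print(P(frozenset()))   # 0
```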

It is common to define the (multivariate) Gaussian p.d.f. (or just Gaussian function, i.e. an exponential function) as follows

$$ p(x)=\frac{1}{(2 \pi)^{n / 2} \operatorname{det}(\Sigma)^{1 / 2}} \exp \left(-\frac{1}{2}(x-\mu)^{T} \Sigma^{-1}(x-\mu)\right) $$
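A direct sketch of this formula in Python, assuming NumPy (the function name `gaussian_pdf` is hypothetical). Here `x` is just an ordinary fixed vector, and the function returns a nonnegative number; solving a linear system avoids forming $\Sigma^{-1}$ explicitly.

```python
import numpy as np

def gaussian_pdf(x, mu, Sigma):
    """Evaluate the n-dimensional Gaussian density p(x) at a fixed point x."""
    x = np.asarray(x, dtype=float)
    mu = np.asarray(mu, dtype=float)
    Sigma = np.asarray(Sigma, dtype=float)
    n = mu.shape[0]
    diff = x - mu
    # (x - mu)^T Sigma^{-1} (x - mu), computed by solving Sigma @ y = diff.
    quad = diff @ np.linalg.solve(Sigma, diff)
    norm = (2 * np.pi) ** (n / 2) * np.sqrt(np.linalg.det(Sigma))
    return np.exp(-0.5 * quad) / norm

# Standard 2-D Gaussian evaluated at its mean: 1 / (2*pi), roughly 0.159.
print(gaussian_pdf([0.0, 0.0], [0.0, 0.0], np.eye(2)))
```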

When I look at this expression, I think of $x$ as a dummy variable. However, there are cases where one needs to compute something as a function of a "distribution" (by which I assume they mean a "probability measure"). For example, the KL divergence is a function of two probability measures, yet Gaussian p.d.f.s are used to actually compute it. I know that the KL divergence can also be defined between p.d.f.s, but in the derivation in these notes the author writes

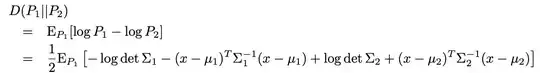

So, he defines the KL divergence $D$ between the probability measures (or distributions, or whatever they are) $P_1$ and $P_2$, and then he plugs in the definition of the Gaussian p.d.f. Note that he took the logarithm of the exponential function, which should explain the last term there. Now, you can see that this KL divergence is an EXPECTATION. Expectations are operators, i.e. functions that take functions as inputs; more precisely, expectations take random variables as inputs (as far as I know), so the expression inside the expectation

$$ -\log \operatorname{det} \Sigma_{1}-\left(x-\mu_{1}\right)^{T} \Sigma_{1}^{-1}\left(x-\mu_{1}\right)+\log \operatorname{det} \Sigma_{2}+\left(x-\mu_{2}\right)^{T} \Sigma_{2}^{-1}\left(x-\mu_{2}\right) $$

must be a random variable. Given that $\mu_1, \mu_2, \Sigma_1$ and $\Sigma_2$ are constants, $x$ must be the (basic?) random variable. However, above, when we defined the Gaussian p.d.f., $x$ was (I guess) a dummy variable. So it's not clear to me what's going on here: first we have a p.d.f., and then the p.d.f. is a random variable. Can someone clarify this for me? What is actually being used to compute the KL divergence: p.d.f.s or random variables? I think they must be random variables, because the KL divergence is defined as an expectation, but then I don't understand the relationship between the Gaussian random variable $p(x)$ and the Gaussian p.d.f. $p(x)$. Is a Gaussian r.v. just defined as a Gaussian p.d.f. where $x$ is a r.v. from the sample space to another measurable space (which one?)?
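To make the computation concrete, here is a small numerical sketch, assuming NumPy, arbitrary hypothetical parameters (`mu1`, `Sigma1`, `mu2`, `Sigma2`), and that the expectation is taken with $X \sim P_1$; the helper names `bracket` and `kl_gauss` are mine, not the author's. It draws samples of $X$, plugs them into the expression inside the expectation, and averages. Since the $(2\pi)^{n/2}$ factors cancel, that expression equals $2 \log \big(p_1(x)/p_2(x)\big)$, so a factor of $1/2$ turns the average into the KL divergence, which is then compared with the standard closed form for two Gaussians.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical parameters for P1 = N(mu1, Sigma1) and P2 = N(mu2, Sigma2).
mu1 = np.array([0.0, 0.0])
Sigma1 = np.array([[1.0, 0.3], [0.3, 2.0]])
mu2 = np.array([1.0, -1.0])
Sigma2 = np.array([[1.5, 0.0], [0.0, 1.0]])

def bracket(x):
    """The expression inside the expectation, evaluated row-wise for x of shape (m, n)."""
    d1, d2 = x - mu1, x - mu2
    q1 = np.sum(d1 @ np.linalg.inv(Sigma1) * d1, axis=1)  # (x - mu1)^T Sigma1^{-1} (x - mu1)
    q2 = np.sum(d2 @ np.linalg.inv(Sigma2) * d2, axis=1)  # (x - mu2)^T Sigma2^{-1} (x - mu2)
    return (-np.log(np.linalg.det(Sigma1)) - q1
            + np.log(np.linalg.det(Sigma2)) + q2)

# Samples of X ~ P1; plugging them in gives samples of the real-valued random variable bracket(X).
X = rng.multivariate_normal(mu1, Sigma1, size=200_000)
# bracket(x) equals 2 * log(p1(x) / p2(x)), hence the factor 1/2.
kl_mc = 0.5 * bracket(X).mean()

def kl_gauss(m1, S1, m2, S2):
    """Standard closed-form KL(N(m1, S1) || N(m2, S2)), for comparison."""
    n = m1.shape[0]
    S2_inv = np.linalg.inv(S2)
    d = m2 - m1
    return 0.5 * (np.trace(S2_inv @ S1) + d @ S2_inv @ d - n
                  + np.log(np.linalg.det(S2) / np.linalg.det(S1)))

kl_exact = kl_gauss(mu1, Sigma1, mu2, Sigma2)
print(kl_mc, kl_exact)  # should be close; the Monte Carlo error shrinks as size grows
```

In this sketch the density formula itself is only ever evaluated at ordinary vectors; the randomness enters through the samples `X` that are fed into it.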