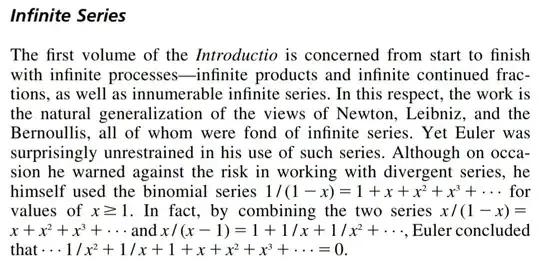

I am reading Boyer's *A History of Mathematics*. In the chapter about Euler, it states:

> Although on occasion he warned against the risk in working with divergent series, he himself used the binomial series $1/(1-x) = 1 + x + x^2 + x^3 + \cdots$ for values of $x \geq 1$.
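For reference, the finite version of this identity is the standard geometric-sum formula, which holds for every $x \neq 1$:

$$1 + x + x^2 + \cdots + x^{n-1} = \frac{1 - x^n}{1 - x}, \qquad x \neq 1.$$

As $n \to \infty$, the term $x^n$ tends to $0$ only when $|x| < 1$, so the infinite series converges to $1/(1-x)$ exactly in that range. At $x = 1$ the formula does not apply at all, and the sum of the first $n$ terms is simply $n$.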

But what about division by zero? Isn't the denominator $1 - x$ zero when $x = 1$?
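As a rough numerical check, here is a small sketch that compares partial sums of $1 + x + x^2 + \cdots$ with $1/(1-x)$ using exact rational arithmetic. The helper names `partial_sum` and `closed_form` are hypothetical, chosen only for illustration; `closed_form` returns `None` where the denominator $1 - x$ is zero.

```python
from fractions import Fraction


def partial_sum(x, n):
    """Return 1 + x + x^2 + ... + x^(n-1), the first n terms of the geometric series."""
    total = Fraction(0)
    term = Fraction(1)
    for _ in range(n):
        total += term
        term *= x  # next power of x
    return total


def closed_form(x):
    """Return 1/(1 - x), or None where the denominator 1 - x is zero."""
    x = Fraction(x)
    if x == 1:
        return None  # division by zero: 1/(1 - x) is undefined at x = 1
    return 1 / (1 - x)


# Compare a few partial sums with the closed form for x inside and outside |x| < 1.
for x in [Fraction(1, 2), 1, 2]:
    sums = [partial_sum(x, n) for n in (1, 5, 10, 20)]
    print(f"x = {x}: partial sums {[str(s) for s in sums]}, 1/(1-x) = {closed_form(x)}")
```

For $x = 1/2$ the partial sums approach $2 = 1/(1 - 1/2)$. For $x = 1$ the sum of $n$ terms is just $n$, while $1/(1-x)$ is undefined. For $x = 2$ the sum of $n$ terms is $2^n - 1$, which grows without bound even though the formula gives $1/(1-2) = -1$.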