I am working on the following exercise:

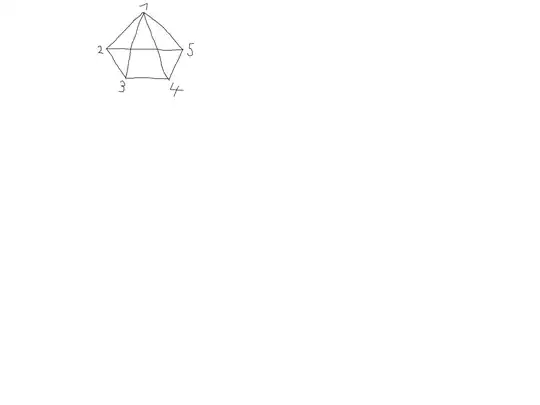

The figure above shows the transition graph of a Markov chain $(X_n)_{n \ge 0}$ in which every edge is bidirectional. At each vertex, the probability is distributed uniformly over the outgoing edges; e.g., the probability of moving from 1 to 3 is $1/4$, and from 2 to 5 it is $1/3$.

a) Find the stationary distribution.

b) Compute the entropy rate of the stationary Markov chain.

c) Compute the mutual information $I(X_n; X_{n-1})$, assuming the process is stationary.

My attempt:

a) First, I wrote down the transition matrix $P$ (rows and columns ordered $1, \dots, 5$):

$$ P = \begin{pmatrix} 0 & 1/4 & 1/4 & 1/4 & 1/4 \\ 1/3 & 0 & 1/3 & 0 & 1/3 \\ 1/3 & 1/3 & 0 & 1/3 & 0 \\ 1/3 & 0 & 1/3 & 0 & 1/3 \\ 1/3 & 1/3 & 0 & 1/3 & 0 \end{pmatrix}$$
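To double-check the matrix, here is a minimal sketch that rebuilds $P$ from adjacency lists with exact fractions. The adjacency lists (the hypothetical name `NEIGHBORS`) are an assumption read off the nonzero pattern of $P$, since the figure is not reproduced in text; `transition_matrix` is likewise an illustrative helper, not part of the exercise.

```python
from fractions import Fraction

# ASSUMPTION: adjacency lists inferred from the nonzero entries of P
# (vertex 1 is joined to every other vertex; 2-3-4-5-2 form a cycle).
NEIGHBORS = {
    1: [2, 3, 4, 5],
    2: [1, 3, 5],
    3: [1, 2, 4],
    4: [1, 3, 5],
    5: [1, 2, 4],
}


def transition_matrix(neighbors):
    """Row x puts probability 1/deg(x) on each neighbor of x (uniform outgoing edges)."""
    states = sorted(neighbors)
    return [
        [Fraction(1, len(neighbors[x])) if y in neighbors[x] else Fraction(0) for y in states]
        for x in states
    ]


# Every edge is bidirectional: y is a neighbor of x exactly when x is a neighbor of y.
assert all(x in NEIGHBORS[y] for x, adj in NEIGHBORS.items() for y in adj)

P = transition_matrix(NEIGHBORS)
assert all(sum(row) == 1 for row in P)  # each row is a probability distribution
for row in P:
    print(" ".join(str(p) for p in row))
```

The first printed row is `0 1/4 1/4 1/4 1/4`, matching the first row of $P$ above.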

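Then I computed the stationary distribution $\nu$ as the left eigenvector of $P$ for the eigenvalue $1$:

$$\begin{bmatrix} 0.5547 & 0.4160 & 0.4160 & 0.4160 & 0.4160 \end{bmatrix}.$$

This eigenvector has unit Euclidean length; rescaling it so that its entries sum to $1$, as a probability distribution must, gives

$$\nu = \begin{bmatrix} 1/4 & 3/16 & 3/16 & 3/16 & 3/16 \end{bmatrix}.$$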
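As an exact check, the sketch below does not solve the eigenvalue problem numerically. Instead it swaps in the standard shortcut for a random walk on an undirected graph, $\nu(x) = \deg(x) / \sum_z \deg(z)$, and then verifies $\nu P = \nu$ with exact fractions. The adjacency lists (`NEIGHBORS`) are an assumption inferred from $P$. Because the floating-point eigenvector above is normalized to unit Euclidean length rather than to entries summing to $1$, the sketch also rescales it for comparison.

```python
from fractions import Fraction

# ASSUMPTION: adjacency lists inferred from the nonzero pattern of P.
NEIGHBORS = {1: [2, 3, 4, 5], 2: [1, 3, 5], 3: [1, 2, 4], 4: [1, 3, 5], 5: [1, 2, 4]}
STATES = sorted(NEIGHBORS)

# P[x][y] = 1/deg(x) if y is a neighbor of x, else 0.
P = {x: {y: Fraction(int(y in NEIGHBORS[x]), len(NEIGHBORS[x])) for y in STATES} for x in STATES}

# Random walk on an undirected graph: nu(x) = deg(x) / (sum of all degrees).
total_degree = sum(len(adj) for adj in NEIGHBORS.values())
nu = {x: Fraction(len(NEIGHBORS[x]), total_degree) for x in STATES}

# Exact check: nu is a left eigenvector of P for eigenvalue 1 and sums to 1.
nu_P = {y: sum(nu[x] * P[x][y] for x in STATES) for y in STATES}
assert nu_P == nu and sum(nu.values()) == 1
print([str(nu[x]) for x in STATES])  # ['1/4', '3/16', '3/16', '3/16', '3/16']

# The unit-length eigenvector from the question, rescaled so its entries sum to 1.
raw = [0.5547, 0.4160, 0.4160, 0.4160, 0.4160]
normalized = [v / sum(raw) for v in raw]
print([round(v, 4) for v in normalized])
```

The degree formula applies here because every edge is bidirectional and each vertex spreads its probability uniformly over its neighbors; for a general transition matrix one would solve $\nu P = \nu$ directly.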

b) For the entropy rate I would just use the formula

$$-\sum_{x,y \in \mathcal{X}} \nu(x) \ p(y \mid x) \ \log_2(p(y \mid x)).$$
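Plugging numbers into this formula can be sketched as follows, using the matrix $P$ and the normalized $\nu = (1/4, 3/16, 3/16, 3/16, 3/16)$; the function name `entropy_rate` is illustrative only. Terms with $P(x,y) = 0$ are skipped, following the convention $0 \log 0 = 0$.

```python
from math import log2

# Transition matrix P from the question (rows/columns ordered 1..5).
P = [
    [0, 1/4, 1/4, 1/4, 1/4],
    [1/3, 0, 1/3, 0, 1/3],
    [1/3, 1/3, 0, 1/3, 0],
    [1/3, 0, 1/3, 0, 1/3],
    [1/3, 1/3, 0, 1/3, 0],
]
# Stationary distribution, normalized so its entries sum to 1.
nu = [1/4, 3/16, 3/16, 3/16, 3/16]


def entropy_rate(P, nu):
    """-sum_{x,y} nu(x) P(x,y) log2 P(x,y), skipping zero entries (0 log 0 = 0)."""
    return -sum(
        nu[x] * p * log2(p)
        for x, row in enumerate(P)
        for p in row
        if p > 0
    )


h = entropy_rate(P, nu)
print(round(h, 4))  # 1.6887
```

Since every row of $P$ is uniform over $\deg(x)$ entries, the formula collapses to $\sum_x \nu(x) \log_2 \deg(x) = \tfrac14 \log_2 4 + \tfrac34 \log_2 3 = \tfrac12 + \tfrac34 \log_2 3 \approx 1.6887$ bits, which the code reproduces.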

c) I do not know what to do here. How does stationarity help in computing the mutual information? Could you explain this point to me?