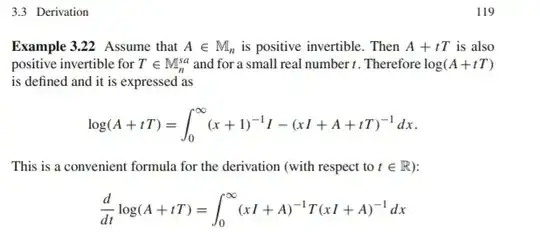

I'm learning about matrices and matrix calculus. In the chapter "Matrix Monotone Functions and Convexity" of Introduction to Matrix Analysis and Applications, it is written that integral representations of matrix functions are often helpful for calculating derivatives, and the example shown in the image above is given.

I understand the integral representation, which follows from the spectral theorem, and how the derivative is calculated, but I don't understand why we can move $\frac{d}{dt}$ inside the integral. How can this be proved formally? Does it somehow follow from the Taylor expansion of the inverse?

EDIT

My idea is to put $f(t) = \log{(A + tT)}$ and show that

$$\left\| \frac{f(h) - f(0)}{h} - \int_0^\infty (xI+A)^{-1}T(xI+A)^{-1}\,dx\right\| \to 0 \quad \text{as } h \to 0.$$

By the first formula, the LHS is equal to

$$\left\| \int_0^\infty \left[\frac{(xI+A+hT)^{-1} - (xI+A)^{-1}}{h} + (xI+A)^{-1}T(xI+A)^{-1}\right] dx\right\| = \left\| \int_0^\infty \sum_{n=2}^\infty (-h)^{n-1} (xI+A)^{-\frac{1}{2}}\left((xI+A)^{-\frac{1}{2}}T(xI+A)^{-\frac{1}{2}}\right)^n(xI+A)^{-\frac{1}{2}}\,dx\right\| \le |h| \int_0^\infty \left\|T\right\|^2 \left\|(xI+A)^{-1}\right\|^3\sum_{n=0}^\infty \left(|h|\left\|T\right\|\left\|(xI+A)^{-1}\right\|\right)^n dx$$

where I used the Taylor (Neumann series) expansion of the inverse and bounded each term of the series by operator norms (assuming they are finite...). Now if $|h|$ is small enough, the series converges uniformly in $x$ and the integral is finite, so the whole bound goes to $0$ with $h$. Is this more or less fine?
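
If it helps, the last step can be made explicit. This is only a sketch under extra assumptions that are mine and not from the book: $A$ is positive definite with smallest eigenvalue $\lambda > 0$ (the symbol $\lambda$ is my notation), so that $\left\|(xI+A)^{-1}\right\| = \frac{1}{x+\lambda}$ for $x \ge 0$, and $|h|\left\|T\right\| \le \lambda/2$. Then the ratio of the geometric series is at most $\frac{1}{2}$ for every $x \ge 0$, so the series is at most $2$, and the bound can be evaluated directly:

$$|h| \int_0^\infty \frac{\left\|T\right\|^2}{(x+\lambda)^3}\sum_{n=0}^\infty \left(\frac{|h|\left\|T\right\|}{x+\lambda}\right)^n dx \le 2|h|\left\|T\right\|^2 \int_0^\infty \frac{dx}{(x+\lambda)^3} = \frac{|h|\left\|T\right\|^2}{\lambda^2} \to 0 \quad \text{as } h \to 0.$$

If this is right, the difference quotient converges to the integral at rate $O(|h|)$, which is exactly the statement that $\frac{d}{dt}$ can be moved inside the integral at $t = 0$.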