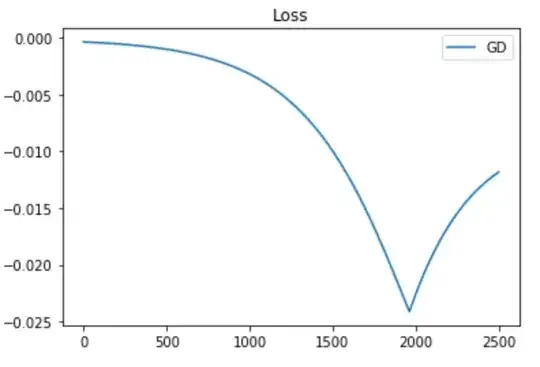

I'm running gradient descent on a continuous function and I observe this pattern:

What can cause such a sudden kink, and why does the loss keep increasing after it? I understand the issues caused by a learning rate that is too large, but that does not seem to be the case here.

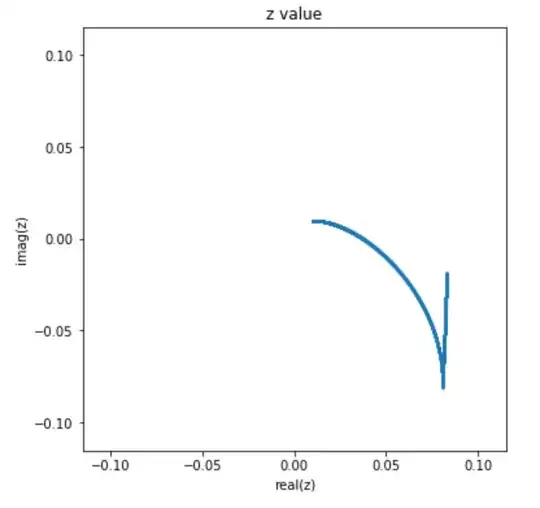

The parameter also turns abruptly (it's a complex-valued parameter, which can equivalently be viewed as a pair of real parameters):

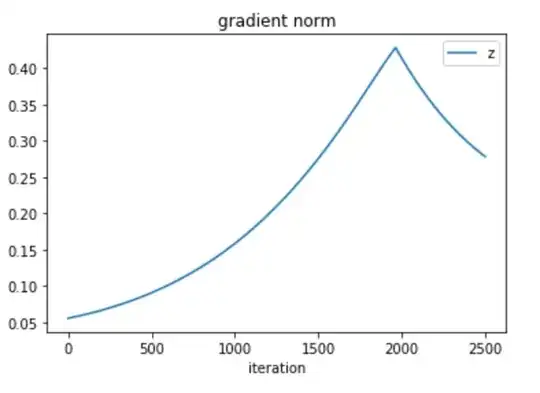
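To make the setup concrete, here is a minimal sketch of how the quantities plotted in this question (loss, parameter trajectory, gradient norm) could be recorded during gradient descent on a single complex parameter. The quadratic `loss`, its `target`, the learning rate, and the `run_gd` helper are all assumptions made for illustration, not the actual objective. The gradient is packed as `dL/dx + i*dL/dy`, which is the "pair of real parameters" view.

```python
import numpy as np

def loss(z, target=1.0 + 2.0j):
    # Hypothetical stand-in loss; the real objective is not given in the question.
    return abs(z - target) ** 2

def grad(z, target=1.0 + 2.0j):
    # dL/dx + i*dL/dy for L = |z - target|^2, packed into one complex number.
    return 2.0 * (z - target)

def run_gd(z0, lr=0.1, steps=50):
    # Plain gradient descent, recording the diagnostics before each update.
    z = complex(z0)
    history = {"loss": [], "z": [], "grad_norm": []}
    for _ in range(steps):
        g = grad(z)
        history["loss"].append(loss(z))
        history["z"].append(z)
        history["grad_norm"].append(abs(g))
        z = z - lr * g
    return history

history = run_gd(0.0 + 0.0j)

# Angle (in radians) between consecutive update steps; a sharp turn of the
# parameter in the complex plane shows up as a spike in this array.
steps_taken = np.diff(np.array(history["z"]))
turn = np.abs(np.angle(steps_taken[1:] / steps_taken[:-1]))
```

On this toy quadratic the loss decreases monotonically and `turn` stays near zero, so it only serves as a baseline: comparing the same three traces on the real objective shows where the behaviour departs from the well-behaved case.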

For completeness, here's the norm of the gradient (there's no large jump, as there would be at a cliff; see fig. 8.3, p. 285):