Does anyone know how $dx\,dy$ becomes $r\,dr\,d\theta$ in the example from my textbook? No matter how I go about it, I always end up with sines and cosines in the expression, and I'm not sure how they disappear.

-

HINT: "r" is the Jacobian determinant... – JG123 Nov 25 '19 at 22:31

-

It is nonsense to write $dx\,dy=(-r\sin(\theta)\,d\theta+\cos(\theta)\,dr)(r\cos(\theta)\,d\theta+\sin(\theta)\,dr)$. – Mark Viola Nov 25 '19 at 22:38

-

@MarkViola It's fine if you put in explicit wedge products. – J.G. Nov 25 '19 at 22:43

-

@J.G. Are wedge products just the generalized name for ijk unit vectors? That had crossed my mind as I was trying to decide whether you should take the derivative of dx and dy with respect to theta or r, because it did seem kind of weird to just arbitrarily pick one or the other (or a mix) when calculating dx and dy. – DKNguyen Nov 25 '19 at 23:02

-

@DKNguyen The wedge product is an operation defined in the exterior algebra. – JG123 Nov 25 '19 at 23:21

-

@DKNguyen You can apply wedge products on vectors directly, which yields a nice way of describing the "volumes" of higher-dimensional parallelepipeds. You can also have wedge products between "one-forms", such as $dx$ and $dy$, which send vectors in a vector space to a scalar (which is really in a field). – JG123 Nov 25 '19 at 23:25

-

@J.G. Given the nature of the original post, I was trying to address the second statement, in which the OP says he/she arrives at expressions that involve trigonometric functions. I suspect that the OP multiplies the differentials $dx$ and $dy$. – Mark Viola Nov 26 '19 at 04:57

-

@MarkViola yes. – DKNguyen Nov 26 '19 at 14:17

-

@DKNguyen It is incorrect to simply multiply the differentials. – Mark Viola Nov 26 '19 at 16:40

-

@MarkViola So $dx\,dy$ in a substitution does not work (at least on the surface level) the same way that it does with a single integral? It's not just the single-integral substitution procedure done twice? – DKNguyen Nov 26 '19 at 17:40

-

@DKNguyen Indeed. We are making a transformation from $(x,y)$ to $(r,\theta)$ – Mark Viola Nov 26 '19 at 18:13

-

@MarkViola I'm kind of wondering why this was never mentioned in school. I made it up to complex analysis and solved multiple integrals along the way without this being mentioned, unless I have just completely forgotten it. I remember the Jacobian being mentioned, but never in a calculus class. – DKNguyen Nov 26 '19 at 18:17

6 Answers

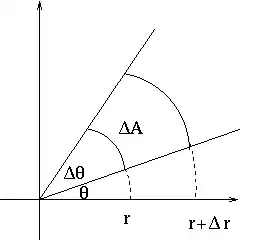

The answers using the Jacobian are (of course) correct. You can get some intuition from this picture:

$\Delta A$ is (approximately) a rectangle with sides $r \Delta \theta$ and $\Delta r$, so its area is approximately $r\,\Delta r\,\Delta\theta$: for fixed $\Delta r$ and $\Delta\theta$, it grows in proportion to $r$.

Picture from http://citadel.sjfc.edu/faculty/kgreen/vector/block3/jacob/node4.html
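A quick numerical check of this picture, as a minimal Python sketch (the thread itself has no code; the function names below are illustrative only). The exact area of the polar patch between radii $r$ and $r+\Delta r$ and angles $\theta$ and $\theta+\Delta\theta$ is $\tfrac12\bigl((r+\Delta r)^2-r^2\bigr)\Delta\theta=(r+\tfrac12\Delta r)\,\Delta r\,\Delta\theta$, so its ratio to $r\,\Delta r\,\Delta\theta$ is $1+\Delta r/(2r)$, which tends to 1 as the patch shrinks.

```python
def sector_area(r, dr, dtheta):
    """Exact area of the annular sector r <= rho <= r + dr spanning angle dtheta."""
    return 0.5 * ((r + dr) ** 2 - r ** 2) * dtheta


def polar_area_element(r, dr, dtheta):
    """The approximation r * dr * dtheta used as the polar area element."""
    return r * dr * dtheta


r = 2.0
for h in (0.1, 0.01, 0.001):
    ratio = sector_area(r, h, h) / polar_area_element(r, h, h)
    # ratio equals 1 + h / (2 r), so it tends to 1 as h shrinks
    print(f"h = {h:<6} exact / approx = {ratio:.6f}")
```

The leftover $\Delta r/(2r)$ is a higher-order correction that vanishes in the limit, which is why only the $r\,dr\,d\theta$ term survives in the integral.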


-

So what you're saying is that the infinitesimal area $dx\,dy$, when converted to polar coordinates, ends up being a rectangle with sides $dr$ and $r\sin(d\theta) \approx r\,d\theta$ by the small-angle approximation. That explains it geometrically, but it doesn't really explain what happens when you want to do a non-geometric substitution (i.e., not a change of coordinates so much as replacing $dx$, $dy$, or both with something just so you can integrate). I guess that's where the Jacobian comes in? Is it the generalized form of what's going on? – DKNguyen Nov 26 '19 at 17:47

-

"Just so you can integrate it" implies figuring out the area element in the new coordinate system, in terms of $dx$ and $dy$. It doesn't make sense to think of changing those infinitesimals separately. The Jacobian tells you the whole story. (I don't think you need the $\sin$ and the small angle approximation. The arclength is $rd\theta $.) – Ethan Bolker Nov 26 '19 at 17:57

-

Yes, that's what you are technically doing, but it doesn't seem like you can rely on geometric intuition if you are going through a table of integrals trying to substitute expressions in to get something that you can work with. – DKNguyen Nov 26 '19 at 17:58

-

The single-variable integrals you look up in tables are often worked out with substitutions. Those are just one-dimensional changes of coordinates; the Jacobian is just the derivative that the chain rule provides. I don't know of any tables of multivariable integrals. – Ethan Bolker Nov 26 '19 at 18:03

-

Oh yeah. I guess you don't need the small-angle approximation, since the arc length is just $$\frac{\theta}{2\pi} \times 2\pi r = r\theta.$$ – DKNguyen Nov 26 '19 at 18:40

We are using polar coordinates, that is,

- $x=r \cos \theta$

- $y=r \sin \theta$

and by the Jacobian determinant we have that

$$dxdy=\begin{vmatrix}\cos\theta&-r\sin\theta\\\sin \theta&r\cos\theta\end{vmatrix}drd\theta=rdrd\theta$$
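To see numerically that the sines and cosines cancel, here is a minimal Python sketch that approximates the four partial derivatives of $x=r\cos\theta$, $y=r\sin\theta$ by central differences and evaluates the $2\times 2$ determinant at a few sample points; the result should agree with $r$ up to rounding. The helper names and the step size `h` are illustrative assumptions, not part of the answer.

```python
import math


def polar_to_cartesian(r, theta):
    """T(r, theta) = (x, y) with x = r cos(theta), y = r sin(theta)."""
    return r * math.cos(theta), r * math.sin(theta)


def jacobian_det(T, u, v, h=1e-6):
    """Approximate det of the Jacobian of T at (u, v) using central differences."""
    xu_plus, yu_plus = T(u + h, v)
    xu_minus, yu_minus = T(u - h, v)
    xv_plus, yv_plus = T(u, v + h)
    xv_minus, yv_minus = T(u, v - h)
    x_u = (xu_plus - xu_minus) / (2 * h)
    y_u = (yu_plus - yu_minus) / (2 * h)
    x_v = (xv_plus - xv_minus) / (2 * h)
    y_v = (yv_plus - yv_minus) / (2 * h)
    # | x_u  x_v |
    # | y_u  y_v |  ->  x_u * y_v - x_v * y_u
    return x_u * y_v - x_v * y_u


for r, theta in [(0.5, 0.3), (2.0, 1.2), (3.0, 4.0)]:
    # each printed determinant should match r, independent of theta
    print(r, theta, jacobian_det(polar_to_cartesian, r, theta))
```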

Refer also to the related


-

I mean...I see how the determinant is the case, but how is the matrix suddenly just being pulled into this integral in the first place? – DKNguyen Nov 25 '19 at 22:35

-

@DKNguyen It represents the Jacobian determinant, that is, $$\cos\theta\cdot r\cos\theta-(-r\sin\theta)\sin\theta=r\cos^2 \theta+r\sin^2 \theta= r(\cos^2 \theta+\sin^2 \theta)=r$$ – user Nov 25 '19 at 22:37

-

@DKNguyen It is a standard theorem from vector calculus that when changing coordinates, say from $(x,y)$ to $(u,v)$, one must tack on the absolute value of the Jacobian determinant, $|\det(DT)|$, in front of the differentials $du\,dv$, where $T$ is the map that takes $(u,v)$ to $(x,y)$. Of course, this result holds beyond the $\mathbb{R}^2$ case. – JG123 Nov 25 '19 at 22:42

-

@DKNguyen Are you asking for the geometric reason why the change of variables formula works? – JG123 Nov 25 '19 at 22:50

-

@JG123 Hmmm. Okay. So what I'm hearing is that when doing integration by substitution for single integrals, there is some geometric interpretation of the differential that is unaddressed (or rather does not need to be explicitly addressed). Whereas in multiple integrals it does need to be addressed and whatever it is cannot be derived with just "what's in front of you"? – DKNguyen Nov 25 '19 at 22:51

-

@JG123 No, I'm asking why you have to take a detour through matrices and the Jacobian for that. That entire section of the textbook is about why the change of variables works for integration by substitution, but then in that example, that little step just came out of left field. And from the answers, it seems like it's even more out of left field than I thought. – DKNguyen Nov 25 '19 at 22:51

-

Either that, or my attempt to write $dx\,dy=(-r\sin\theta\,d\theta+\cos\theta\,dr)(r\cos\theta\,d\theta+\sin\theta\,dr)$, as Mark Viola mentioned in the comments on the question, has something fundamentally unsound in it. I was just trying to follow the single-variable integration-by-substitution steps, applied twice. Does that hold any meaning here? – DKNguyen Nov 25 '19 at 22:53

-

@DKNguyen For intuition: under the change of coordinates, the infinitesimal area $dA=dx\,dy$ is modified by a factor that we can evaluate with the Jacobian determinant, assuming that the transformation is locally linear. – user Nov 25 '19 at 22:55

-

For the single-variable case (like $u$-substitution), you are still taking the determinant of a Jacobian matrix. However, the Jacobian in this case is a $1\times 1$ matrix whose entry is $\frac{dx}{du}$, so the determinant is just $\frac{dx}{du}$. – JG123 Nov 25 '19 at 22:57

-

@JG123 Hmm. That was something I was unaware of. It seems I have stepped on a landmine. I'll have to go to my earlier calculus book and see if it mentions that at all. – DKNguyen Nov 25 '19 at 22:58

-

@DKNguyen I would be surprised if single-variable calculus books mention it. Adding on to user's answer, we approximate the transformation $T$ by its best linear approximation, say $H$. The idea is that, in general, $T$ sends infinitesimal rectangles in $(u,v)$ space to irregular/curved shapes in $(x,y)$ space that are closely approximated by the infinitesimal parallelograms to which $H$ sends those same rectangles. – JG123 Nov 25 '19 at 23:02

-

@DKNguyen The area of these parallelograms is $|\det(DT)|\,du\,dv$, where $du\,dv$ is the area of the infinitesimal rectangles in $(u,v)$ space. Therefore, $|\det(DT)|$ is the "area distortion" factor between the parallelogram in $(x,y)$ space and the rectangle $du\,dv$ in $(u,v)$ space. Similarly, $\frac{dx}{du}$ is the "length distortion" factor between the infinitesimal lengths $du$ and $dx$. – JG123 Nov 25 '19 at 23:03

-

@JG123 Well, the earlier calculus book I had in mind is a 2D and 3D calculus book. I know my single variable calculus book doesn't have it. – DKNguyen Nov 25 '19 at 23:04

-

@JG123 Well, the Jacobian is mentioned later in the same book that the example was from. So I guess we were just meant not to think too hard about it or notice it, since the example in the question appeared in the first chapter of the book. – DKNguyen Nov 25 '19 at 23:12
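JG123's "area distortion" description can be checked directly. The following Python sketch assumes $T$ is the polar map $(u,v)=(r,\theta)\mapsto(x,y)$; `shoelace` and `image_area` are hypothetical helper names. It sends the corners of a small $du\times dv$ rectangle through $T$, measures the resulting quadrilateral with the shoelace formula, and divides by $du\,dv$; the quotient should approach $|\det(DT)|=r$.

```python
import math


def T(u, v):
    """Polar map: (u, v) = (r, theta) -> (x, y)."""
    return u * math.cos(v), u * math.sin(v)


def shoelace(points):
    """Area of a simple polygon given its vertices in order."""
    total = 0.0
    for (x1, y1), (x2, y2) in zip(points, points[1:] + points[:1]):
        total += x1 * y2 - x2 * y1
    return abs(total) / 2


def image_area(u, v, du, dv):
    """Area of the quadrilateral whose corners are the images of the rectangle's corners."""
    corners = [(u, v), (u + du, v), (u + du, v + dv), (u, v + dv)]
    return shoelace([T(a, b) for a, b in corners])


u, v = 1.5, 0.8
for s in (0.1, 0.01, 0.001):
    distortion = image_area(u, v, s, s) / (s * s)
    print(f"side {s}: image area / (du dv) = {distortion:.6f}")  # tends to r = 1.5
```

For this map the quotient works out to $(u+\tfrac12 du)\,\frac{\sin dv}{dv}$, which approaches $u=r=1.5$ as the rectangle shrinks.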

Perhaps seeing the two compared will help.

$\underline{\bf\text{1D Case:}}$

$$ \int_{a}^{b}f(x)dx=\int_{\alpha}^{\beta}f(x(u))\frac{dx}{du}du $$
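As a concrete one-dimensional instance, take $f(x)=\sqrt{x}$ on $[0,4]$ with the substitution $x(u)=u^2$, so $u$ runs over $[0,2]$ and $dx/du=2u$ plays the role of the $1\times 1$ Jacobian; both sides equal $16/3$. A minimal Python sketch using a midpoint rule (the integrand, substitution, and quadrature rule are illustrative choices):

```python
import math


def midpoint(g, a, b, n=20000):
    """Composite midpoint rule for the integral of g over [a, b]."""
    h = (b - a) / n
    return h * sum(g(a + (i + 0.5) * h) for i in range(n))


def f(x):
    return math.sqrt(x)  # integrand in the original variable x


def x_of_u(u):
    return u * u  # substitution x = u^2, so u runs over [0, 2]


def dx_du(u):
    return 2 * u  # the 1x1 Jacobian dx/du


direct = midpoint(f, 0.0, 4.0)
substituted = midpoint(lambda u: f(x_of_u(u)) * dx_du(u), 0.0, 2.0)
forgot_jacobian = midpoint(lambda u: f(x_of_u(u)), 0.0, 2.0)

print(direct, substituted, 16 / 3)  # first two agree with 16/3 to several digits
print(forgot_jacobian)              # about 2, not 16/3
```

Dropping the factor $dx/du$ gives $\int_0^2 u\,du=2$, the one-dimensional analogue of forgetting the $r$ in $r\,dr\,d\theta$.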

$\underline{\bf\text{2D Case:}}$

$$ \iint_{D}f(x,y)dxdy=\iint_{D’}f(x(u,v),y(u,v))\Bigl\vert\frac{\partial{(x,y)}}{\partial{(u,v)}}\Bigr\vert{}dudv $$
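A numerical sketch of the 2D formula in Python, with illustrative choices that are not from the answer: the Gaussian $f(x,y)=e^{-(x^2+y^2)}$, whose integral over the plane is $\pi$, truncated to the square $[-6,6]^2$ or the disk $r\le 6$ (the neglected tail is of order $e^{-36}$). With $(u,v)=(r,\theta)$ the Jacobian factor is $r$; summing with it reproduces $\pi$, while summing without it gives $2\pi\cdot\tfrac{\sqrt\pi}{2}=\pi^{3/2}$.

```python
import math


def f(x, y):
    return math.exp(-(x * x + y * y))


def cartesian(L=6.0, n=400):
    """Midpoint sum of f over the square [-L, L]^2 with area element dx dy."""
    h = 2 * L / n
    pts = [-L + (i + 0.5) * h for i in range(n)]
    return sum(f(x, y) for x in pts for y in pts) * h * h


def polar(R=6.0, n_r=2000, n_theta=64, with_jacobian=True):
    """Midpoint sum over the disk r <= R with element r dr dtheta (or dr dtheta)."""
    dr = R / n_r
    dtheta = 2 * math.pi / n_theta
    total = 0.0
    for i in range(n_r):
        r = (i + 0.5) * dr
        weight = r if with_jacobian else 1.0  # the |det J| = r factor
        for j in range(n_theta):
            theta = (j + 0.5) * dtheta
            total += f(r * math.cos(theta), r * math.sin(theta)) * weight
    return total * dr * dtheta


print(cartesian(), polar(), math.pi)                # both close to pi
print(polar(with_jacobian=False), math.pi ** 1.5)   # wrong without the factor r
```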

As for where the actual matrix comes from:

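When we transform our coordinates from Cartesian to polar, we also transform the differential elements. The small displacement vectors produced by changing $r$ by $dr$ and $\theta$ by $d\theta$ are, in general, neither perpendicular nor of lengths $dr$ and $d\theta$ (in polar coordinates they happen to be perpendicular, but the $\theta$ one has length $r\,d\theta$); therefore, we are no longer guaranteed that $da=dr\,d\theta$. To find our true $da$, let's consider the following equations: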

$$ \begin{align} dx&=\frac{\partial{x}}{\partial{r}}dr+\frac{\partial{x}}{\partial{\theta}}d\theta\\ dy&=\frac{\partial{y}}{\partial{r}}dr+\frac{\partial{y}}{\partial{\theta}}d\theta \end{align} $$

or

$$ \begin{pmatrix} dx\\ dy \end{pmatrix} = \bbox[yellow,5px] { \begin{pmatrix} \partial{x}/\partial{r} & \partial{x}/\partial\theta\\ \partial{y}/\partial{r} & \partial{y}/\partial\theta \end{pmatrix} } \begin{pmatrix} dr\\ d\theta \end{pmatrix}\\ \text{The highlighted matrix ends up being the Jacobian Matrix.} $$

This shows us the form that the basis vectors take under the transformation. Previously, we had $\overrightarrow{dx'}=dx\,\hat{e}_x= \begin{pmatrix} 1\\ 0 \end{pmatrix} dx$ and $\overrightarrow{dy'}=dy\,\hat{e}_y= \begin{pmatrix} 0\\ 1 \end{pmatrix} dy$, with $da=\Vert{\overrightarrow{dx'}\times\overrightarrow{dy'}}\Vert=dxdy$ (treating these as vectors in the $xy$-plane of $\mathbb{R}^3$ so that the cross product makes sense). However, now we have $\overrightarrow{dx}= \begin{pmatrix} \partial{x}/\partial{r}\\ \partial{y}/\partial{r} \end{pmatrix} dr$ and $\overrightarrow{dy}= \begin{pmatrix} \partial{x}/\partial\theta\\ \partial{y}/\partial\theta \end{pmatrix} d\theta$, with $$da=\Vert{\overrightarrow{dx}\times\overrightarrow{dy}}\Vert=\bbox[yellow,5px]{\Bigl(\frac{\partial{x}}{\partial{r}}\frac{\partial{y}}{\partial{\theta}}-\frac{\partial{x}}{\partial{\theta}}\frac{\partial{y}}{\partial{r}}\Bigr)}drd\theta\text{.}\\ \frac{\partial{x}}{\partial{r}}=\cos\theta\,\text{,}\,\frac{\partial{y}}{\partial{\theta}}=r\cos\theta\,\text{,}\,\frac{\partial{x}}{\partial{\theta}}=-r\sin\theta\,\text{,}\,\frac{\partial{y}}{\partial{r}}=\sin\theta\,\text{, so the highlighted portion equals }r\text{.}\\ \therefore{} da=\Vert{\overrightarrow{dx}\times\overrightarrow{dy}}\Vert=rdrd\theta $$

Also notice that this highlighted quantity equals the determinant of the previously highlighted matrix. That matrix is the Jacobian matrix, and its determinant, the Jacobian determinant, tells us how the coordinate transformation scales the differential area element.

$$ \frac{\partial{(x,y)}}{\partial{(u,v)}}= \begin{vmatrix} \partial{x}/\partial{u} & \partial{x}/\partial{v}\\ \partial{y}/\partial{u} & \partial{y}/\partial{v} \end{vmatrix} =\frac{\partial{x}}{\partial{u}}\frac{\partial{y}}{\partial{v}}-\frac{\partial{x}}{\partial{v}}\frac{\partial{y}}{\partial{u}} $$
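The matrix equation for $(dx,dy)$ in terms of $(dr,d\theta)$ says that, to first order, a small step $(\Delta r,\Delta\theta)$ moves the point $(x,y)$ by the Jacobian matrix times that step. A minimal Python sketch compares the exact displacement with this linear prediction at an arbitrary point $(r,\theta)=(2,0.6)$; the names and values are illustrative:

```python
import math


def T(r, theta):
    """Polar map (r, theta) -> (x, y)."""
    return r * math.cos(theta), r * math.sin(theta)


def jacobian(r, theta):
    """Rows are (dx/dr, dx/dtheta) and (dy/dr, dy/dtheta)."""
    return [[math.cos(theta), -r * math.sin(theta)],
            [math.sin(theta), r * math.cos(theta)]]


def linearization_error(r, theta, dr, dtheta):
    """Distance between the exact displacement and the Jacobian times (dr, dtheta)."""
    x0, y0 = T(r, theta)
    x1, y1 = T(r + dr, theta + dtheta)
    J = jacobian(r, theta)
    lin_x = J[0][0] * dr + J[0][1] * dtheta
    lin_y = J[1][0] * dr + J[1][1] * dtheta
    return math.hypot((x1 - x0) - lin_x, (y1 - y0) - lin_y)


for step in (1e-1, 1e-2, 1e-3):
    err = linearization_error(2.0, 0.6, step, step)
    # the error shrinks roughly like step**2, i.e. faster than the step itself
    print(f"step {step}: |exact - linear| = {err:.2e}")
```

Because the mismatch is second order in the step, it becomes negligible relative to the first-order displacement as the step shrinks, which is the sense in which the Jacobian matrix alone governs $da$.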

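Taylor series mandate $dx^2=0$, from which you can show $dxdy=-dydx$, etc. (Look up the wedge product on differential forms.) Thus, using $drdr=d\theta d\theta=0$ and $d\theta dr=-drd\theta$,$$\begin {align}dxdy&=(\cos\theta dr-r\sin\theta d\theta)(\sin\theta dr+r\cos\theta d\theta)\\&=\cos\theta\sin\theta\,drdr+r\cos^2\theta\,drd\theta-r\sin^2\theta\,d\theta dr-r^2\sin\theta\cos\theta\,d\theta d\theta\\&=r(\cos^2\theta+\sin^2\theta)drd\theta.\end{align}$$In particular, the $drd\theta$ coefficient is a determinant called the Jacobian.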
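The two rules $dr\wedge dr=0$ and $d\theta\wedge dr=-dr\wedge d\theta$ are enough to carry out this expansion mechanically. Below is a minimal Python sketch, not a library API: a form at a fixed point $(r,\theta)$ is stored as a dict from a tuple of basis names to a coefficient, and the ordering in `BASIS` is an assumption chosen for the example.

```python
import math
from itertools import product

BASIS = ["dr", "dtheta"]  # assumed canonical order: dr comes before dtheta


def wedge(alpha, beta):
    """Wedge product of two forms evaluated at one point.

    A form is a dict mapping a tuple of basis names, e.g. ("dr", "dtheta"),
    to its numeric coefficient.
    """
    result = {}
    for (ka, ca), (kb, cb) in product(alpha.items(), beta.items()):
        idx = [BASIS.index(name) for name in ka + kb]
        if len(set(idx)) < len(idx):
            continue  # a repeated differential vanishes, e.g. dr^dr = 0
        sign = 1
        # bubble sort into canonical order; each swap flips the sign (dtheta^dr = -dr^dtheta)
        for i in range(len(idx)):
            for j in range(len(idx) - 1 - i):
                if idx[j] > idx[j + 1]:
                    idx[j], idx[j + 1] = idx[j + 1], idx[j]
                    sign = -sign
        key = tuple(BASIS[k] for k in idx)
        result[key] = result.get(key, 0.0) + sign * ca * cb
    return result


def polar_dx_wedge_dy(r, theta):
    """dx^dy with dx = cos(t) dr - r sin(t) dtheta and dy = sin(t) dr + r cos(t) dtheta."""
    dx = {("dr",): math.cos(theta), ("dtheta",): -r * math.sin(theta)}
    dy = {("dr",): math.sin(theta), ("dtheta",): r * math.cos(theta)}
    return wedge(dx, dy)


print(polar_dx_wedge_dy(2.0, 0.7))
```

For $(r,\theta)=(2,0.7)$ the only surviving entry is the $dr\wedge d\theta$ coefficient, which comes out as $2$ up to rounding, i.e. $r$.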

Note, however, the calculation $ds^2=dx^2+dy^2=dr^2+r^2d\theta^2$ takes infinitesimals as commuting, because this time there's no nilpotency axiom, so we're not working with the wedge product on differential forms.
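By contrast, the commuting calculation of $ds^2$ amounts to forming $J^{\mathsf T}J$, where $J$ is the Jacobian matrix of the polar map: its entries are the coefficients in $ds^2=g_{rr}\,dr^2+2g_{r\theta}\,dr\,d\theta+g_{\theta\theta}\,d\theta^2$. A minimal Python sketch with plain lists (the function name is illustrative):

```python
import math


def polar_metric(r, theta):
    """Return g = J^T J for x = r cos(theta), y = r sin(theta).

    ds^2 = g[0][0] dr^2 + 2 g[0][1] dr dtheta + g[1][1] dtheta^2
    """
    J = [[math.cos(theta), -r * math.sin(theta)],
         [math.sin(theta), r * math.cos(theta)]]
    # (J^T J)[i][j] = sum over k of J[k][i] * J[k][j]
    return [[sum(J[k][i] * J[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


g = polar_metric(3.0, 1.1)
print(g)  # approximately [[1, 0], [0, 9]], i.e. ds^2 = dr^2 + r^2 dtheta^2
```

Since $\det(J^{\mathsf T}J)=(\det J)^2$, the square root of the determinant of this matrix is again $r$, tying the length element back to the area element.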


(I don't have enough rep to leave a comment, sorry)

To my knowledge, the Jacobian matrix is the best linear approximation (the differential) of the map that sends polar coordinates $(r,\theta)$ to Cartesian points $(x,y)$. When you find its determinant, you are finding how that linear map scales area (or volume, in higher dimensions).

The differential element has area

$dA= dx \cdot dy$ in Cartesian system for a rectangle and

$dA= r d\theta \cdot dr$ in Polar coordinates for the trapezium.

Evaluating the determinant of the Jacobian matrix $\begin{pmatrix}x_u & x_v\\ y_u & y_v\end{pmatrix}$, with $(u,v)=(r,\theta)$, leaves a multiplier of just $r$ between the $(u,v)$ and $(x,y)$ systems.
