Let us define the two functions

$$

\DeclareMathOperator{\fl}{fl}

f(x) = \frac{1-\cos(x)}{x^2}

\quad \text{and} \quad

g(y) = \frac{1 - y}{\arccos(y)^2}

\,.

$$

We have that

$$

f(x) = g(\cos(x))

\,.

$$

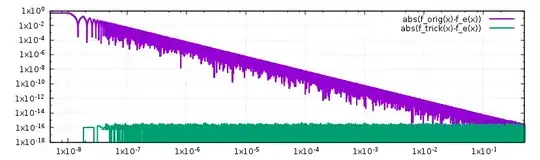

When you evaluate $f(x)$ for $x = 10^{-7}$, catastrophic cancellation occurs for the following reason. The numerator is evaluated by computing $1 - \fl(\cos(x))$ (where $\fl$ denotes rounding to a floating point number). Since $\cos(10^{-7}) \approx 1$, many leading digits cancel in the subtraction, and the error from the digits that were lost when rounding the value of $\cos(x)$ becomes significant relative to the small result. Thus, the overall result has low accuracy.

At first sight, it looks like the same is true if we first compute $y = \fl(\cos(x))$ and then compute $g(y)$. We are again subtracting two very similar numbers when evaluating $g$, hence cancellation should occur. This is, however, not the case.

First of all, note that $g$ does not vary a lot near $y = 1$, because

$$

\lim_{y \to 1} g'(y) = \tfrac{1}{12}

\,.

$$

Thus, if we perturb the input, the output will only change slightly, i.e., if $\bar{y} \approx y$ then $g(\bar{y}) \approx g(y)$.
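As a sketch of where the value $\tfrac{1}{12}$ comes from (using only the Taylor series of the cosine and the substitution $y = \cos(x)$ from above), write

$$
1 - y = \frac{x^2}{2} - \frac{x^4}{24} + O(x^6)
\qquad \text{and} \qquad
g(y) = f(x) = \frac{1}{2} - \frac{x^2}{24} + O(x^4)
\,.
$$

Since $x^2 = 2(1 - y) + O\big((1-y)^2\big)$, this gives

$$
g(y) = \frac{1}{2} - \frac{1 - y}{12} + O\big((1-y)^2\big)
\,,
$$

so $g'(y) \to \tfrac{1}{12}$ as $y \to 1$. Assuming the cosine is rounded with relative error at most $u$ (the unit roundoff, a symbol introduced here only for illustration), we have $|\bar{y} - y| \le u$ and therefore roughly $|g(\bar{y}) - g(y)| \lesssim \tfrac{u}{12}$, which is tiny compared to $g(y) \approx \tfrac{1}{2}$.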

Now, let $\bar{y} := \fl(\cos(x))$. The number $\bar{y}$ is (by definition) exactly represented by a floating point number. When a floating point computation unit subtracts two floating point numbers, it computes a result that is precise up to machine precision. Catastrophic cancellation occurs when the operands had to be rounded first: the subtraction itself is accurate, but it exposes the rounding errors already present in its inputs.

To make this behavior clear, consider the following example. Assume we want to compute

$$

1.000 - 0.999

$$

using four digits of accuracy. It turns out that the three leading digits of the result are zero. To compute the remaining digits, the computer assumes that the two numbers are given exactly by floating point numbers, i.e., all the remaining digits are zero. Thus we can compute

$$

1.000000 - 0.999000 = 0.001000 = 1.000 \cdot 10^{-3}

\,.

$$

In this case, the result is even exact.

However, if we instead intended to compute

$$

1.000431 - 0.999001 = 1.430 \cdot 10^{-3}

\,,

$$

in four-digit accuracy, the computer would have done the same thing: after rounding the inputs to $1.000$ and $0.999$, it would again return $1.000 \cdot 10^{-3}$, which is off by about 30%.
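The two subtractions can be replayed with Python's `decimal` module, which lets us emulate four significant decimal digits. This is only an illustrative sketch: the helper name `four_digit_difference` and the choice of round-half-even are assumptions made here, not part of the argument above.

```python
from decimal import Context, Decimal, ROUND_HALF_EVEN

# A toy arithmetic with four significant decimal digits (assumed rounding mode).
ctx = Context(prec=4, rounding=ROUND_HALF_EVEN)

def four_digit_difference(a: str, b: str) -> Decimal:
    """Round both inputs to four digits, then subtract in four-digit arithmetic."""
    a4 = ctx.create_decimal(a)  # input rounding happens here
    b4 = ctx.create_decimal(b)
    return ctx.subtract(a4, b4)

# Inputs that are already four-digit numbers: nothing is lost.
exact_inputs = four_digit_difference("1.000", "0.999")

# Inputs that must be rounded first: the lost digits dominate the result.
rounded_inputs = four_digit_difference("1.000431", "0.999001")
true_difference = Decimal("1.000431") - Decimal("0.999001")
relative_error = abs(rounded_inputs - true_difference) / true_difference

print("exactly representable inputs:", exact_inputs)
print("rounded inputs:              ", rounded_inputs)
print("true difference:             ", true_difference)
print("relative error:              ", float(relative_error))
```

Both calls return the same value, but only in the first case is that value the difference of the numbers we actually meant.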

Thus, by first computing a floating point representation $\bar{y} \approx \cos(x)$ and then computing $g(\bar{y})$, we feed the subtraction a number that is exactly represented by a floating point value. Hence, the subtraction is precise up to machine precision. The only significant error that we are making is the error that results from computing $g(\bar{y})$ instead of $g(y)$, and this error is small because $g$ varies slowly near $y = 1$.
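A quick numerical check of this claim, as a minimal sketch assuming IEEE double precision and Python's standard `math` module (the variable names are chosen here for illustration). The true value of $f(10^{-7})$ differs from $\tfrac{1}{2}$ by less than $10^{-15}$, so $\tfrac{1}{2}$ serves as the reference.

```python
import math

def f(x: float) -> float:
    """Direct evaluation of (1 - cos(x)) / x^2."""
    return (1.0 - math.cos(x)) / x**2

def g(y: float) -> float:
    """Evaluation of (1 - y) / arccos(y)^2; requires y < 1."""
    return (1.0 - y) / math.acos(y) ** 2

x = 1e-7
y_bar = math.cos(x)   # fl(cos(x)), exactly a floating point number

direct = f(x)         # suffers from the rounding error in cos(x)
via_g = g(y_bar)      # same subtraction, but the denominator matches y_bar

print("f(x)       =", direct)
print("g(cos(x))  =", via_g)
print("error of f =", abs(direct - 0.5))
print("error of g =", abs(via_g - 0.5))
```

Note that the subtraction `1.0 - y_bar` is identical in both functions. The difference is the denominator: `x**2` belongs to the unrounded cosine, whereas `math.acos(y_bar) ** 2` is consistent with the rounded value that was actually subtracted. For very small `x`, `math.cos(x)` rounds to exactly `1.0` and `g` divides by zero, so this sketch does not cover that case.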