Let $(X_n)$ be a sequence of i.i.d. $\mathcal N(0,1)$ random variables. Define $S_0=0$ and $S_n=\sum_{k=1}^n X_k$ for $n\geq 1$. Find the limiting distribution of $$\frac1n \sum_{k=1}^{n}|S_{k-1}|(X_k^2 - 1).$$

This problem is from Shiryaev's Problems in Probability, in the chapter on the Central Limit Theorem. It was asked on this site in 2014 but remains unanswered. I posted it yesterday on Cross Validated, and I think it is worth cross-posting here as well.

Since $S_{k-1}$ and $X_k$ are independent, $E(|S_{k-1}|(X_k^2 - 1))=0$ and $$V(|S_{k-1}|(X_k^2 - 1)) = E(S_{k-1}^2(X_k^2 - 1)^2)= E(S_{k-1}^2)E((X_k^2 - 1)^2) =2(k-1)$$

Note that the terms $|S_{k-1}|(X_k^2 - 1)$ are clearly not independent. However, as observed by Clement C. in the comments, they are uncorrelated: for $j>k$, the variable $X_j$ is independent of $(X_1,\ldots,X_{j-1})$, so $$\begin{aligned}\operatorname{Cov}(|S_{k-1}|(X_k^2 - 1), |S_{j-1}|(X_j^2 - 1)) &= E(|S_{k-1}|(X_k^2 - 1)|S_{j-1}|)E(X_j^2 - 1)\\ &=0. \end{aligned}$$

Hence $\displaystyle V\left(\frac1n \sum_{k=1}^{n}|S_{k-1}|(X_k^2 - 1)\right) = \frac 1{n^2}\sum_{k=1}^{n} 2(k-1) = \frac{n(n-1)}{n^2} = \frac{n-1}n$, so the variance converges to $1$.
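As a sanity check on the variance computed above, here is a minimal sketch that compares the closed form $(n-1)/n$ with the exact sum and with a Monte Carlo estimate for a small $n$. The helper names `exact_variance` and `simulate_z`, the seed, and the sizes `n = 50`, `m = 50_000` are illustrative assumptions, not part of the original computation.

```python
from fractions import Fraction

import numpy as np


def exact_variance(n):
    """Return (1/n^2) * sum_{k=1}^n 2(k-1) as an exact fraction."""
    return Fraction(sum(2 * (k - 1) for k in range(1, n + 1)), n * n)


def simulate_z(n, m, rng):
    """Draw m samples of Z_n = (1/n) * sum_{k=1}^n |S_{k-1}| (X_k^2 - 1)."""
    x = rng.standard_normal(size=(m, n))
    # S_{k-1} = S_k - X_k, so the first column is S_0 = 0.
    s_prev = np.cumsum(x, axis=1) - x
    return np.sum(np.abs(s_prev) * (x**2 - 1), axis=1) / n


n, m = 50, 50_000  # illustrative sizes (assumption)
rng = np.random.default_rng(0)
z = simulate_z(n, m, rng)

print("exact sum   :", exact_variance(n))
print("closed form :", Fraction(n - 1, n))
print("Monte Carlo :", z.var())
```

The exact sum and the closed form should coincide, and the Monte Carlo value should be close to both, up to sampling noise.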

I have run simulations to get a feel for the answer:

```python
import numpy as np
import matplotlib.pyplot as plt

n = 30000  # number of terms in the sum
m = 10000  # number of samples

X = np.random.normal(size=(m, n))
sums = np.cumsum(X, axis=1)
sums = np.delete(sums, -1, 1)  # S_1, ..., S_{n-1}
# |S_{k-1}| (X_k^2 - 1) for k = 2, ..., n; the k = 1 term vanishes since S_0 = 0
prods = np.delete(X**2 - 1, 0, 1) * np.abs(sums)
samples = 1 / n * np.sum(prods, axis=1)

plt.hist(samples, bins=100, density=True)
plt.show()
```

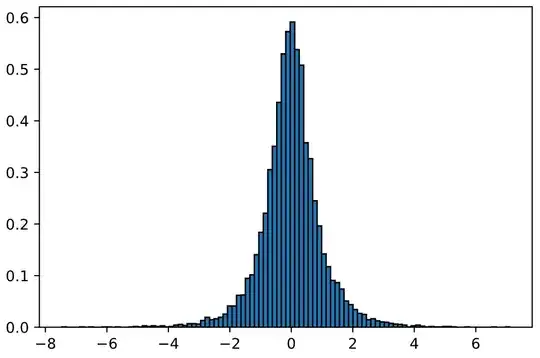

Above is a histogram of $10{,}000$ samples ($n=30{,}000$). The sample variance is $0.9891$, which agrees with the computation above. If the limiting distribution were $\mathcal N(0,\sigma^2)$, we would therefore need $\sigma=1$. However, the histogram peaks at around $0.6$, while the maximum of the $\mathcal N(0,1)$ density is $\frac 1{\sqrt{2 \pi}}\approx 0.4$. Thus the simulations suggest that the limiting distribution is not Gaussian.
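The visual comparison of peak heights can be complemented by numerical diagnostics. The sketch below computes the excess kurtosis (which is $0$ for a Gaussian) and runs a Kolmogorov-Smirnov test against $\mathcal N(0,1)$ on standardized samples. The sizes `n = 400`, `m = 10_000` and the seed are assumptions chosen to keep memory use small; this is only a finite-$n$ diagnostic and does not identify the limit.

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(1)
n, m = 400, 10_000  # illustrative sizes (assumption), much smaller than in the post

x = rng.standard_normal(size=(m, n))
s_prev = np.cumsum(x, axis=1) - x  # S_{k-1} = S_k - X_k, first column is S_0 = 0
z = np.sum(np.abs(s_prev) * (x**2 - 1), axis=1) / n

# Fisher definition: a Gaussian sample has excess kurtosis close to 0.
excess_kurtosis = stats.kurtosis(z)

# Compare the standardized samples with the standard normal CDF.
ks = stats.kstest(z / z.std(), "norm")

print("sample variance :", z.var())
print("excess kurtosis :", excess_kurtosis)
print("KS statistic    :", ks.statistic, "p-value:", ks.pvalue)
```

A clearly positive excess kurtosis together with a very small p-value would be consistent with the histogram: more mass near $0$ and heavier tails than a Gaussian with the same variance.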

It might help to write $|S_{k-1}| = (2\cdot 1_{S_{k-1}\geq 0} -1)S_{k-1}$.

It might also be helpful to note that if $Z_n=\frac1n \sum_{k=1}^{n}|S_{k-1}|(X_k^2 - 1)$, conditioning on $(X_1,\ldots,X_{n-1})$ yields $$E(e^{itnZ_n}) = E\left(e^{it(n-1)Z_{n-1}} \frac{e^{-it|S_{n-1}|}}{\sqrt{1-2it|S_{n-1}|}}\right)$$
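The factor inside the expectation comes from the identity $E\left(e^{ia(X^2-1)}\right) = \dfrac{e^{-ia}}{\sqrt{1-2ia}}$ for $X\sim\mathcal N(0,1)$ and real $a$, applied with $a = t|S_{n-1}|$. A minimal sketch checking this identity by Monte Carlo follows; the function names, the test values of `a`, and the sample size are illustrative assumptions.

```python
import numpy as np


def cf_closed_form(a):
    """e^{-ia} / sqrt(1 - 2ia), using the principal branch of the square root."""
    return np.exp(-1j * a) / np.sqrt(1 - 2j * a)


def cf_monte_carlo(a, m, rng):
    """Monte Carlo estimate of E[exp(i a (X^2 - 1))] for X ~ N(0, 1)."""
    x = rng.standard_normal(m)
    return np.mean(np.exp(1j * a * (x**2 - 1)))


rng = np.random.default_rng(2)
for a in (0.3, 1.0, 2.5):  # a plays the role of t * |S_{n-1}|
    print(a, cf_closed_form(a), cf_monte_carlo(a, 1_000_000, rng))
```

The principal branch is the right one here because $1-2ia$ always has positive real part.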

$$ \frac{1}{n} \sum_{k=1}^{n} S_{k-1} X_k $$

converges in distribution to the Ito integral

$$ \int_{0}^{1} W_s \, \mathrm{d}W_s = \frac{W_1^2 - 1}{2}, $$

where $(W_t)_{t\geq 0}$ is a Wiener process. Since $W_1 \sim \mathcal{N}(0, 1)$, this limiting distribution cannot be Gaussian. Now I suspect that OP's problem also suffers from the same issue.

– Sangchul Lee Aug 24 '19 at 23:40
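The convergence mentioned in the comment can be seen from the exact discrete identity $\sum_{k=1}^n S_{k-1}X_k = \frac12\left(S_n^2 - \sum_{k=1}^n X_k^2\right)$, which follows from $S_k^2 - S_{k-1}^2 = 2S_{k-1}X_k + X_k^2$. The sketch below checks the identity numerically and compares the result with $(W_1^2-1)/2$, taking $W_1 = S_n/\sqrt n$; the sizes and the seed are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(4)
n, m = 1_000, 5_000  # illustrative sizes (assumption)

x = rng.standard_normal(size=(m, n))
s = np.cumsum(x, axis=1)
s_prev = s - x  # S_{k-1} = S_k - X_k

# Left side: (1/n) * sum_k S_{k-1} X_k.
lhs = np.sum(s_prev * x, axis=1) / n
# Right side of the discrete identity: (S_n^2 - sum_k X_k^2) / (2n).
rhs = (s[:, -1] ** 2 - np.sum(x**2, axis=1)) / (2 * n)
max_gap = np.max(np.abs(lhs - rhs))

# Candidate limit (W_1^2 - 1) / 2 with W_1 = S_n / sqrt(n).
w1 = s[:, -1] / np.sqrt(n)
limit_samples = (w1**2 - 1) / 2

print("max |lhs - rhs|        :", max_gap)
print("max |lhs - limit|      :", np.max(np.abs(lhs - limit_samples)))
print("smallest sample of lhs :", lhs.min())
```

The samples are bounded below by roughly $-1/2$, which a Gaussian could not be.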