Given $\operatorname{Var}(X) = E[X^2] - (E[X])^2$, can anyone explain why $E[X^2]$ is simply the integral of $x^2 f(x)$ rather than of $f(x)^2$?

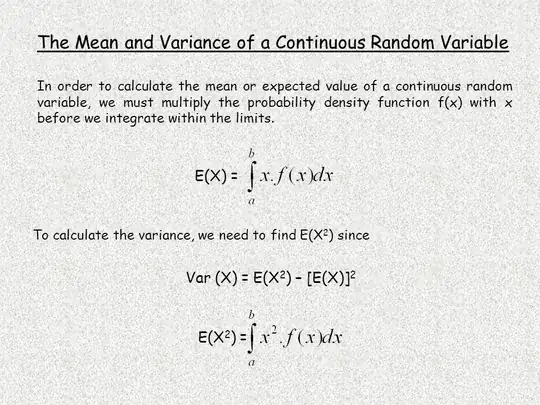

For example, in the picture above:

Why doesn't the last line use $f(x)^2$ instead of $x^2 f(x)$, since $X$ is simply described by $f(x)$? It doesn't make sense to me: it seems to take the expectation outside the domain of $X$ when $X$ has finite bounds.
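To make the question concrete, here is a rough numerical sketch that compares the two candidate integrals against a simulated value of $E[X^2]$. The distribution is an assumption chosen only for illustration (uniform on $[0, 2]$, so $f(x) = 1/2$ there); it is not taken from the picture, and the helper names `f` and `midpoint_integral` are hypothetical.

```python
import random

A, B = 0.0, 2.0  # assumed support of X (illustrative choice, not from the picture)

def f(x):
    """Density of the assumed Uniform(A, B) distribution."""
    return 1.0 / (B - A) if A <= x <= B else 0.0

def midpoint_integral(g, lo, hi, n=100_000):
    """Approximate the integral of g over [lo, hi] with the midpoint rule."""
    h = (hi - lo) / n
    return h * sum(g(lo + (i + 0.5) * h) for i in range(n))

# Simulate X, square each draw, and average: an empirical estimate of E[X^2].
random.seed(0)
samples = [random.uniform(A, B) for _ in range(200_000)]
mc_second_moment = sum(x * x for x in samples) / len(samples)

# The two candidate integrals from the question.
integral_x2_f = midpoint_integral(lambda x: x * x * f(x), A, B)
integral_f2 = midpoint_integral(lambda x: f(x) ** 2, A, B)

print("simulated E[X^2]       :", mc_second_moment)
print("integral of x^2 * f(x) :", integral_x2_f)
print("integral of f(x)^2     :", integral_f2)
```

For this assumed case, the integral of $x^2 f(x)$ is close to $4/3$ and agrees with the simulated average of $X^2$, while the integral of $f(x)^2$ is close to $1/2$. That is at least consistent with the formula in the picture: the values of $X$ get squared, and $f(x)$ only supplies the weights.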