Continuing my hobby (from here) of searching for simple convolution kernels that form families with interesting properties, I found a new one today. Consider the matrix:

$$T=\begin{bmatrix}1&2&-3\\3&2&3\\-3&2&1\end{bmatrix}$$

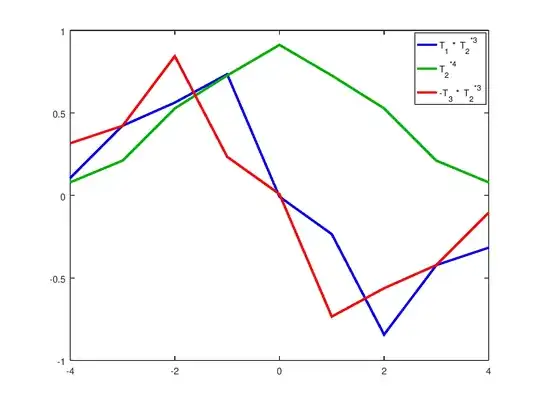

We can see that the first and third rows have zero mean and only one zero crossing each. This makes them, in some sense, derivative approximators. However, they are clearly skewed: the top one to the right and the bottom one to the left. If, as is usually done in signal processing, we repeatedly convolve each row with a low-pass filter a few times, we get the convolution kernels shown in the figure.
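The row properties above can be checked numerically. This is a minimal sketch: the post does not say which low-pass filter is iterated, so the binomial kernel `[1, 2, 1] / 4` (`LOWPASS`) and the number of iterations are hypothetical choices, as are the helper names `zero_crossings` and `smooth`.

```python
import numpy as np

T = np.array([[1, 2, -3],
              [3, 2, 3],
              [-3, 2, 1]], dtype=float)

def zero_crossings(row):
    """Count sign changes between consecutive nonzero taps."""
    signs = np.sign(row[row != 0])
    return int(np.sum(signs[1:] != signs[:-1]))

def smooth(kernel, lowpass, times):
    """Repeatedly convolve a kernel with a low-pass filter (full convolution)."""
    out = np.asarray(kernel, dtype=float)
    for _ in range(times):
        out = np.convolve(out, lowpass)
    return out

# Hypothetical low-pass filter: the binomial kernel [1, 2, 1] / 4 (sums to 1).
LOWPASS = np.array([1.0, 2.0, 1.0]) / 4

for name, row in zip(["top", "middle", "bottom"], T):
    print(name, "sum =", row.sum(), "zero crossings =", zero_crossings(row))

# Smooth each row 4 times; each pass lengthens the kernel by 2 taps (3 -> 11).
kernels = [smooth(row, LOWPASS, times=4) for row in T]
```

Because the sum of a convolution is the product of the sums, smoothing with a filter that sums to 1 keeps the two derivative kernels zero-mean. Note also that the bottom row is the top row reversed, which fits their opposite skews; with a symmetric low-pass filter, the smoothed kernels stay mirror images of each other.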

Green is the low-pass channel, a bell-like weighted average. Blue is a first-order derivative of the first kind, slightly "diffused" to the right. Red is a first-order derivative of the second kind, slightly "diffused" to the left.

Now to the fun part! :D

$$T^2 = \begin{bmatrix}16&0&0\\0&16&0\\0&0&16\end{bmatrix} = 16 I $$
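The identity $T^2 = 16I$ is easy to confirm with a quick NumPy sketch (only the matrix itself comes from the post):

```python
import numpy as np

T = np.array([[1, 2, -3],
              [3, 2, 3],
              [-3, 2, 1]])

T2 = T @ T
print(T2)

# For comparison: T @ T.T is NOT a multiple of the identity,
# so T is not a scaled orthogonal matrix.
TTt = T @ T.T
print(TTt)
```

For comparison, the rows of $T$ are not mutually orthogonal (e.g. row 1 · row 2 $= 3 + 4 - 9 = -2$), so $T/4$ being its own inverse is a different property from orthogonality, and the inverse is not simply the transpose.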

In other words, $T/4$ is an involution (its own inverse):

$$\frac T 4 = \left(\frac T 4\right)^{-1}$$
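The step from $T^2 = 16I$ to this statement is just a rescaling:

$$\left(\frac T 4\right)^2 = \frac{T^2}{16} = \frac{16 I}{16} = I \quad\Longrightarrow\quad \frac T 4 = \left(\frac T 4\right)^{-1}$$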

Apart from the obvious simplicity of implementing the inverse transform, what does this entail? Does it say anything about how the filters relate to each other?
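To illustrate the "simple inverse" point, here is a minimal sketch that assumes the transform is applied to non-overlapping blocks of three samples (my reading, not necessarily the intended use). The function name `block_transform` is hypothetical, and the signal length must be a multiple of 3.

```python
import numpy as np

# T / 4 squares to the identity, so it serves as both forward and inverse matrix.
A = np.array([[1, 2, -3],
              [3, 2, 3],
              [-3, 2, 1]], dtype=float) / 4

def block_transform(signal, M):
    """Apply M to each non-overlapping 3-sample block (len(signal) % 3 == 0)."""
    blocks = np.asarray(signal, dtype=float).reshape(-1, 3)
    # Each row of `blocks` is one block x; blocks @ M.T gives M @ x per block.
    return (blocks @ M.T).reshape(-1)

rng = np.random.default_rng(0)
x = rng.standard_normal(12)
coeffs = block_transform(x, A)       # analysis: two derivative + one low-pass coefficient per block
x_back = block_transform(coeffs, A)  # synthesis reuses the very same matrix
print(np.allclose(x_back, x))
```

The design point is that analysis and synthesis share one matrix, so no separate inverse needs to be computed or stored.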