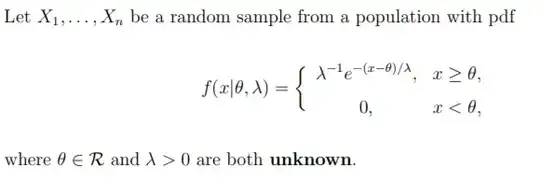

I'm attempting to find

$(a)$ The UMVUE of $\lambda$ when $\theta$ is known.

$(b)$ The UMVUE of $\theta$ when $\lambda$ is known.

$(c)$ The UMVUE of $P(X_1 \ge t)$ for a fixed $t > \theta$ when $\lambda$ is known.

I'm new to UMVUEs and am teaching myself from a mathematical statistics textbook. I would appreciate feedback on whether my answers to $(a)$ and $(b)$ are correct, and some help with $(c)$.

I found that $T = (X_{(1)}, \sum\limits_{i = 1 }^n {X_i })$ is jointly sufficient for $(\theta, \lambda)$.
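Assuming the density is $f(x) = \lambda^{-1} e^{-(x-\theta)/\lambda}$ for $x > \theta$ (consistent with $E(X_1) = \lambda + \theta$, which I use below), the joint density factors as

$$
\prod_{i=1}^n f(x_i) = \lambda^{-n}\exp\left\{-\frac{1}{\lambda}\left(\sum_{i=1}^n x_i - n\theta\right)\right\}\mathbf{1}\{x_{(1)} > \theta\},
$$

which depends on the data only through $(x_{(1)}, \sum x_i)$, so $T$ is sufficient by the factorization theorem. With $\theta$ known, the indicator does not involve $\lambda$, leaving a one-parameter exponential family in $\sum x_i$ with natural parameter $-1/\lambda$. With $\lambda$ known, the only factor involving $\theta$ is $e^{n\theta/\lambda}\mathbf{1}\{x_{(1)} > \theta\}$, so $X_{(1)}$ alone is sufficient for $\theta$.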

For $(a)$, when $\theta$ is known, $\sum\limits_{i = 1 }^n {X_i }$ is a complete sufficient statistic for $\lambda$.

$E(\sum\limits_{i = 1 }^n {X_i }) = n(\lambda + \theta)$

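Therefore $T_1 = \frac{\sum\limits_{i = 1 }^n {X_i }}{n} - \theta = \bar X - \theta$ is unbiased for $\lambda$ and is a function of the complete sufficient statistic, so by the Lehmann-Scheffé theorem it is the UMVUE of $\lambda$.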
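As a quick numerical sanity check (not a proof), the sketch below simulates $T_1 = \bar X - \theta$ under the assumed density $f(x) = \lambda^{-1} e^{-(x-\theta)/\lambda}$, $x > \theta$, and averages it over many replications. The parameter values and the helper name `simulate_t1` are hypothetical, chosen only for illustration.

```python
import random
import statistics

def simulate_t1(theta, lam, n, reps, seed=0):
    """Return simulated values of T1 = mean(X) - theta.

    Assumes X_i = theta + E_i with E_i exponential with mean lam, i.e. the
    density f(x) = exp(-(x - theta)/lam) / lam for x > theta.
    """
    rng = random.Random(seed)
    values = []
    for _ in range(reps):
        # expovariate takes the rate, so pass 1/lam to get mean lam
        sample = [theta + rng.expovariate(1.0 / lam) for _ in range(n)]
        values.append(statistics.fmean(sample) - theta)
    return values

theta, lam, n = 2.0, 3.0, 5  # hypothetical parameter values
t1 = simulate_t1(theta, lam, n, reps=20000)
print("simulated E[T1]:", statistics.fmean(t1))  # should be close to lam = 3.0
```

An average close to $\lambda$ is what unbiasedness predicts; the simulation says nothing about minimum variance, which comes from Lehmann-Scheffé.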

For $(b)$, when $\lambda$ is known, $X_{(1)}$ is sufficient and complete for $\theta$.

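Since $X_{(1)} - \theta$ is the minimum of $n$ independent exponential variables with mean $\lambda$, it is exponential with mean $\lambda/n$, so $E(X_{(1)}) = \theta + \lambda/n$. Therefore $T_2 = X_{(1)} - \lambda/n$ is the UMVUE of $\theta$.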
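The expectation of $X_{(1)}$ is the crux of $(b)$. Under the assumed density $f(x) = \lambda^{-1} e^{-(x-\theta)/\lambda}$, $x > \theta$, we have $P(X_{(1)} > x) = \prod_i P(X_i > x) = e^{-n(x-\theta)/\lambda}$ for $x > \theta$. The sketch below checks this by simulation, printing the simulated mean of $X_{(1)}$ next to both $\theta + \lambda$ and $\theta + \lambda/n$. The helper `simulate_min` and the parameter values are hypothetical.

```python
import random
import statistics

def simulate_min(theta, lam, n, reps, seed=1):
    """Simulate X_(1) = min(X_1, ..., X_n) under the assumed density
    f(x) = exp(-(x - theta)/lam) / lam for x > theta."""
    rng = random.Random(seed)
    return [min(theta + rng.expovariate(1.0 / lam) for _ in range(n))
            for _ in range(reps)]

theta, lam, n = 2.0, 3.0, 5  # hypothetical parameter values
mins = simulate_min(theta, lam, n, reps=20000)
print("simulated E[X_(1)]:", statistics.fmean(mins))
print("theta + lam       :", theta + lam)
print("theta + lam / n   :", theta + lam / n)
```

Whichever printed value the simulated mean lands next to indicates the constant to subtract from $X_{(1)}$ to get an unbiased estimator of $\theta$.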

For $(c)$, I'm not sure how to proceed. My guess is that the UMVUE is $P(X_1 \ge t \mid X_{(1)})$, which equals 1 when $X_{(1)} \ge t$, since then every observation is at least $t$. I'm unsure how to handle the case $X_{(1)} < t$, and whether this approach is correct at all.