Let $L = \lim_{x \rightarrow a^+} \frac{f'(x)}{g'(x)}$.

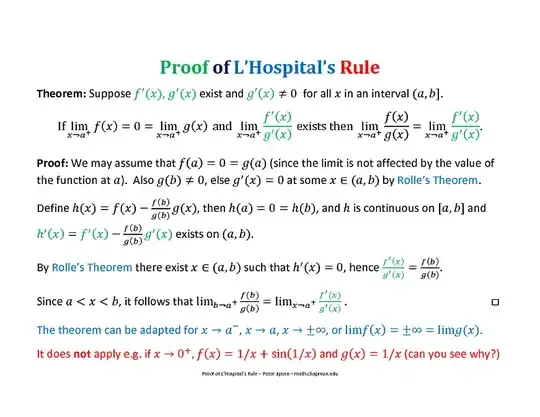

The argument shows that there is a $B > 0$ such that for every $b \in (a,a+B)$ there is an $x$ with $a < x < b$ such that $\frac{f'(x)}{g'(x)} = \frac{f(b)}{g(b)}$. In particular, for every $\epsilon > 0$ there is a $\delta > 0$ such that for at least one point $x$ in the interval $(a,a+\delta)$ we have $\left|\frac{f(x)}{g(x)} - L\right| < \epsilon$. So if $\lim_{x \rightarrow a^+} \frac{f(x)}{g(x)}$ exists, it must be equal to $L$.
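For reference, here is where the intermediate point comes from. This is a sketch under the assumption that we are in the $0/0$ case: $f$ and $g$ are differentiable with $g' \neq 0$ on $(a, a+B)$, $f(x), g(x) \to 0$ as $x \to a^+$, and we set $f(a) = g(a) = 0$ so both are continuous on $[a,b]$. The auxiliary function $h$ is introduced here only for illustration; applying Rolle's theorem to it gives the Cauchy Mean Value Theorem on $[a,b]$:

$$
\begin{aligned}
h(t) &= f(t)\,g(b) - g(t)\,f(b), \qquad t \in [a,b],\\
h(a) &= 0 = h(b) \;\Longrightarrow\; h'(x) = f'(x)\,g(b) - g'(x)\,f(b) = 0 \text{ for some } x \in (a,b)\\
&\Longrightarrow\; \frac{f'(x)}{g'(x)} = \frac{f(b)}{g(b)}.
\end{aligned}
$$

Dividing is legitimate because $g'(x) \neq 0$ by assumption and $g(b) \neq 0$ (otherwise Rolle's theorem applied to $g$ on $[a,b]$ would produce a zero of $g'$).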

But where is the argument that $\lim_{x \rightarrow a^+} \frac{f(x)}{g(x)}$ exists? That's the hard part, of course.

Added: After more thought I realized that the argument is correct and that it really is the usual argument, just presented (i) without stating the Cauchy Mean Value Theorem in advance (thanks to @boywholived) and (ii) without being as explicit in some details. In particular, the point $x$ lies in $(a,b)$, so as $b$ approaches $a$ from the right, $x$ is forced to approach $a$ as well. Hence $\frac{f'(x)}{g'(x)}$ approaches $L$, and so does $\frac{f(b)}{g(b)} = \frac{f'(x)}{g'(x)}$.
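A numerical sketch of this squeezing, using a hypothetical example that is not taken from the excerpt: $a = 0$, $f(t) = 1 - \cos t$, $g(t) = t^2$, so $L = \lim_{x \to 0^+} \frac{\sin x}{2x} = \frac12$. The helper names `f_over_g`, `fp_over_gp` and `cauchy_point` are made up for illustration. `cauchy_point(b)` bisects for the intermediate point $x \in (0,b)$ with $\frac{f'(x)}{g'(x)} = \frac{f(b)}{g(b)}$; because $x$ is trapped in $(0,b)$, shrinking $b$ drags $x$ toward $0$ and $\frac{f(b)}{g(b)}$ toward $\frac12$.

```python
import math


def f_over_g(b: float) -> float:
    """f(b)/g(b) for the hypothetical example f(t) = 1 - cos t, g(t) = t**2.

    Uses the identity 1 - cos b = 2 sin^2(b/2) to avoid cancellation for small b.
    """
    return 2 * math.sin(b / 2) ** 2 / b**2


def fp_over_gp(x: float) -> float:
    """f'(x)/g'(x) = sin(x) / (2x) for the same hypothetical example."""
    return math.sin(x) / (2 * x)


def cauchy_point(b: float, iterations: int = 100) -> float:
    """Bisect for x in (0, b) with f'(x)/g'(x) = f(b)/g(b); assumes 0 < b < pi.

    On (0, pi), sin(x)/(2x) decreases strictly from 1/2, and f(b)/g(b) lies
    strictly between fp_over_gp(b) and 1/2, so exactly one such x exists.
    """
    target = f_over_g(b)
    lo, hi = 0.0, b  # fp_over_gp(x) - target is > 0 just right of 0 and < 0 at b
    for _ in range(iterations):
        mid = (lo + hi) / 2
        if fp_over_gp(mid) > target:
            lo = mid  # ratio still too large: the root lies to the right of mid
        else:
            hi = mid  # ratio at or below target: the root lies at or left of mid
    return (lo + hi) / 2


if __name__ == "__main__":
    for b in (1.0, 0.1, 0.01, 0.001):
        x = cauchy_point(b)
        print(f"b={b:<6} x={x:.6g}  x/b={x / b:.6f}  f(b)/g(b)={f_over_g(b):.10f}")
```

Running it should show $x/b$ settling near $1/\sqrt{2} \approx 0.7071$ (a second-order Taylor expansion of both ratios predicts $x \approx b/\sqrt{2}$ for small $b$) while $f(b)/g(b)$ approaches $0.5$.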

The only limitation I now see is that L'Hôpital's Rule also has a version in which $\lim_{x \rightarrow a^+} g(x) = \infty$, and the proof for that really is a bit different. (This limitation is alluded to at the end of the excerpted passage.) See $\S$ 7.1 of these notes for the full proof, which is taken directly from Rudin's *Principles of Mathematical Analysis*.

(I now have to figure out why I had trouble seeing that before; my initial answer is rather embarrassing. Or, turned around, the technique employed in the standard argument is actually rather interesting: it is like a squeezing argument, but with the limit in the middle existing and forcing the outer limit to exist. My mind clearly rebels against this argument a bit: if you compare Rudin's treatment to the one in my notes, you'll see that mine is not copied directly but replaces the "as X approaches Y" business with explicit inequalities. I wonder if it is used elsewhere...)

(Still later: more precisely, the difference is that the squeezing takes place in the independent variable, and in the correct order, rather than in the dependent variable: if $\lim_{x \rightarrow a^+} u(x) = L$ and $v(x)$ is a function such that for all $x \in (a,a+\Delta)$, with $\Delta > 0$, we have $v(x) = u(y)$ for some $a < y < x$, then $\lim_{x \rightarrow a^+} v(x) = L$.)
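A short proof of this lemma, written out with explicit inequalities under the assumption $\Delta > 0$. Taking $u = f'/g'$ and $v = f/g$, with $y$ supplied for each $x$ by the Cauchy Mean Value Theorem, recovers L'Hôpital's Rule in the $0/0$ case:

$$
\begin{aligned}
&\text{Given } \epsilon > 0, \text{ choose } 0 < \delta \le \Delta \text{ with } |u(y) - L| < \epsilon \text{ for all } y \in (a, a+\delta).\\
&\text{For } x \in (a, a+\delta), \text{ pick } y \text{ with } a < y < x \text{ and } v(x) = u(y); \text{ then } y \in (a, a+\delta),\\
&\text{so } |v(x) - L| = |u(y) - L| < \epsilon. \text{ Hence } \lim_{x \to a^+} v(x) = L.
\end{aligned}
$$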