Based on the random sample $(6.3,\ 1.8,\ 14.2,\ 7.6)$, use the method of maximum likelihood to estimate $\theta_1$ and $\theta_2$ for the uniform density

$$f_Y(y;\theta_1, \theta_2) = \frac{1}{\theta_2- \theta_1}, \quad \theta_1 \le y \le \theta_2$$

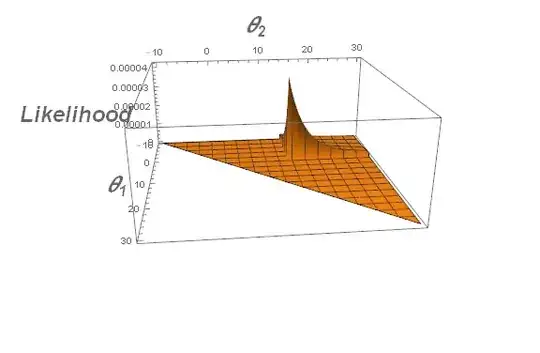

$$
\begin{aligned}
L(\theta_1, \theta_2) &= \prod_{i=1}^{n}\frac{1}{\theta_2-\theta_1}\,\mathbf{1}(\theta_1 \le y_i \le \theta_2) \\
&= \frac{1}{(\theta_2- \theta_1)^n}\prod_{i=1}^{n}\mathbf{1}(\theta_1 \le y_i)\,\mathbf{1}(y_i \le \theta_2) \\
&= \frac{1}{(\theta_2- \theta_1)^n}\,\mathbf{1}\big(\theta_1 \le \min_i y_i\big)\,\mathbf{1}\big(\max_i y_i \le \theta_2\big)
\end{aligned}
$$

Let $T = \ln L(\theta_1, \theta_2)$. With the convention $\ln 0 = -\infty$,

$$
T = -n\ln(\theta_2 - \theta_1) + \ln\Big[\mathbf{1}\big(\theta_1 \le \min_i y_i\big)\,\mathbf{1}\big(\max_i y_i \le \theta_2\big)\Big]
= \begin{cases} -\infty, & \text{if } \theta_1 > \min_i y_i \text{ or } \theta_2 < \max_i y_i, \\ -n\ln(\theta_2 - \theta_1), & \text{otherwise.} \end{cases}
$$
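As a quick numerical check of the indicator-function form above, here is a minimal Python sketch. The function name `log_likelihood` is my own, and I assume $\theta_1 < \theta_2$ so the logarithm is defined. It returns $-\infty$ whenever a data point falls outside $[\theta_1, \theta_2]$, matching the two cases for $T$.

```python
import math

def log_likelihood(theta1, theta2, ys):
    """T = ln L(theta1, theta2) for an i.i.d. uniform(theta1, theta2) sample ys."""
    # Assumption: require theta1 < theta2 so that ln(theta2 - theta1) is defined.
    if theta1 >= theta2:
        return -math.inf
    # The product of indicators 1(theta1 <= y_i) 1(y_i <= theta2) is 1 exactly when
    # theta1 <= min(ys) and max(ys) <= theta2; otherwise L = 0, so ln L = -inf.
    if theta1 > min(ys) or theta2 < max(ys):
        return -math.inf
    return -len(ys) * math.log(theta2 - theta1)

sample = [6.3, 1.8, 14.2, 7.6]
print(log_likelihood(1.8, 14.2, sample))  # finite: equals -4 * ln(14.2 - 1.8)
print(log_likelihood(2.0, 14.2, sample))  # -inf, because y = 1.8 < theta1
```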

Now take the partial derivative of $T$ (on the region where it is finite) with respect to each parameter, starting with $\theta_2$:

$$\frac{\partial T}{\partial \theta_2} = \frac{-n}{\theta_2 - \theta_1} = \frac{n}{\theta_1 - \theta_2}$$

To maximise $T$ we want the denominator $\theta_2 - \theta_1$ to be as small as possible; since $\theta_2 \ge \max_i y_i$ is required for a nonzero likelihood, we set $\theta_2 = \max_i y_i$.

Similarly, for $\theta_1$ (substituting $\theta_2 = \max_i y_i$):

$$\frac{\partial{T}}{\partial{\theta_1}}=\frac{n}{\max_i(y_i) - \theta_1}$$

To maximise $T$, we want $\theta_1$ as large as the constraint $\theta_1 \le \min_i y_i$ allows, so $\theta_1 = \min_i y_i$.
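The signs of the two partial derivatives can be sanity-checked with central finite differences. This is a minimal sketch; the evaluation point $(\theta_1, \theta_2) = (1.0, 15.0)$ is an arbitrary feasible choice of mine for illustration. Because $\partial T/\partial\theta_2 < 0$ and $\partial T/\partial\theta_1 > 0$ everywhere on the feasible region, $T$ has no stationary point there and is maximised on the boundary $\theta_1 = \min_i y_i$, $\theta_2 = \max_i y_i$.

```python
import math

def T(theta1, theta2, n):
    # Log-likelihood on the feasible region theta1 <= min y_i, max y_i <= theta2
    return -n * math.log(theta2 - theta1)

n = 4                 # sample size
t1, t2 = 1.0, 15.0    # arbitrary feasible point (assumption, for illustration)
h = 1e-6              # finite-difference step

# Central finite differences for the two partial derivatives
dT_dtheta2 = (T(t1, t2 + h, n) - T(t1, t2 - h, n)) / (2 * h)
dT_dtheta1 = (T(t1 + h, t2, n) - T(t1 - h, t2, n)) / (2 * h)

print(dT_dtheta2, -n / (t2 - t1))  # numerical vs analytic: both negative
print(dT_dtheta1, n / (t2 - t1))   # numerical vs analytic: both positive
```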

This implies $\theta_1 = 1.8$ and $\theta_2 = 14.2$
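In code, the resulting estimator is just the sample minimum and maximum. A minimal sketch (the name `uniform_mle` is hypothetical):

```python
def uniform_mle(ys):
    """MLE of (theta1, theta2) for an i.i.d. uniform(theta1, theta2) sample."""
    # theta1_hat = smallest observation, theta2_hat = largest observation
    return min(ys), max(ys)

print(uniform_mle([6.3, 1.8, 14.2, 7.6]))  # (1.8, 14.2)
```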

Could someone check my work, particularly the indicator functions (I'm new to those), and point out anything else that is wrong in the math or formatting?

Actually, I think the part highlighted in yellow above is not right: I'm not setting the derivative equal to 0. I think I got the correct answer regardless. A better approach is probably to look at the term

$$-n\ln(\theta_2 - \theta_1)$$

and note that minimising the quantity in the brackets, $\theta_2 - \theta_1$, maximises $\ln L(\theta_1, \theta_2)$. This leads to the same result I reached illegitimately above.
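The minimisation step can be written out as an inequality. On the region where the likelihood is nonzero we have $\theta_1 \le \min_i y_i$ and $\theta_2 \ge \max_i y_i$, so

$$\theta_2-\theta_1 \ge \max_i y_i - \min_i y_i \quad\Longrightarrow\quad \ln L(\theta_1,\theta_2) = -n\ln(\theta_2-\theta_1) \le -n\ln\big(\max_i y_i - \min_i y_i\big),$$

with equality only when $\theta_1 = \min_i y_i$ and $\theta_2 = \max_i y_i$. For this sample, $\max_i y_i - \min_i y_i = 14.2 - 1.8 = 12.4$, so the maximised log-likelihood is $-4\ln 12.4$.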

This implies that $\hat{\theta}_1 = 1.8$ and $\hat{\theta}_2 = 14.2$.
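A brute-force grid search gives an independent check of this answer. This is a minimal sketch under my own assumptions: a grid of step 0.1 on $[0, 20]$ for both parameters, with values rounded to one decimal so that the grid points 1.8 and 14.2 compare equal to the data values.

```python
import math

def log_likelihood(theta1, theta2, ys):
    # -inf outside the feasible region (including theta1 >= theta2), else -n ln(theta2 - theta1)
    if theta1 >= theta2 or theta1 > min(ys) or theta2 < max(ys):
        return -math.inf
    return -len(ys) * math.log(theta2 - theta1)

sample = [6.3, 1.8, 14.2, 7.6]
# Hypothetical grid: 0.0, 0.1, ..., 20.0 for both theta1 and theta2
grid = [round(0.1 * k, 1) for k in range(201)]
best = max(((t1, t2) for t1 in grid for t2 in grid),
           key=lambda p: log_likelihood(p[0], p[1], sample))
print(best)  # (1.8, 14.2)
```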

$$
\begin{aligned}
L(\theta\mid y_1,y_2,\ldots,y_n) &= \frac{1}{(\theta_2-\theta_1)^n}\mathbf 1_{\theta_1 \le y_1,\ldots,y_n \le \theta_2} \\
&= \frac{1}{(\theta_2-\theta_1)^n}\mathbf 1_{\theta_1 \le \min y_i,\ \max y_i \le \theta_2}
\end{aligned}
$$

Clearly, the likelihood function is maximized when $\theta_2-\theta_1$ is minimized.

Can you now justify why $L(\theta\mid y_1,\ldots,y_n)$ is maximized at $(\theta_1,\theta_2)=\left(\min\limits_{1\le i\le n} y_i,\max\limits_{1\le i\le n} y_i\right)$?

– StubbornAtom Oct 05 '18 at 13:52