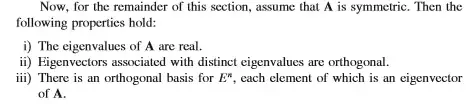

In the appendix of a book on linear and nonlinear optimization, I saw the following statement on symmetric matrices:

In these notes, $A$ is an $n \times n$ square matrix and $E^n$ is the $n$-dimensional Euclidean space. I already know that a repeated eigenvalue of a symmetric matrix has linearly independent eigenvectors, but they are not necessarily orthogonal. How, then, can the third statement be correct if $A$ has repeated eigenvalues? I think this is a misstatement, but I wonder whether I am missing something.
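
To make my concern concrete, here is a small example of my own (it is not from the book). Take the $2 \times 2$ identity matrix, which is symmetric and has the repeated eigenvalue $\lambda = 1$:

$$
A = \begin{pmatrix} 1 & 0 \\ 0 & 1 \end{pmatrix}, \qquad
v_1 = \begin{pmatrix} 1 \\ 0 \end{pmatrix}, \qquad
v_2 = \begin{pmatrix} 1 \\ 1 \end{pmatrix}.
$$

Both vectors satisfy $A v_i = 1 \cdot v_i$, and they are linearly independent because $\det \begin{pmatrix} 1 & 1 \\ 0 & 1 \end{pmatrix} = 1 \neq 0$. However, $v_1^T v_2 = 1 \neq 0$, so this particular pair of eigenvectors is not orthogonal.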