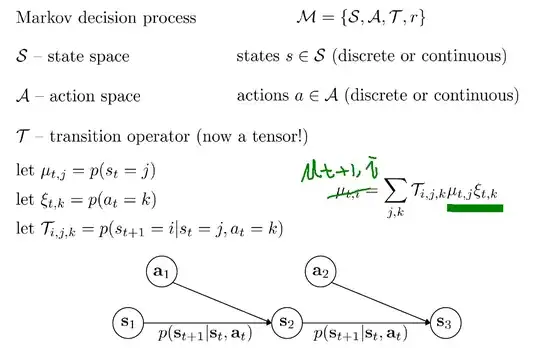

The screenshot above is taken from a lecture on reinforcement learning.

Why is the equation marked in green true? From the law of total probability, I can see that

$$\mu_{t+1,i} = \sum_{j,k}p(s_{t+1}=i|s_t=j,a_t=k)p(s_t=j,a_t=k)$$

But to get the green one, we need $s_t$ and $a_t$ to be independent, so that $p(s_t=j,a_t=k)$ expands into $p(s_t=j)\,p(a_t=k)$.
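To check numerically that the two forms really differ, here is a minimal NumPy sketch. It uses a hypothetical 2-state, 2-action MDP: the transition numbers, the policy tables, and the function name `next_state_distribution` are all made up for illustration. `pi[j, k]` stands for $p(a_t=k \mid s_t=j)$ and `xi[k]` for the marginal $p(a_t=k)$. The sketch compares the law-of-total-probability form with the factorized form $\sum_{j,k} p(s_{t+1}=i \mid s_t=j,a_t=k)\,p(s_t=j)\,p(a_t=k)$.

```python
import numpy as np

def next_state_distribution(T, mu, pi):
    """Return (exact, factorized) estimates of p(s_{t+1} = i).

    T[i, j, k] = p(s_{t+1}=i | s_t=j, a_t=k)
    mu[j]      = p(s_t=j)
    pi[j, k]   = p(a_t=k | s_t=j)   (hypothetical policy table)
    """
    joint = mu[:, None] * pi                          # p(s_t=j, a_t=k) via the chain rule
    exact = np.einsum("ijk,jk->i", T, joint)          # law of total probability
    xi = joint.sum(axis=0)                            # marginal p(a_t=k)
    factorized = np.einsum("ijk,j,k->i", T, mu, xi)   # assumes s_t and a_t independent
    return exact, factorized

# Hypothetical transition tensor; each column T[:, j, k] sums to 1.
T = np.zeros((2, 2, 2))
T[:, 0, 0] = [0.9, 0.1]
T[:, 0, 1] = [0.2, 0.8]
T[:, 1, 0] = [0.7, 0.3]
T[:, 1, 1] = [0.1, 0.9]

mu = np.array([0.5, 0.5])  # p(s_t = j)

# Policy that depends on the state: action k = state j.
pi_dependent = np.array([[1.0, 0.0],
                         [0.0, 1.0]])
# Policy that ignores the state: uniform over actions.
pi_uniform = np.array([[0.5, 0.5],
                       [0.5, 0.5]])

exact_dep, fact_dep = next_state_distribution(T, mu, pi_dependent)
exact_uni, fact_uni = next_state_distribution(T, mu, pi_uniform)

print("state-dependent policy:", exact_dep, fact_dep)
print("state-independent policy:", exact_uni, fact_uni)
```

With the state-dependent policy the exact result is approximately $[0.5, 0.5]$ while the factorized one is approximately $[0.475, 0.525]$. With the uniform policy the two agree, because `pi[j, k]` does not vary with `j`, so the joint really does factor into $p(s_t=j)\,p(a_t=k)$.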

My questions are:

1) Is this independence baked into the definition of an MDP?

2) If so, how can this apply to reinforcement learning? The action the agent takes at step $t$, $a_t$, clearly depends on the state $s_t$; otherwise the agent would just be rolling a die. Are we relying on the independence being a mere numerical coincidence that has nothing to do with causality?