With the spectral radius, you are on the right track.

Let us write down what we have:

$$ A = \left( \begin{array}{ccc}

&1 & 2 & 3 \\

&2 & -1 & 2 \\

&3 & 1 & -2 \end{array} \right)$$ and (less importantly) $$b = \left( \begin{array}{c}

5 \\

1 \\

-1 \end{array} \right).$$

So how do we formulate Gauss-Seidel? Note that there are different formulation, but I will do my analysis based on this link, page 1. Let $ A = L+D+U$ be its decomposition in lower, diagonal and upper matrix. Then Gauss-Seidel works as follows:

\begin{align}

(D+L)x^{k+1}&= -Ux^k+b

\\ \Leftrightarrow x^{k+1} &= Gx^k+\tilde{b}

\end{align}

with

$$ G = -(D+L)^{-1} U.$$

Note that you don't actually calculate it that way (never the inverse)! Let $x$ be the solution of the system $Ax=b$, then we have an error $e^k=x^k-x$ from which it follows (see reference above) that

$$ e^{k+1} = Ge^k$$

Thus Gauss-Seidel converges ($e^k\rightarrow 0$ when $k\rightarrow \infty$) iff $\rho(G)<1$. When you have calculated $\rho(G)$ and it is greater than 1, Gauss-Seidel will not converge (Matlab also gives me $\rho(G)>1$).

With the Jacobi method it is basically the same, except you have $A=D+(A-D)$ and your method is

$$ Dx^{k+1} = -(A-D)x^k+b, $$

from which we obtain

$$

x^{k+1} = Gx^k+\tilde{b},

$$

with

$$ G = -D^{-1} (A-D).$$

As before, we have $e^{k+1} = Ge^k$.

We again have $\rho(G)>1$. Therefore, both methods diverge in the given case.

In the following I have done a simple implementation of the code in Matlab.

function iterative_method

A=[ 1 2 3

2 -1 2

3 1 -2];

b=[ 5 1 -1]';

L=[ 0 0 0

2 0 0

3 1 0];

U=[ 0 2 3

0 0 2

0 0 0];

D=[ 1 0 0

0 -1 0

0 0 -2];

x0 = rand(3,1); % random start vector

x = A\b; % "exact" solution

% Gauss Seidel

figure(1)

semilogy(1,abs(x0-x),'xr')

hold on

xi =x0;

for i=2:100

xi = (D+L)((-U*xi)+b);

semilogy(i,abs(xi-x),'xr')

end

% Jacobi

hold off

figure(2)

semilogy(1,abs(x0-x),'ob')

hold on

xi =x0;

for i=2:100

xi = D(-(A-D)*xi+b);

semilogy(i,abs(xi-x),'ob')

end

end

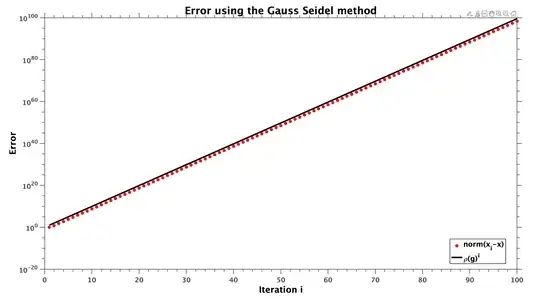

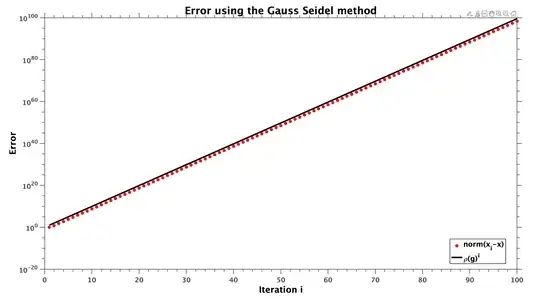

This shows, that both methods diverge as expected.

As we see from $ e^{k+1} = G e^k = G^k e^0$, we have exponential growth in our error.

For comparison, I added $y(\text{iteration number})=\rho(G)^\text{iteration number}$ in black. We can see that this matches the calculated errors.

Bonus

Even though this was no longer asked, I would like to say something about successive over-relaxation (SOR).

This method is a modification of the Gauss-Seidel method from above. But here we introduce a relaxation factor $\omega>1$.

And rewrite our method as follows:

$$ (D+\omega ) x^{k+1} = -(\omega U + (\omega-1)D)x^k+\omega b$$

Normally one wants to increase the convergence speed by choosing a value for $\omega$. It basically means, that you stretch

the step you take in each iteration, assuming your going in the right direction. But in our case we can make use of something similar,

called under-relaxation. Here we take small steps by choosing $\omega<1$.

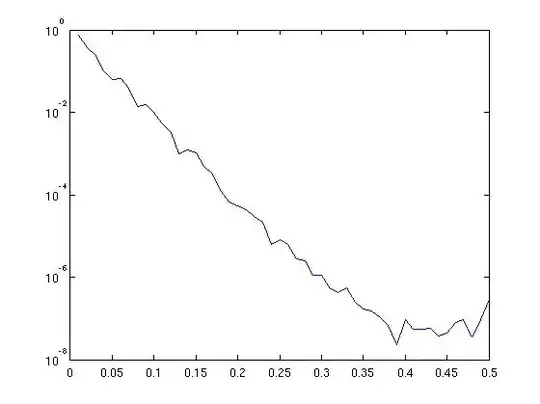

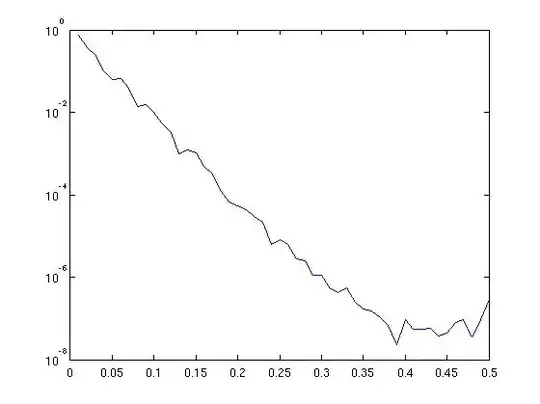

I have done some calculations, playing with different values for $\omega$. The plot below shows the

error of $x^{100}-x$ for different values of $\omega$ on the x-axis, once for $0.01<\omega<2$ and in the second plot

for $0.01<\omega<0.5$. We can see, that for a value of $\omega\approx 0.38$ we get optimal convergence.

Even though this might be a little more than you asked for, I still hope it might interest you to see, that

small modifications in your algorithm can yield different results.