I have encountered these two gradients, $\triangledown_{w} w^{t}X^{t}y$ and $\triangledown_{w} w^t X^tXw$, where $w$ is an $n\times 1$ vector, $X$ is an $m\times n$ matrix, and $y$ is an $m\times 1$ vector.

My approach for $\triangledown_{w} w^{t}X^{t}y$ was this:

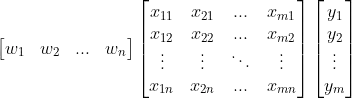

$$w^{t}X^{t}y = y_1\left(\sum_{i=1}^{n}w_ix_{1i}\right) + y_2\left(\sum_{i=1}^{n}w_ix_{2i}\right) + \dots + y_m\left(\sum_{i=1}^{n}w_ix_{mi}\right) = \sum_{j=1}^{m}\sum_{i=1}^{n} y_jw_ix_{ji}$$
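As a quick sanity check of this expansion (a sketch added for illustration, not part of the original question; the function names and the random test data are hypothetical), the snippet evaluates $w^tX^ty$ both as the matrix product $(Xw)^ty$ and as the double sum $\sum_j\sum_i y_jw_ix_{ji}$. It uses plain Python lists, so no external library is assumed.

```python
import random


def matvec(X, w):
    """Return the product X w, where X is a list of m rows, each of length n."""
    return [sum(x_ji * w_i for x_ji, w_i in zip(row, w)) for row in X]


def via_matrix_product(w, X, y):
    """Compute w^t X^t y as (X w)^t y."""
    return sum(xw_j * y_j for xw_j, y_j in zip(matvec(X, w), y))


def via_double_sum(w, X, y):
    """Compute the expanded form sum_j sum_i y_j w_i x_{ji}."""
    m, n = len(X), len(w)
    return sum(y[j] * w[i] * X[j][i] for j in range(m) for i in range(n))


# Hypothetical random test data: X is m x n, w has n entries, y has m entries.
random.seed(0)
m, n = 4, 3
X = [[random.uniform(-1, 1) for _ in range(n)] for _ in range(m)]
w = [random.uniform(-1, 1) for _ in range(n)]
y = [random.uniform(-1, 1) for _ in range(m)]

# The two values should agree up to floating-point rounding.
print(via_matrix_product(w, X, y), via_double_sum(w, X, y))
```

The identity $w^tX^t = (Xw)^t$ is what lets the matrix-product version compute $Xw$ first and then take a dot product with $y$.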

$$\frac{\partial }{\partial w_a}\left [\sum_{j=1}^{m}\sum_{i=1}^{n} y_jw_ix_{ji}\right ] = \sum_{j=1}^{m}\sum_{i=1}^{n} y_jx_{ji}\delta_{ia} = \sum_{j=1}^{m}y_jx_{ja}$$
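One way to test whether the component formula $\frac{\partial}{\partial w_a} = \sum_{j=1}^{m} y_jx_{ja}$ is correct is to compare it numerically against a central finite difference at a random point. This is a sketch under stated assumptions (not from the original post): the helper names, the step size `h`, and the random data are hypothetical, and indices are 0-based in the code.

```python
import random


def f(w, X, y):
    """f(w) = w^t X^t y, written as the double sum sum_j sum_i y_j w_i x_{ji}."""
    return sum(y[j] * w[i] * X[j][i] for j in range(len(X)) for i in range(len(w)))


def partial_formula(a, X, y):
    """Component formula from the derivation: sum_j y_j x_{ja} (0-based index a)."""
    return sum(y[j] * X[j][a] for j in range(len(X)))


def partial_central_difference(func, a, w, X, y, h=1e-6):
    """Estimate d func / d w_a at w with a central difference of step h."""
    w_plus = list(w)
    w_plus[a] += h
    w_minus = list(w)
    w_minus[a] -= h
    return (func(w_plus, X, y) - func(w_minus, X, y)) / (2 * h)


# Hypothetical random test data: X is m x n, w has n entries, y has m entries.
random.seed(1)
m, n = 5, 3
X = [[random.uniform(-1, 1) for _ in range(n)] for _ in range(m)]
w = [random.uniform(-1, 1) for _ in range(n)]
y = [random.uniform(-1, 1) for _ in range(m)]

# Print each component: formula value next to the finite-difference estimate.
for a in range(n):
    print(a, partial_formula(a, X, y), partial_central_difference(f, a, w, X, y))
```

Because $f$ is linear in $w$, the central difference is exact apart from floating-point rounding, so close agreement supports the component formula. It does not by itself say how to write the result in matrix notation.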

I'm stuck at this point: I don't know how to convert this into matrix notation, and I'm not even sure it is correct.

How can I get the actual gradient $\triangledown_{w} w^{t}X^{t}y$ from that partial derivative? Is there an easier way to compute the gradient (perhaps using rules, as in ordinary calculus)? Working with summations seems tedious, especially when you have to calculate $\triangledown_{w} w^t X^tXw$.

How do I then work out $\triangledown_{w} w^t X^tXw$?