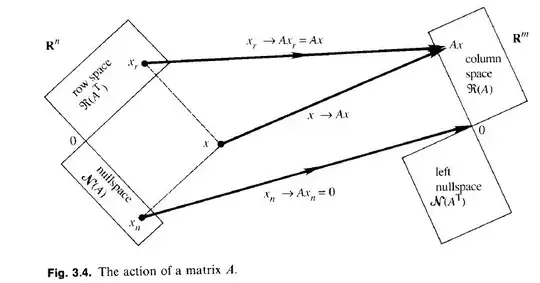

We know that if $A$ is a bounded linear operator on a Hilbert space, then $\operatorname{Ker}(A) \perp \operatorname{Range}(A^*)$, where $A^*$ is the adjoint of $A$.
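
For reference, the computation behind this, as I understand it, is a single line. Here I am assuming $A : H_1 \to H_2$ acts between Hilbert spaces (the names $H_1$, $H_2$, $x$, $z$ are my own notation), so that every element of $\operatorname{Range}(A^*)$ has the form $A^* z$ for some $z \in H_2$:

$$\langle x, A^* z \rangle = \langle A x, z \rangle = \langle 0, z \rangle = 0 \qquad \text{for all } x \in \operatorname{Ker}(A),\ z \in H_2.$$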

I have no trouble understanding the proof (which can be found, for example, in this answer), but I cannot really grasp the intuition behind it.

Is there some way to intuitively understand this result, maybe through geometrical reasoning?