My answer is based on Mike Spivey's answer to a related question, which uses the probability generating function $G(z)$ of a discrete random variable $X$:

$$G(z) = \sum_{k=0}^{\infty} p(k)z^k $$

where $p$ is the probability mass function of $X$. The probability generating function has the nice property that $E[X] = \lim_{z \to 1-}G'(z)$ (limit from below).

I adapt Mike's answer here for completeness. Any thoughts would be appreciated; I'm curious whether my reasoning is correct or whether there is a cleverer way to solve this problem!

We need a specific sequence of seven characters to form the word "COVFEFE": first a "C", then an "O", followed by a "V", and so on. Label the event of typing the correct next character of "COVFEFE" with $S$, and the event of typing an incorrect character with $F$. The word "COVFEFE" appearing at the end of a long sequence of characters is then described by the event $A\cdot SSSSSSS = AS^7$, i.e. a sequence $A$ of characters that contains no run of seven $S$, followed by seven $S$ in a row. Many characters will likely appear before we finally get to $AS^7$. Mr. Trump could type out "COVFEFXCOVFEFE" (the event $S^6FS^7$) or "AAACOVFEFE" ($F^3S^7$), for instance. Undoubtedly, all these character sequences will spark a lively debate on social media about whether Mr. Trump is actually tweeting out nuclear launch codes and impending doom is imminent.

We can look at the situation in the following way. Mr. Trump plays a game of typing random characters, one after another, and the game ends when he types seven $S$ in a row. We need the expected number of characters he types until this happens.
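The game can also be treated as a Markov chain whose state is the current number of consecutive $S$, which gives a quick cross-check by first-step analysis. This is a swapped-in technique, not Mike's generating-function method. The sketch assumes the same model as this answer (each character is independently $S$ with probability $p$, and any $F$ resets the count to zero), and the function name `expected_characters` is my own, hypothetical choice.

```python
from fractions import Fraction


def expected_characters(p: Fraction, n: int = 7) -> Fraction:
    """Expected number of characters typed until n successes in a row.

    First-step analysis on the S/F model: state i = number of consecutive
    successes so far. From state i < n, a success (prob p) moves to i + 1
    and a failure (prob 1 - p) resets to 0. We write E_i = a_i + b_i * E_0
    and solve backwards from E_n = 0.
    """
    a, b = Fraction(0), Fraction(0)  # E_n = 0 + 0 * E_0
    for _ in range(n):
        # E_i = 1 + p * E_{i+1} + (1 - p) * E_0
        a, b = 1 + p * a, p * b + (1 - p)
    # Now E_0 = a + b * E_0, so E_0 = a / (1 - b)
    return a / (1 - b)


p = Fraction(1, 26)
print(expected_characters(p))  # 8353082582
```

Because everything is exact rational arithmetic, the result can be compared directly with the closed-form expectation derived from the generating function.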

Let $X$ be the number of characters typed until "COVFEFE" appears, including its final seven characters. To find the expected value of $X$, we need the probability generating function of $X$. To find the generating function, look at the infinite sum over all possible ways to get seven successes in a row for the first time on the last seven tries:

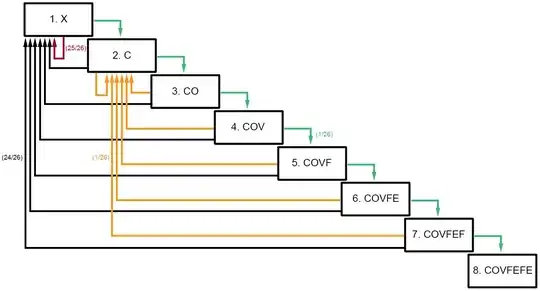

$$S^{7} + FS^{7} + FFS^{7} + SFS^{7} + SSFS^{7} + FSFS^{7} + SFFS^{7} + ...$$

Now, if we were to set $S = pz$ and $F = (1-p)z$, with $p$ the probability of typing the correct next character (and assuming independence), note that we have actually found the probability generating function $G(z)$!

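We can rewrite the above expression as a sum and simplify it further using the geometric series formula $\sum_{k=0}^{\infty} x^k = \frac{1}{1-x}$ (valid for $|x| < 1$). Every such sequence splits uniquely into blocks $S^jF$ with $0 \le j \le 6$, followed by the final $S^7$: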

$$\sum_{k=0}^{\infty} (F + SF + S^2F + S^3F + S^4F + S^5F + S^6F)^k S^7 = \frac{S^7}{1 - (F + SF + S^2F + S^3F + S^4F + S^5F + S^6F)}$$
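To sanity-check the decomposition, the sketch below (with hypothetical helper names `series_coefficients` and `brute_force`) expands $S^7/(1 - B)$, where $B = F + SF + \dots + S^6F$, with $S = pz$ and $F = (1-p)z$ as a power series, using $G(z)\,(1 - B(z)) = p^7z^7$. It compares each coefficient with a brute-force sum over all $S/F$ strings of that length whose first run of seven $S$ ends at the last character. It only checks lengths up to 14, so it is evidence rather than a proof.

```python
from fractions import Fraction
from itertools import product

RUN = 7  # length of the success run ("COVFEFE" has 7 letters)


def series_coefficients(p: Fraction, n_max: int) -> list[Fraction]:
    """Coefficients g_0..g_{n_max} of G(z) = S^7 / (1 - (F + SF + ... + S^6 F))
    with S = p z and F = (1 - p) z, from G * (1 - B) = p^7 z^7."""
    # b[j] is the coefficient of z^j in B(z) = sum_{k=0}^{6} (p z)^k (1 - p) z
    b = [Fraction(0)] + [p**(j - 1) * (1 - p) for j in range(1, RUN + 1)]
    g = []
    for n in range(n_max + 1):
        coeff = p**RUN if n == RUN else Fraction(0)
        coeff += sum(b[j] * g[n - j] for j in range(1, min(n, RUN) + 1))
        g.append(coeff)
    return g


def brute_force(p: Fraction, n: int) -> Fraction:
    """P(X = n): sum over S/F strings of length n whose first run of 7 S ends at n."""
    total = Fraction(0)
    target = "S" * RUN
    for letters in product("SF", repeat=n):
        word = "".join(letters)
        if word.endswith(target) and target not in word[:-1]:
            total += p**word.count("S") * (1 - p)**word.count("F")
    return total


p = Fraction(1, 26)
g = series_coefficients(p, 14)
assert all(g[n] == brute_force(p, n) for n in range(15))
```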

If we now substitute $S = pz$ and $F = (1-p)z$, we find:

$$G(z) = \frac{p^7z^7}{1-\left[(1-p)z + (pz)(1-p)z + (pz)^2(1-p)z + (pz)^3(1-p)z + (pz)^4(1-p)z + (pz)^5(1-p)z + (pz)^6(1-p)z\right]}$$
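The denominator can be tidied up with the finite geometric sum $\sum_{k=0}^{6}(pz)^k = \frac{1-(pz)^7}{1-pz}$, which gives a more compact form of $G(z)$ (my own simplification, worth double-checking):

$$1-(1-p)z\sum_{k=0}^{6}(pz)^k = \frac{(1-pz)-(1-p)z\left(1-p^7z^7\right)}{1-pz} = \frac{1-z+(1-p)p^7z^8}{1-pz},$$

$$G(z) = \frac{p^7z^7(1-pz)}{1-z+(1-p)p^7z^8}.$$

As a check, $G(1) = \frac{p^7(1-p)}{(1-p)p^7} = 1$, as it must be for a probability generating function.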

At this point we need to find $\lim_{z \to 1-} G'(z)$, which is tedious to do by hand, so I used Wolfram Alpha. The result:

$$E[X] = \frac{1+p+p^2+p^3+p^4+p^5+p^6}{p^7}$$
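The limit can also be done by hand; here is a sketch using the quotient rule. Write $G = N/D$ with

$$N(z) = p^7z^7, \qquad D(z) = 1-(1-p)\sum_{k=0}^{6}p^kz^{k+1}.$$

Both are polynomials and $D(1) = 1-(1-p)(1+p+\dots+p^6) = 1-(1-p^7) = p^7 \neq 0$, so $G$ is smooth at $z = 1$ and the one-sided limit is simply $G'(1)$:

$$G'(1) = \frac{N'(1)D(1)-N(1)D'(1)}{D(1)^2}, \qquad N'(1) = 7p^7, \qquad D'(1) = -(1-p)\sum_{k=0}^{6}(k+1)p^k.$$

The last sum telescopes: $(1-p)\sum_{k=0}^{6}(k+1)p^k = \sum_{k=0}^{6}p^k - 7p^7$. Substituting,

$$G'(1) = \frac{7p^{14} + p^7\left(\sum_{k=0}^{6}p^k - 7p^7\right)}{p^{14}} = \frac{1+p+p^2+p^3+p^4+p^5+p^6}{p^7},$$

which agrees with the Wolfram Alpha result.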

Assuming all 26 letters are equally likely, the probability of typing the correct next character is $p = \frac{1}{26}$. Substituting this, the expected number of characters typed until "COVFEFE" appears is $E[X] = 8353082582$. At one character per second, this is also the expected number of seconds, which is the final answer.
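Plugging $p = 1/26$ into the formula with exact rational arithmetic (Python's `fractions.Fraction`) confirms the number and shows where it comes from: with $p = 1/26$, $(1+p+\dots+p^6)/p^7 = 26 + 26^2 + \dots + 26^7$.

```python
from fractions import Fraction

p = Fraction(1, 26)  # probability of typing the correct next character

# E[X] = (1 + p + ... + p^6) / p^7, evaluated exactly
expected = sum(p**k for k in range(7)) / p**7

# With p = 1/26 this is 26 + 26^2 + ... + 26^7
assert expected == sum(26**k for k in range(1, 8))
print(expected)  # 8353082582
```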

Just for fun, I tested how many characters I could type per minute, and it came to about 300. Mr. Trump says he has pretty big hands, so I'm sure he'll manage 400 CPM. This gives $\frac{8353082582}{400 \cdot 60 \cdot 24 \cdot 365.25}$ years, which is about 39 years and 8 months, not accounting for sleeping, golf, and other responsibilities.
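The time conversion as a small sketch; 400 CPM is the assumed typing speed from the paragraph above, and a year is taken as 365.25 days:

```python
TOTAL_CHARS = 8353082582          # E[X] from the formula above
CPM = 400                         # assumed typing speed, characters per minute
MINUTES_PER_YEAR = 60 * 24 * 365.25

years = TOTAL_CHARS / (CPM * MINUTES_PER_YEAR)
whole_years = int(years)
months = (years - whole_years) * 12
print(f"{years:.3f} years = {whole_years} years and {months:.1f} months")
# 39.704 years = 39 years and 8.4 months
```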

Real answer: the probability of drawing those $7$ letters one after another is $p=\left(\dfrac{1}{26}\right)^7$. It is a geometric random variable, so the expectation is $\dfrac{1}{p}=26^7$ letters, or equivalently $26^7$ seconds, i.e. approximately $255$ years.
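To put the comment's figure next to the answer's, a quick computation at one letter per second with a 365.25-day year:

```python
SECONDS_PER_YEAR = 60 * 60 * 24 * 365.25

geometric = 26**7                              # comment's answer: 1/p with p = 26^-7
sf_model = sum(26**k for k in range(1, 8))     # answer's E[X] with p = 1/26

print(geometric)                               # 8031810176
print(round(geometric / SECONDS_PER_YEAR, 1))  # 254.5 (years)
print(sf_model - geometric)                    # 321272406
```

The gap between the two figures is exactly $26 + 26^2 + \dots + 26^6$ characters.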

– Galc127 Dec 04 '17 at 07:30