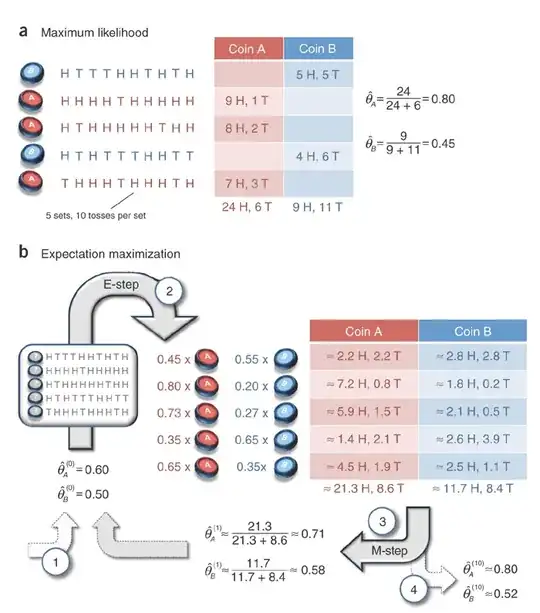

I'm reading a tutorial on expectation maximization that gives an example of a coin-flipping experiment (the description is at http://www.nature.com/nbt/journal/v26/n8/full/nbt1406.html?pagewanted=all). Could you please help me understand where the probabilities in step 2 of the process (i.e., the values in the middle of part b of the illustration above) come from? Thank you.

1. EM starts with an initial guess of the parameters.
2. In the E-step, a probability distribution over possible completions is computed using the current parameters. The counts shown in the table are the expected numbers of heads and tails according to this distribution.
3. In the M-step, new parameters are determined using the current completions.
4. After several repetitions of the E-step and M-step, the algorithm converges.
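The step 2 probabilities can be reproduced with a short sketch. It assumes the figure shows five sets of 10 tosses with 5, 9, 8, 4 and 7 heads, initial guesses θ_A = 0.60 and θ_B = 0.50, and that each set is equally likely to have come from either coin; these inputs are assumed to match the figure and are not stated in the text above. Under those assumptions, the probability that coin A produced a set with h heads is θ_A^h (1 − θ_A)^(10 − h) divided by the sum of that term and θ_B^h (1 − θ_B)^(10 − h). The binomial coefficient appears in both likelihoods and cancels. The function names below are illustrative only.

```python
# Assumed data: heads observed in each of five sets of 10 tosses (read from the figure).
HEADS = [5, 9, 8, 4, 7]
TOSSES = 10


def likelihood(theta, heads, tosses=TOSSES):
    """Probability of one particular toss sequence with `heads` heads under bias theta.
    The binomial coefficient is left out because it cancels in the E-step ratio."""
    return theta ** heads * (1 - theta) ** (tosses - heads)


def e_step(theta_a, theta_b, heads_list=HEADS):
    """Return P(coin A | set) for each set, assuming a 50/50 prior on which coin was used."""
    resp = []
    for h in heads_list:
        la = likelihood(theta_a, h)
        lb = likelihood(theta_b, h)
        resp.append(la / (la + lb))
    return resp


def m_step(resp, heads_list=HEADS, tosses=TOSSES):
    """Re-estimate both biases from expected head/tail counts weighted by the E-step probabilities."""
    heads_a = sum(r * h for r, h in zip(resp, heads_list))
    tails_a = sum(r * (tosses - h) for r, h in zip(resp, heads_list))
    heads_b = sum((1 - r) * h for r, h in zip(resp, heads_list))
    tails_b = sum((1 - r) * (tosses - h) for r, h in zip(resp, heads_list))
    return heads_a / (heads_a + tails_a), heads_b / (heads_b + tails_b)


if __name__ == "__main__":
    theta_a, theta_b = 0.60, 0.50  # assumed initial guesses
    resp = e_step(theta_a, theta_b)
    print("P(coin A | set):", [round(r, 2) for r in resp])  # → [0.45, 0.8, 0.73, 0.35, 0.65]
    for _ in range(10):  # alternate E-step and M-step
        resp = e_step(theta_a, theta_b)
        theta_a, theta_b = m_step(resp)
    print(f"theta_A = {theta_a:.2f}, theta_B = {theta_b:.2f}")
```

With these assumed inputs, the first E-step gives roughly 0.45, 0.80, 0.73, 0.35 and 0.65 for coin A (and one minus each value for coin B). Weighting each set's heads and tails by these probabilities and re-estimating moves the parameters to about θ_A ≈ 0.71 and θ_B ≈ 0.58 after one M-step.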