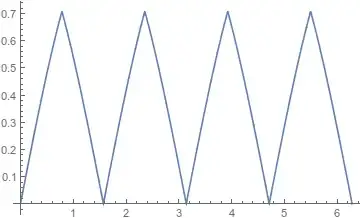

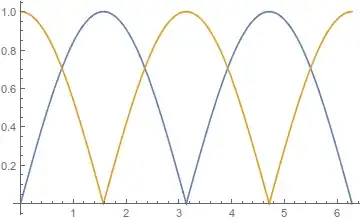

Consider a matrix-valued function $A(t):\mathbb{R}_+ \to \mathbb{R}^{n \times n}$. Suppose that each entry $a_{ij}(t)$ is a smooth function with bounded derivative, e.g. $a_{ij}(t)=A_{ij}\sin(\omega_{ij} t)$. Define $f(t)$ as the smallest modulus among the eigenvalues of $A(t)$: $$f(t) = \min_i |\lambda_i\{A(t)\}|.$$
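For a concrete feel, here is a minimal Python sketch that evaluates $f(t)$ for a $2\times 2$ instance of $a_{ij}(t)=A_{ij}\sin(\omega_{ij}t)$. The amplitudes `AMP`, the frequencies `OMEGA` and the helper names are hypothetical choices for illustration only. For $n=2$ the eigenvalues follow from the characteristic polynomial $\lambda^2-\operatorname{tr}(A)\,\lambda+\det A=0$, so no linear-algebra library is needed.

```python
import cmath
import math

# Hypothetical 2x2 example of a_ij(t) = A_ij * sin(omega_ij * t);
# these amplitudes and frequencies are illustrative assumptions.
AMP = [[1.0, 0.5], [-0.5, 2.0]]
OMEGA = [[1.0, 3.0], [2.0, 0.5]]


def matrix_at(t):
    """Return A(t) as a nested list for the 2x2 example."""
    return [[AMP[i][j] * math.sin(OMEGA[i][j] * t) for j in range(2)]
            for i in range(2)]


def eigenvalues_2x2(a):
    """Eigenvalues of a 2x2 matrix as roots of
    lambda^2 - tr(A) * lambda + det(A) = 0 (complex roots allowed)."""
    tr = a[0][0] + a[1][1]
    det = a[0][0] * a[1][1] - a[0][1] * a[1][0]
    disc = cmath.sqrt(tr * tr - 4 * det)
    return (tr + disc) / 2, (tr - disc) / 2


def f(t):
    """Smallest eigenvalue modulus: f(t) = min_i |lambda_i(A(t))|."""
    return min(abs(lam) for lam in eigenvalues_2x2(matrix_at(t)))


if __name__ == "__main__":
    for k in range(6):
        t = 0.5 * k
        print(f"t = {t:.1f}  f(t) = {f(t):.4f}")
```

Sampling $f$ on a fine grid this way makes it easy to look for corners, i.e. points where the eigenvalue attaining the minimum changes.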

Is it true that $f(t)$ is continuously differentiable and has a bounded derivative? If so, how can it be proven, or in which book or paper can it be found?

Update

OK, $f(t)$ is continuous, but probably not differentiable. I have revisited my main problem and see that I can weaken the question: now I only need $f(t)$ to be Lipschitz.
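A toy example may illustrate why differentiability can fail while a Lipschitz bound still holds. The matrix $A(t)=\operatorname{diag}(\sin t,\cos t)$ below is a hypothetical choice, not taken from the question; its eigenvalues are the diagonal entries, so $f(t)=\min(|\sin t|,|\cos t|)$. The sketch checks the one-sided slopes at $t=\pi/4$ and estimates the Lipschitz constant from difference quotients on a grid (a lower bound, not a proof).

```python
import math

# Hypothetical diagonal example A(t) = diag(sin t, cos t): its eigenvalues
# are the diagonal entries, so f(t) = min(|sin t|, |cos t|).


def f(t):
    return min(abs(math.sin(t)), abs(math.cos(t)))


def lipschitz_estimate(func, a, b, n=100_000):
    """Largest difference quotient |func(t_{k+1}) - func(t_k)| / h on a
    uniform grid of [a, b]; a lower bound for the Lipschitz constant."""
    h = (b - a) / n
    values = [func(a + k * h) for k in range(n + 1)]
    return max(abs(values[k + 1] - values[k]) for k in range(n)) / h


if __name__ == "__main__":
    # f has a corner at t = pi/4, where |sin t| and |cos t| cross:
    # the one-sided difference quotients have opposite signs there.
    t0, h = math.pi / 4, 1e-6
    left = (f(t0) - f(t0 - h)) / h
    right = (f(t0 + h) - f(t0)) / h
    print(f"left slope ~ {left:.4f}, right slope ~ {right:.4f}")
    est = lipschitz_estimate(f, 0.0, 2 * math.pi)
    print(f"Lipschitz estimate on [0, 2pi]: {est:.4f}")
```

In this example $f$ is a minimum of two 1-Lipschitz functions, so it is 1-Lipschitz even though it has corners at $\pi/4$ and at the zeros of $\sin t$ and $\cos t$.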

Actually, what I really need is to show that if all eigenvalues of $A(t)$ are nonzero for some $t_0 \in [t_a,t_b]$, then $$\int_{t_a}^{t_b}\left(\det\{A(s)\}\right)^2ds>0.$$ My intention was to use $\left(\det\{A(t)\}\right)^2=\prod_{i=1}^n|\lambda_i\{A(t)\}|^2\ge \left(\min_i |\lambda_i\{A(t)\}|\right)^{2n} = f(t)^{2n}$.
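Spelled out step by step, with eigenvalues counted with algebraic multiplicity, the inequality chain reads:

$$
\begin{aligned}
\left(\det\{A(t)\}\right)^2 &= \left|\det\{A(t)\}\right|^2 && \text{because } A(t) \text{ is real, so } \det\{A(t)\}\in\mathbb{R},\\
&= \prod_{i=1}^n \left|\lambda_i\{A(t)\}\right|^2 && \text{because } \det\{A(t)\}=\prod_{i=1}^n \lambda_i\{A(t)\},\\
&\ge \left(\min_i \left|\lambda_i\{A(t)\}\right|\right)^{2n} = f(t)^{2n} && \text{because each factor is at least } f(t)^2.
\end{aligned}
$$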

Note also that all $a_{ij}(t)$ are bounded.