The following is an excerpt from Bartle and Sherbert's *Introduction to Real Analysis*:

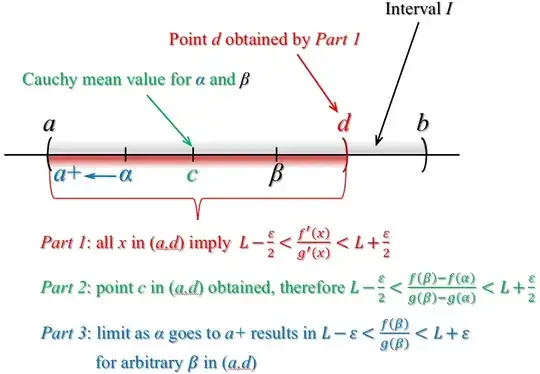

Okay. I am having some trouble following this proof. What is $c$, and why is it in $(a,b)$? What confuses me is that there is no reference to any $\delta$, nor to any $x$ such that $0 < x - a < \delta$. The authors simply write $L - \epsilon \le \frac{f(\beta)}{g(\beta)} \le L + \epsilon$ and claim that the conclusion follows. I suspect they are using $\beta$ in place of $x$, but this still doesn't account for the absence of a $\delta$, or show that $\beta$ satisfies $0 < \beta - a < \delta$.

On second thought, perhaps $\beta$ and $c$ are meant to play the roles of the usual $x$ and $\delta$, respectively. What the authors seem to have shown is that for every $\epsilon > 0$ there exists a $c > 0$ such that if $\beta$ satisfies $a < \beta \le c$, then $\left|\frac{f(\beta)}{g(\beta)} - L\right| \le \epsilon$. Even under that interpretation, why is $c$ (i.e., $\delta$) in $(a,b)$? In fact, if $a$ and $b$ are negative, this doesn't make much sense...
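For reference, this is the standard $\epsilon$-$\delta$ definition I am comparing against. I am assuming the theorem concerns the right-hand limit $x \to a^+$ for functions defined on $(a,b)$, as the notation in the excerpt suggests:

$$
\lim_{x \to a^+} \frac{f(x)}{g(x)} = L
\iff
\forall \epsilon > 0 \;\, \exists \delta > 0 \;\, \forall x \in (a,b):\;
0 < x - a < \delta \implies \left| \frac{f(x)}{g(x)} - L \right| < \epsilon .
$$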

EDIT:

Okay, I think I follow what you, @Jack, are saying. However, one last thing is giving me trouble. Just before equation (2), the authors claim that, by the Cauchy Mean Value Theorem, there exists a specific $u \in (\alpha, \beta)$ such that equation (2) holds. To validly substitute this $u$ into the inequality below (2) and obtain inequality (3), we need that specific $u$ to lie in $(a,c)$, but this has not been demonstrated. All we have is that if $u \in (a,c)$, then $L - \epsilon < \frac{f'(u)}{g'(u)} < L + \epsilon$. How do we know that the specific $u$ guaranteed by the Cauchy Mean Value Theorem falls in the interval $(a,c)$?
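To make the Cauchy Mean Value Theorem step concrete, here is a small numerical sketch. The functions $f(x) = x^3$, $g(x) = x^2$ and the endpoints $\alpha = 1$, $\beta = 2$ are hypothetical choices for illustration, not taken from the book. The helper `cauchy_mvt_point` (also hypothetical) uses bisection to locate a point $u$ with $(f(\beta) - f(\alpha))\,g'(u) = (g(\beta) - g(\alpha))\,f'(u)$; the theorem itself guarantees such a $u$ strictly between $\alpha$ and $\beta$, while the bisection additionally assumes the auxiliary function changes sign on $[\alpha, \beta]$.

```python
def cauchy_mvt_point(f, g, df, dg, alpha, beta, tol=1e-12):
    """Locate u in (alpha, beta) with
    (f(beta) - f(alpha)) * dg(u) == (g(beta) - g(alpha)) * df(u)
    by bisection. Assumes h(u) below has opposite signs at alpha and beta,
    an extra condition the Cauchy MVT itself does not require."""
    delta_f = f(beta) - f(alpha)
    delta_g = g(beta) - g(alpha)

    def h(u):
        # Zero exactly where the Cauchy MVT identity holds.
        return delta_g * df(u) - delta_f * dg(u)

    lo, hi = alpha, beta
    if h(lo) * h(hi) >= 0:
        raise ValueError("h must take opposite signs at alpha and beta")
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if h(lo) * h(mid) <= 0:
            hi = mid  # a root lies in [lo, mid]
        else:
            lo = mid  # a root lies in [mid, hi]
    return (lo + hi) / 2


# Hypothetical example functions (not from the book).
def f(x):
    return x ** 3


def g(x):
    return x ** 2


def df(x):
    return 3 * x ** 2


def dg(x):
    return 2 * x


alpha, beta = 1.0, 2.0
u = cauchy_mvt_point(f, g, df, dg, alpha, beta)
print(round(u, 6))       # 1.555556, i.e. approximately 14/9
print(alpha < u < beta)  # True
```

Solving $\frac{3u^2}{2u} = \frac{8 - 1}{4 - 1} = \frac{7}{3}$ by hand gives $u = 14/9$, which indeed lies strictly between $\alpha$ and $\beta$.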

Also, it seems we don't merely want to assume that $\beta \in (a,b)$, but rather that $\beta$ is arbitrary in $(a,c)$, right? Or maybe it doesn't matter: if we have "$\beta \in (a,b) \implies \left|\frac{f(\beta)}{g(\beta)} - L\right| < \epsilon$", then we clearly also have "$\beta \in (a,c) \subseteq (a,b) \implies \left|\frac{f(\beta)}{g(\beta)} - L\right| < \epsilon$". Am I thinking about this correctly?
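Written as a single chain, and assuming $c \le b$ (which holds if $c \in (a,b)$ as in the excerpt), the implication I have in mind reads:

$$
\beta \in (a,c)
\implies a < \beta < c \le b
\implies \beta \in (a,b)
\implies \left| \frac{f(\beta)}{g(\beta)} - L \right| < \epsilon .
$$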