I simply would use the Gâteaux-Derivative. That derivative is the natural expansion of the 1D Derivative

$$\frac{d}{dx}f(x) = \lim_{δx→0}f(x+δx)$$to higher dimensions.

Since your function maps $f:ℝ^n→ℝ$ we need an arbitrary direction $δx∈ℝ^n$, and a small increment $ε>0$. Using that "$|_{ε=0}$ formulation the Gâteaux-Derivative for your function reads

\begin{align*}

d(\|Ax-b\|²;[x,δx]) = (\frac{d}{dε}\|A(x+εδx) - b\|²)\big|_{ε=0}

\end{align*}

First it is

\begin{align*}

\frac{d}{dε}\|A(x+εδx) - b\|² =& \frac{d}{dε}[(A(x+εδx) - b, A(x+εδx) - b)] \\

=&\frac{d}{dε}[\{(Ax, Ax)+ (Ax,Aεδx) + (Ax, -b)\} \\

&+ \{(Aεδx, Ax) + (Aεδx, Aεδx) + (Aεδx, -b)\} \\

&+ \{(-b, Ax) + (-b, Aεδx) + (-b, -b)\} ] \\

=¹&\frac{d}{dε}[\{\|Ax\|²+ \|b\|²+ 2(Ax, -b)\} \\

&+ ε\{2(Ax,Aδx) + 2(-b, Aδx)\} \\

&+ ε²\|Aδx\|² ]\\

=& \{2(Ax,Aδx) + 2(-b, Aδx)\} + 2ε\|Aδx\|².

\end{align*}

¹Sorting by powers of ε.

Setting ε=0, yields

\begin{align*}

(\frac{d}{dε}\|A(x+εδx) - b\|²)\big|_{ε=0} &= 2(Ax,Aδx) + 2(-b, Aδx) \\

&= 2(Ax-b, Aδx)= (2A^\top[Ax-b], δx).

\end{align*}

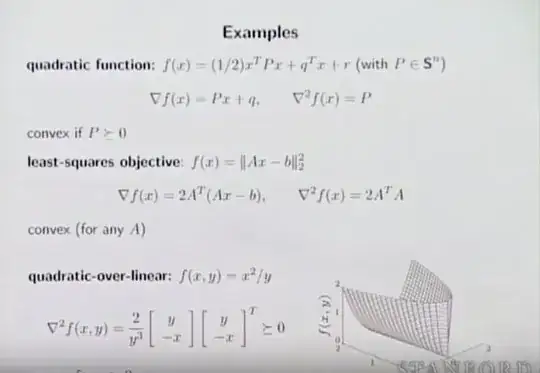

Hence, the derivative is $2A^\top[Ax-b]$.

That is because, $∇f = (∂_{e_1}f, ∂_{e_2}f, …)^T$. So replacing δx with $e_i$ gives: $$∂_{e_i} = {2A^\top[Ax-b]}_i.$$

Higher derivatives can be calculated in the same way:

\begin{align*}

\frac{d}{dε}(2A^\top[A(x+δxε-b])\big|_{ε=0} &= (2A^\top Aδx)\big|_{ε=0} \\

&=2A^\top Aδx

\end{align*}

$⇒∇^2f(x) = 2A^\top A.$