New question: Based on this answer by Arturo Magidin, it appears that the initial eigenvector of each chain is always taken from whatever independent ordinary eigenvectors exist to begin with. So now I'm confused about how one knows that all of the independent generalized eigenvectors for a repeated eigenvalue will lie in the chains of the independent ordinary eigenvectors for that eigenvalue. That is to say, my question is exactly the proof of Theorem 7, stated below. Why does one know that an $n\times n$ matrix has $n$ linearly independent generalized eigenvectors, and that the chains of the independent ordinary eigenvectors for an eigenvalue contain exactly as many vectors as the number of times that eigenvalue is repeated as a root?
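
For what the counting claim looks like on a concrete matrix, here is a small sketch (an illustration, not a proof). It assumes the usual definition that $x$ is a generalized eigenvector of type $k$ for $\lambda$ when $(A-\lambda I)^k x = 0$ but $(A-\lambda I)^{k-1} x \neq 0$, and uses the standard rank formula: the number of type-$k$ vectors in a canonical basis is $\operatorname{rank}(A-\lambda I)^{k-1} - \operatorname{rank}(A-\lambda I)^{k}$. The first count is the number of independent ordinary eigenvectors (one per chain), and the counts add up to $n$ minus the rank at which the powers stabilise, i.e. the dimension of the generalized eigenspace, which is known to equal the algebraic multiplicity. The matrix `A` and the helper names (`matmul`, `rank`, `type_counts`) are hypothetical, chosen for this example.

```python
from fractions import Fraction


def matmul(A, B):
    """Product of two matrices stored as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def rank(M):
    """Exact rank via Gaussian elimination over the rationals."""
    rows = [[Fraction(x) for x in row] for row in M]
    r = 0
    for c in range(len(rows[0])):
        pivot = next((i for i in range(r, len(rows)) if rows[i][c] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for i in range(len(rows)):
            if i != r and rows[i][c] != 0:
                f = rows[i][c] / rows[r][c]
                rows[i] = [a - f * b for a, b in zip(rows[i], rows[r])]
        r += 1
    return r


def type_counts(A, lam):
    """counts[k-1] = rank(N^(k-1)) - rank(N^k) with N = A - lam*I, i.e. the
    number of generalized eigenvectors of type k for lam. Stops as soon as
    the ranks stop dropping (no vectors of higher type exist)."""
    n = len(A)
    N = [[A[i][j] - (lam if i == j else 0) for j in range(n)] for i in range(n)]
    power = [[int(i == j) for j in range(n)] for i in range(n)]  # N^0 = I
    counts, prev_rank = [], n
    while True:
        power = matmul(power, N)
        r = rank(power)
        if r == prev_rank:
            return counts
        counts.append(prev_rank - r)
        prev_rank = r


# Hypothetical example: eigenvalue 2 repeated 4 times.
A = [[2, 1, 1, 0],
     [0, 2, 1, 0],
     [0, 0, 2, 0],
     [0, 0, 0, 2]]
counts = type_counts(A, 2)
print(counts, sum(counts))  # [2, 1, 1] 4
```

Here there are 2 independent ordinary eigenvectors, so 2 chains; one chain reaches type 3 and the other stops at type 1, and together they hold $2+1+1 = 4$ vectors, matching the multiplicity of the eigenvalue 2.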

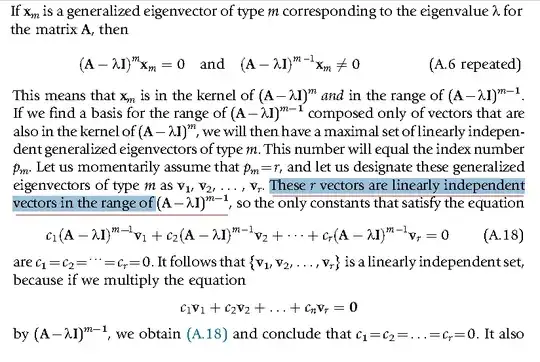

Old question / optional background that can be skipped: This is from Bronson et al., *Linear Algebra*, p. 396, explaining the Jordan canonical form. Apart from the many typos introduced by copying and pasting LaTeX into the examples of this third edition, the book's explanation of the Jordan canonical form finally made the concept make sense to me, except for one part: why are the generalized eigenvectors of type m linearly independent of each other? At first I thought the authors were using the fact that ordinary eigenvectors belonging to distinct eigenvalues are linearly independent, but then I remembered that this doesn't cover the eigenvectors used to form the Jordan canonical form, since the whole point is to allow repeated eigenvalues of arbitrary (not just Hermitian) matrices. Here is the text quoted:

Please note, I understand why the vectors in the same chain are linearly independent and why the span of a chain is invariant. My question is why the generalized eigenvectors of type m at the same level of separate chains are linearly independent of each other. Those chains can (and sometimes do) come from the same repeated eigenvalue, so converting the type-m vectors into ordinary eigenvectors (by applying $(A-\lambda I)^{m-1}$) does not prove they are independent by the same reasoning used for eigenvectors of distinct eigenvalues.
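
A small numerical check of where same-level independence can come from (one example, not a proof). If $v_1,\dots,v_p$ are type-$m$ vectors with $\sum c_i v_i = 0$, applying $(A-\lambda I)^{m-1}$ gives $\sum c_i (A-\lambda I)^{m-1} v_i = 0$; so whenever the ordinary eigenvectors at the bottoms of the chains are linearly independent, every $c_i$ is zero, and no appeal to distinct eigenvalues is needed. The sketch below uses a made-up $N = A-\lambda I$ with $N^2 = 0$, so both chains belong to the same eigenvalue. The "bad" choice shows why the bottoms matter: two independent type-2 vectors can share the same bottom eigenvector, and then the chains together are dependent. The names `rank`, `apply`, `chain`, `check` and the matrix `N` are hypothetical, not from the book.

```python
from fractions import Fraction


def rank(vectors):
    """Exact rank of a list of equal-length vectors (Gaussian elimination over Q)."""
    rows = [[Fraction(x) for x in v] for v in vectors]
    r = 0
    for c in range(len(rows[0])):
        pivot = next((i for i in range(r, len(rows)) if rows[i][c] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for i in range(len(rows)):
            if i != r and rows[i][c] != 0:
                f = rows[i][c] / rows[r][c]
                rows[i] = [a - f * b for a, b in zip(rows[i], rows[r])]
        r += 1
    return r


def apply(M, v):
    """Matrix-vector product M v."""
    return [sum(M[i][k] * v[k] for k in range(len(v))) for i in range(len(M))]


def chain(N, top, m):
    """Chain grown downward from a type-m vector: [N^(m-1) top, ..., N top, top]."""
    vecs = [top]
    for _ in range(m - 1):
        vecs.append(apply(N, vecs[-1]))
    return vecs[::-1]  # ordinary eigenvector (type 1) first


def check(N, tops, m):
    """Ranks of (the type-m tops, their bottom eigenvectors, all chain vectors)."""
    chains = [chain(N, t, m) for t in tops]
    bottoms = [c[0] for c in chains]  # N^(m-1) v for each top v
    everything = [v for c in chains for v in c]
    return rank(tops), rank(bottoms), rank(everything)


# Hypothetical N = A - lam*I with N^2 = 0: two chains of length 2, same eigenvalue.
N = [[0, 1, 0, 1],
     [0, 0, 0, 0],
     [0, 0, 0, 1],
     [0, 0, 0, 0]]
good = [[0, 1, 0, 0], [0, 0, 0, 1]]  # bottoms e1 -> e0 and e3 -> e0 + e2
bad = [[0, 1, 0, 0], [1, 1, 0, 0]]   # both tops map to the same bottom e0

print(check(N, good, 2))  # (2, 2, 4)
print(check(N, bad, 2))   # (2, 1, 2)
```

In both cases the two type-2 vectors are independent, but only the "good" choice, whose bottom eigenvectors are independent, yields four independent vectors in total.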

Bonus points: I'd appreciate a reference, a link, or a short intuition for the unexplained theorem immediately preceding this in the text (p. 395). Surprisingly, searching Stack Exchange with the wording below didn't turn up a relevant proof:

> The following result, the proof of which is beyond the scope of this book, summarizes the relevant theory.
>
> **Theorem 7:** Every $n\times n$ matrix possesses a canonical basis in $\Re^n$.

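Here "canonical basis" means a basis made up of chains of generalized eigenvectors, i.e. a basis with respect to which the matrix takes its Jordan normal form.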
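
To make "canonical basis" concrete (an illustration of Theorem 7 on one matrix, not a proof of it): for the hypothetical $4\times 4$ matrix below with the single eigenvalue 2, one chain grown downward from a type-3 vector plus one more ordinary eigenvector gives four vectors. Using them as the columns of $P$, the check confirms $\det P \neq 0$ and $AP = PJ$ with $J$ in Jordan form, i.e. $P^{-1}AP = J$. The vectors `x3` and `y1` were picked by hand for this example, and the helper names are hypothetical.

```python
def matmul(A, B):
    """Product of two matrices stored as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def apply(M, v):
    """Matrix-vector product M v."""
    return [sum(M[i][k] * v[k] for k in range(len(v))) for i in range(len(M))]


def det(M):
    """Determinant by cofactor expansion along the first row (fine for small n)."""
    if len(M) == 1:
        return M[0][0]
    return sum((-1) ** j * M[0][j] * det([row[:j] + row[j + 1:] for row in M[1:]])
               for j in range(len(M)))


# Hypothetical example: single eigenvalue 2, repeated 4 times.
A = [[2, 1, 1, 0],
     [0, 2, 1, 0],
     [0, 0, 2, 0],
     [0, 0, 0, 2]]
N = [[A[i][j] - (2 if i == j else 0) for j in range(4)] for i in range(4)]

x3 = [0, 0, 1, 0]   # type 3: N^2 x3 != 0 but N^3 x3 = 0
x2 = apply(N, x3)   # type 2
x1 = apply(N, x2)   # type 1: an ordinary eigenvector
y1 = [0, 0, 0, 1]   # a second ordinary eigenvector, independent of x1

P = [[v[i] for v in (x1, x2, x3, y1)] for i in range(4)]  # basis vectors as columns
J = [[2, 1, 0, 0],
     [0, 2, 1, 0],
     [0, 0, 2, 0],
     [0, 0, 0, 2]]

print(det(P))                         # 1, so the four vectors form a basis
print(matmul(A, P) == matmul(P, J))   # True, i.e. P^-1 A P = J
```

Each chain contributes one Jordan block: the length-3 chain gives the $3\times 3$ block and the lone eigenvector `y1` gives the $1\times 1$ block.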