We can do this by using the definition of the cross product as a bilinear map $V \times V \to V$. We also take as a basic property that it is orthogonal to each of its arguments,

$$ a \cdot (a \times b) = b \cdot (a \times b) = 0 \tag{Orth} $$

We need another property to fix the scaling; the easiest one is that the magnitude of $a \times b$ is the area of the parallelogram spanned by $a$ and $b$. This effectively means that

$$ (a \times b) \cdot (a \times b) = (a \cdot a)(b \cdot b) - (a \cdot b)^2 \tag{Area} $$

(If this looks opaque, you might prefer to start with perpendicular $a$ and $b$, and then extend using bilinearity.)
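To spell out the step from the area statement to (Area): write $\theta$ for the angle between $a$ and $b$ (a symbol used only in this remark), so that the parallelogram has area $|a||b|\sin\theta$ and $a \cdot b = |a||b|\cos\theta$. Then

$$ (a \times b) \cdot (a \times b) = |a|^2|b|^2\sin^2\theta = |a|^2|b|^2(1 - \cos^2\theta) = (a \cdot a)(b \cdot b) - (a \cdot b)^2 . $$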

(Area) with $b = a$, together with $v \cdot v = 0 \implies v = 0$, forces

$$a \times a = 0, \tag{Alt} $$

(alternating), and expanding $(a+b) \times (a+b)$ using this implies that

$$a \times b = - b \times a : \tag{AntiSym} $$

the cross product must be antisymmetric.
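Written out, both steps are short. Setting $b = a$ in (Area) gives

$$ (a \times a) \cdot (a \times a) = (a \cdot a)(a \cdot a) - (a \cdot a)^2 = 0 , $$

so $a \times a = 0$, and then bilinearity together with (Alt) gives

$$ 0 = (a+b) \times (a+b) = a \times a + a \times b + b \times a + b \times b = a \times b + b \times a . $$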

The scalar triple product is $[a,b,c] = a \cdot (b \times c)$. It is trilinear since both products are bilinear, and it is also zero when two arguments are equal, by (Orth) and (Alt):

$$ [a,a,b] = [a,b,a] = [b,a,a] = 0 $$

Using linearity on $[a+b,a+b,c]$ further implies that $[a,b,c] = -[b,a,c]$, and combining this with the antisymmetry in the last two arguments inherited from $\times$, we find that

$$ [a,b,c] = [b,c,a] = [c,a,b] = -[b,a,c] = -[a,c,b] = -[c,b,a] , $$

and in particular,

$$ a \cdot (b \times c) = (a \times b) \cdot c : $$

we can swap the position of the dot and the cross.
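For instance, the chain of equalities can be checked one swap at a time. Expanding by trilinearity and dropping the terms with a repeated argument,

$$ 0 = [a+b,a+b,c] = [a,a,c] + [a,b,c] + [b,a,c] + [b,b,c] = [a,b,c] + [b,a,c] , $$

and two swaps in succession give a cyclic shift:

$$ [a,b,c] = -[b,a,c] = [b,c,a] . $$

The dot-cross swap then reads $a \cdot (b \times c) = [a,b,c] = [c,a,b] = c \cdot (a \times b) = (a \times b) \cdot c$, using the symmetry of the dot product in the last step.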

We can now derive a restricted form of the triple product identity: using linearity on (Area), we have

$$ \begin{aligned}
0 &= ((a + b) \times c) \cdot ((a+b) \times c) + ((a+b) \cdot c)^2 - ((a+b) \cdot (a+b))(c \cdot c) \\
&= \dotsb = 0 + 0 + 2\bigl( (a \times c) \cdot (b \times c) + (a \cdot c)(b \cdot c) - (a \cdot b)(c \cdot c) \bigr) .
\end{aligned} $$
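The elided expansion can be organised as follows, using the shorthand $Q(x) = (x \times c) \cdot (x \times c) + (x \cdot c)^2 - (x \cdot x)(c \cdot c)$ (notation introduced only for this remark), so that (Area) says $Q(x) = 0$ for every $x$. By bilinearity and the symmetry of the dot product,

$$ Q(a+b) = Q(a) + Q(b) + 2\bigl( (a \times c) \cdot (b \times c) + (a \cdot c)(b \cdot c) - (a \cdot b)(c \cdot c) \bigr) , $$

and since $Q(a+b) = Q(a) = Q(b) = 0$, the mixed term must vanish on its own; the overall factor of $2$ can be divided out.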

Switching the dot and the cross in the first term, we find that

$$ 0 = a \cdot ( c \times (b \times c) + (b \cdot c)c - (c \cdot c)b ) $$

But $a$ is arbitrary here, so the vector in parentheses must vanish, and flipping the inner cross product with (AntiSym) gives

$$ c \times (c \times b) = (b \cdot c)c - (c \cdot c)b . \tag{R} $$
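Two quick special cases make (R) plausible. For $b = c$, the left side vanishes by (Alt) and the right side is $(c \cdot c)c - (c \cdot c)c = 0$. For $b \cdot c = 0$, it reduces to

$$ c \times (c \times b) = -(c \cdot c)b , $$

so crossing twice with $c$ reverses a vector perpendicular to $c$ and scales it by $c \cdot c$.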

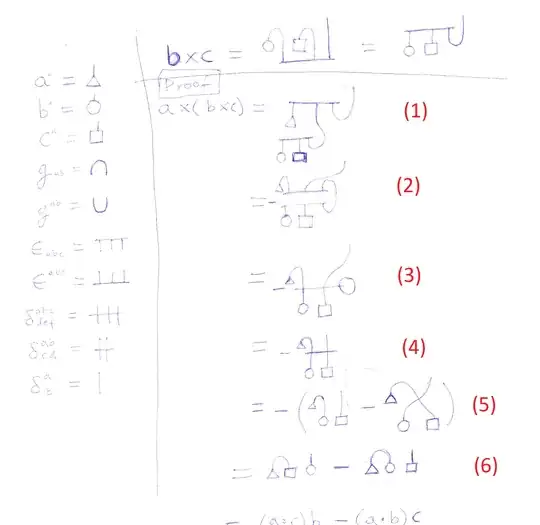

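We can derive a couple more identities that are true in general, but now we can cheat and use that we are in three dimensions: if $b$ and $c$ are linearly independent, then $b$, $c$ and $b \times c$ form a basis, so we can expand $a = \lambda b + \mu c + \nu (b \times c)$. (If $b$ and $c$ are linearly dependent, both sides of the final identity vanish.) The $\nu$ term drops out of $a \times (b \times c)$ because $(b \times c) \times (b \times c) = 0$ by (Alt). Then using linearity, (R) and linearity again,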

$$ \begin{aligned}
a \times (b \times c) &= \lambda (b \times (b \times c)) + \mu ( c \times (b \times c) ) \\
&= \lambda( (c \cdot b)b - (b \cdot b)c ) + \mu ( (c \cdot c)b - (b \cdot c)c ) \\
&= ((\lambda b + \mu c) \cdot c)b - ((\lambda b + \mu c) \cdot b)c .
\end{aligned} $$

Finally, we can add the $\nu (b \times c)$ part back into both dot products, since it is orthogonal to $b$ and $c$, which gives

$$ a \times (b \times c) = (a \cdot c)b - (a \cdot b)c $$

as expected.
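As a consistency check, setting $a = c$ in the final identity should recover the restricted form (R):

$$ c \times (b \times c) = (c \cdot c)b - (c \cdot b)c , $$

and applying (AntiSym) to the inner product turns this into $c \times (c \times b) = (b \cdot c)c - (c \cdot c)b$, which is exactly (R).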