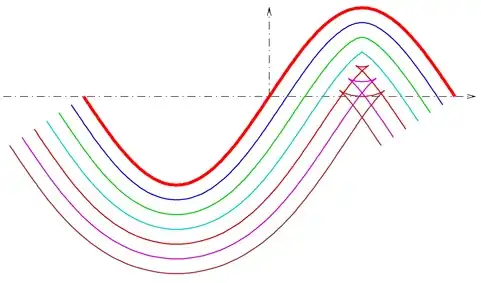

When you compute offset curves (also called parallel curves) by moving a fixed distance along the normals of a given curve, the resulting curve self-intersects wherever the offset distance exceeds the radius of curvature on the concave side (see the figure).

I am looking for a practical way to detect and remove the inner "pockets" that appear. The output should be a continuous curve with the self-intersection replaced by a corner point.

My curves are actually cubic arcs, but I flatten them into discrete points, so the curve can be treated as a smooth, finely sampled polyline. In a variant of the question, the offset distance also varies along the curve.
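As a minimal sketch of the discrete offset itself (not the pocket removal), assuming the flattened curve is an open list of `(x, y)` vertices with no repeated consecutive points, each vertex can be moved along a normal estimated from its two neighbours. The name `offset_polyline` and the convention that positive distances go to the left of the direction of travel are illustrative choices, not part of the question; passing one distance per vertex covers the variable-offset variant.

```python
import math

def offset_polyline(points, dist):
    """Offset each vertex of an open polyline along an estimated unit normal.

    points: list of (x, y) tuples, at least 2 vertices, no repeated consecutive points.
    dist:   a single number (constant offset) or one distance per vertex
            (variable offset). Positive values go to the LEFT of the direction
            of travel (an assumed convention).
    """
    n = len(points)
    if isinstance(dist, (int, float)):
        dist = [float(dist)] * n
    out = []
    for i in range(n):
        # Tangent by central difference; one-sided at the two endpoints.
        x0, y0 = points[max(i - 1, 0)]
        x1, y1 = points[min(i + 1, n - 1)]
        tx, ty = x1 - x0, y1 - y0
        length = math.hypot(tx, ty)
        # Left-hand unit normal of the tangent.
        nx, ny = -ty / length, tx / length
        px, py = points[i]
        out.append((px + dist[i] * nx, py + dist[i] * ny))
    return out
```

For a uniformly sampled circular arc, the central-difference tangent at an interior vertex is exactly perpendicular to the radius, so the interior offset vertices land on the concentric circle.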

Update:

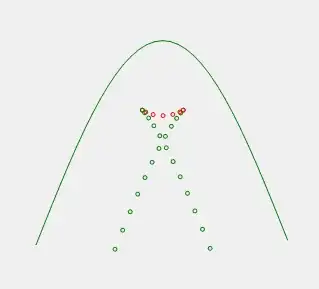

There is a straightforward way to detect the cusps: they arise where the radius of curvature equals the offset distance. In the discrete setting, the osculating circle can be approximated by the circumscribed circle (circumcircle) of three consecutive vertices.
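The circumcircle criterion can be sketched as follows, assuming the same vertex-list representation. `menger_curvature` returns the signed inverse circumradius of three points (the discrete Menger curvature, `4 * Area / (|ab| |bc| |ca|)`), and `cusp_flags` is a hypothetical helper that marks interior vertices where the offset goes toward the centre of curvature by more than the radius, i.e. `kappa * d > 1`, with positive `d` meaning an offset to the left of the direction of travel. Endpoints are never flagged because they lack two neighbours.

```python
import math

def menger_curvature(a, b, c):
    """Signed curvature of the circle through a, b, c (inverse circumradius).

    Positive for a left (counterclockwise) turn, negative for a right turn,
    zero for collinear points. Assumes no two of the points coincide.
    """
    # 2D cross product (b - a) x (c - a) equals twice the signed triangle area.
    cross = (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])
    # kappa = 4 * Area / (|ab| |bc| |ca|) = 2 * cross / (|ab| |bc| |ca|)
    return 2.0 * cross / (math.dist(a, b) * math.dist(b, c) * math.dist(c, a))

def cusp_flags(points, dist):
    """Flag interior vertices whose estimated radius of curvature is smaller
    than the offset distance on the concave side (kappa * d > 1).

    dist: one number or one distance per vertex; positive = offset to the left.
    """
    n = len(points)
    if isinstance(dist, (int, float)):
        dist = [float(dist)] * n
    flags = [False] * n
    for i in range(1, n - 1):
        k = menger_curvature(points[i - 1], points[i], points[i + 1])
        # Offsetting toward the centre of curvature (same sign as k) by more
        # than R = 1 / |k| folds the offset curve back on itself.
        flags[i] = k * dist[i] > 1.0
    return flags
```

Offsetting away from the centre of curvature gives `kappa * d <= 0`, so convex-side vertices are never flagged, whatever the distance.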

The figure shows the offset vertices, drawn in red where the estimated radius of curvature is smaller than the offset distance. This criterion makes it possible to detect evidence of self-intersections.
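One way to turn the red vertices into candidate pockets, offered as an assumption rather than a tested recipe: group consecutive flagged indices into runs and widen each run by a few vertices. In a swallowtail-shaped pocket, the two branches that cross typically lie on either side of the cusps, outside the flagged stretch, so the search window has to extend beyond it. The helper name `flagged_runs` and its `margin` parameter are hypothetical; a fixed margin is a heuristic and is not guaranteed to contain the crossing for every shape.

```python
def flagged_runs(flags, margin=0):
    """Group consecutive True entries of `flags` into (start, end) index ranges.

    Each run is widened by `margin` vertices on both sides (clipped to the
    list bounds), and runs that overlap or touch after widening are merged.
    `end` is inclusive.
    """
    runs = []
    i, n = 0, len(flags)
    while i < n:
        if not flags[i]:
            i += 1
            continue
        # Extend j to the last index of this block of True values.
        j = i
        while j + 1 < n and flags[j + 1]:
            j += 1
        start, end = max(i - margin, 0), min(j + margin, n - 1)
        if runs and start <= runs[-1][1] + 1:
            # Overlaps or touches the previous run: merge the two.
            runs[-1] = (runs[-1][0], end)
        else:
            runs.append((start, end))
        i = j + 1
    return runs
```

Because the offset polyline keeps one vertex per original vertex, these index ranges apply directly to the offset points.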

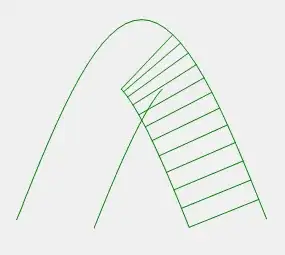

I suspect that the "ladder" formed by the initial polyline and the corresponding offset points can help locate the intersection efficiently.
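For comparison, here is a simpler baseline that does not use the ladder structure: a brute-force segment-pair search restricted to a window `[start, end]` of offset vertices (for example one of the widened runs of red vertices), costing O(w²) for a window of w vertices. It keeps the crossing pair with the widest index span, so that small extra crossings near the cusp tips are swallowed by the outermost loop, and replaces everything strictly between the two segments with the intersection point, which becomes the corner. The names `segment_intersection` and `remove_pocket` are hypothetical; parallel and collinear-overlapping segments are simply ignored in this sketch.

```python
def segment_intersection(p, q, r, s):
    """Intersection point of segments pq and rs, or None (parallel pairs ignored)."""
    d1 = (q[0] - p[0], q[1] - p[1])
    d2 = (s[0] - r[0], s[1] - r[1])
    denom = d1[0] * d2[1] - d1[1] * d2[0]  # cross(d1, d2)
    if denom == 0:
        return None
    # Solve p + t*d1 = r + u*d2 using 2D cross products.
    w = (r[0] - p[0], r[1] - p[1])
    t = (w[0] * d2[1] - w[1] * d2[0]) / denom
    u = (w[0] * d1[1] - w[1] * d1[0]) / denom
    if 0.0 <= t <= 1.0 and 0.0 <= u <= 1.0:
        return (p[0] + t * d1[0], p[1] + t * d1[1])
    return None

def remove_pocket(offset_pts, start, end):
    """Cut the loop out of offset_pts within the inclusive window [start, end].

    Tests non-adjacent segment pairs (i, i+1) and (j, j+1) with j >= i + 2 and
    j + 1 <= end, keeps the crossing with the largest j - i, and replaces the
    vertices strictly between the two segments by the crossing point.
    Returns a new list (an unchanged copy if no crossing is found).
    """
    best = None
    for i in range(start, end):
        # Scan j downward so the first hit is the widest loop for this i.
        for j in range(end - 1, i + 1, -1):
            x = segment_intersection(offset_pts[i], offset_pts[i + 1],
                                     offset_pts[j], offset_pts[j + 1])
            if x is not None:
                if best is None or j - i > best[1] - best[0]:
                    best = (i, j, x)
                break
    if best is None:
        return list(offset_pts)
    i, j, x = best
    return offset_pts[:i + 1] + [x] + offset_pts[j + 1:]
```

For instance, `remove_pocket([(0, 0), (2, 0), (2, 1), (1, 1), (1, -1), (3, -1)], 0, 5)` cuts the square loop and returns `[(0, 0), (1.0, 0.0), (1, -1), (3, -1)]`. A ladder-based search could replace the inner loop if it turns out to be cheaper in practice.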