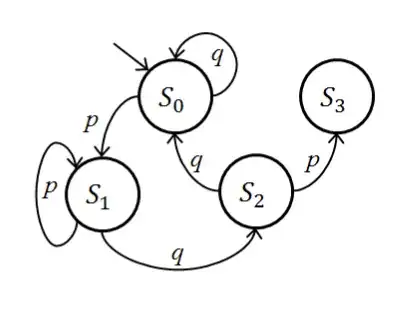

I am tossing a coin with probability $p$ of heads (H) and $q=1-p$ of tails (T). What is the expected number of tosses until I get HTH?

The book that I am reading suggests the following solution. Let $Y$ denote the number of tosses until HTH first appears, and consider the event that HTH does not occur in the first $n$ tosses and that the next three tosses are HTH. Looking at the possible outcomes of the $(n-1)$-th and $n$-th tosses, followed by HTH, we can deduce that: \begin{equation} \mathbb{P}(Y>n)p^2q=\mathbb{P}(Y=n+1)pq+\mathbb{P}(Y=n+3),\quad n\geq 2 \end{equation} The book then says that summing this equation over $n$ gives $\mathbb{E}(Y)=(pq+1)/(p^2q)$. However, I have tried carrying out the sum and manipulating it in several ways, and I could not arrive at this result.
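As a numerical sanity check of both the identity and the claimed value of $\mathbb{E}(Y)$, here is a minimal Python sketch. It is not the book's method: it assumes the distribution of $Y$ (the toss on which HTH first completes) can be computed by tracking how much of the pattern has been matched so far (nothing, "H", or "HT"), and it truncates the sum for the mean at `n_max` tosses. The names `hth_pmf` and `check` are purely illustrative.

```python
def hth_pmf(p, n_max):
    """Return [P(Y=0), ..., P(Y=n_max)], Y = toss on which HTH first completes."""
    q = 1.0 - p
    # Probabilities of having matched "", "H", "HT" so far without having seen HTH.
    s0, s1, s2 = 1.0, 0.0, 0.0
    pmf = [0.0]  # P(Y=0) = 0
    for _ in range(n_max):
        # HTH completes on this toss if we are at "HT" and toss H.
        pmf.append(s2 * p)
        # "" or "HT" followed by T resets to ""; "" or "H" followed by H gives "H";
        # "H" followed by T gives "HT".
        s0, s1, s2 = (s0 + s2) * q, (s0 + s1) * p, s1 * q
    return pmf


def check(p, n_max=5000, n_identity=50):
    """Return (truncated mean, closed form, worst violation of the identity)."""
    q = 1.0 - p
    pmf = hth_pmf(p, n_max)
    mean = sum(n * pn for n, pn in enumerate(pmf))  # truncated E(Y)
    closed_form = (p * q + 1.0) / (p * p * q)
    worst = 0.0
    surv = 1.0  # becomes P(Y > n) after subtracting P(Y = n) below
    for n in range(n_identity):
        surv -= pmf[n]
        lhs = surv * p * p * q
        rhs = pmf[n + 1] * p * q + pmf[n + 3]
        worst = max(worst, abs(lhs - rhs))
    return mean, closed_form, worst


if __name__ == "__main__":
    for p in (0.3, 0.5, 0.7):
        print(p, check(p))
```

For a fair coin ($p=1/2$) the closed form evaluates to $(1/4+1)/(1/8)=10$, and the truncated mean should agree with it up to floating-point error. The third returned value is the largest absolute gap between the two sides of the identity over the first `n_identity` values of $n$.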