My linear algebra foundation is really weak, so please bear with me. I am working with a vector and a kernel matrix. The kernel matrix is 500x500, call it K, and the input is a 500x1 vector, call it a.

I can calculate the 500x1 vector t by multiplying the kernel with the input: t = Ka. Now, suppose I'm given only K and t, and I want to use them to find a. To put it another way: if K maps a from the input space to the feature space containing t, I am trying to find another kernel matrix that maps t back to the input space.
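Written as equations, the "reverse" map asked about here is the inverse matrix $K^{-1}$ (defined by $K^{-1}K = I$), and it only exists when $K$ is nonsingular:

$$
t = K a \quad\Longrightarrow\quad K^{-1} t = K^{-1} K a = a, \qquad \text{which requires } \det K \neq 0 .
$$

If $\det K = 0$, several different vectors $a$ produce the same $t$, so no matrix can map $t$ back to a unique $a$. In practice the linear system $Ka = t$ is usually solved directly rather than by forming $K^{-1}$ explicitly (in MATLAB this is what the backslash operator, `a = K \ t`, does).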

At first, I thought of inverting the kernel matrix, but I can't get its inverse in MATLAB. I think it might be because the kernel matrix is lower triangular: everything above the diagonal is 0. I then thought I could break the matrix down into two smaller matrices with non-negative matrix factorization (NNMF), but that didn't work at all in MATLAB. The precision was off, and when I multiplied the two resulting factors back together to approximate the original kernel, every entry came out as `Inf`.
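Being lower triangular does not by itself prevent inversion: a lower-triangular matrix is invertible exactly when every diagonal entry is nonzero, and in that case $Ka = t$ can be solved row by row with forward substitution, without ever forming $K^{-1}$. Below is a minimal pure-Python sketch, assuming K is square and lower triangular; the function name `forward_substitution` and the 3x3 example matrix are hypothetical, chosen only for illustration.

```python
def forward_substitution(K, t):
    """Solve K a = t for a, assuming K is square and lower triangular."""
    n = len(K)
    a = [0.0] * n
    for i in range(n):
        if K[i][i] == 0:
            # A zero diagonal entry means K is singular: no unique solution.
            raise ValueError(f"zero on the diagonal at row {i}: K is singular")
        # Subtract the contributions of the already-solved entries a[0..i-1].
        s = sum(K[i][j] * a[j] for j in range(i))
        a[i] = (t[i] - s) / K[i][i]
    return a


# Hypothetical 3x3 lower-triangular K and a known input vector.
K = [[2.0, 0.0, 0.0],
     [1.0, 3.0, 0.0],
     [4.0, -1.0, 5.0]]
a_true = [1.0, -2.0, 0.5]
t = [sum(K[i][j] * a_true[j] for j in range(3)) for i in range(3)]

a = forward_substitution(K, t)
print(a)  # → [1.0, -2.0, 0.5]
```

The same loop works unchanged for a 500x500 K; it only reads the entries on and below the diagonal.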

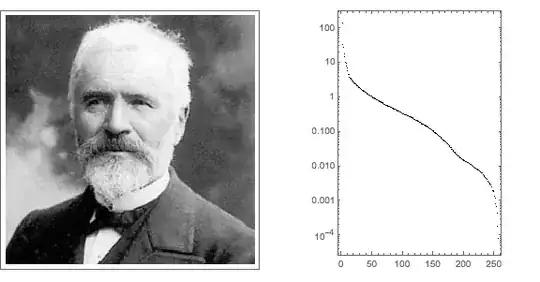

Right now, I'm using SVD to get the singular values of the kernel, and from U, S, V (again, in MATLAB) I computed the inverse of the kernel matrix. However, when I multiplied K inverse with t, the result did not exactly match a: roughly the first 300 entries were very close to the true values, but the remaining 200 were wildly off, by a large magnitude.
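For reference, here is a minimal sketch of the SVD route in Python/NumPy (the post uses MATLAB, so this translation is an assumption). With $K = U \,\mathrm{diag}(s)\, V^T$, the solution is $a = V \,\mathrm{diag}(1/s)\, U^T t$. Dividing by very small singular values amplifies rounding errors, which is one common cause of some entries coming out wildly wrong, so this sketch drops singular values below a relative cutoff `rtol` (a hypothetical parameter and default value). That is the truncated-SVD pseudoinverse, similar in spirit to `np.linalg.pinv`, which also discards small singular values. The test matrix below is a hypothetical, well-conditioned lower-triangular K, not the kernel from the post.

```python
import numpy as np


def svd_solve(K, t, rtol=1e-10):
    """Estimate a from t = K a using the SVD K = U diag(s) Vt.

    Singular values below rtol * s.max() are treated as zero (truncated SVD),
    so their tiny reciprocals cannot blow up the result.
    """
    U, s, Vt = np.linalg.svd(K, full_matrices=False)
    keep = s > rtol * s.max()
    # Coordinates of t in the left-singular basis, divided by the kept singular values.
    coeffs = np.zeros_like(s)
    coeffs[keep] = (U.T @ t)[keep] / s[keep]
    return Vt.T @ coeffs


# Hypothetical 500x500 lower-triangular K with a strong diagonal (well-conditioned).
rng = np.random.default_rng(0)
n = 500
K = np.tril(rng.standard_normal((n, n)), k=-1) + n * np.eye(n)
a_true = rng.standard_normal(n)
t = K @ a_true

a_hat = svd_solve(K, t)
print("condition number of K:", np.linalg.cond(K))
print("max error in recovered a:", np.max(np.abs(a_hat - a_true)))
```

Comparing `a_hat` against a known `a` (not against `t`) is the meaningful check, and printing `np.linalg.cond(K)` for the real kernel shows whether it is close to singular; a condition number near `1 / np.finfo(float).eps` means some components of `a` cannot be recovered reliably from `t`.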

I know that this is a really vague description, but my knowledge of linear algebra is very weak, and I'm not sure what else I can do. Is there a way to map from the feature space to the input space? Is SVD the way to go?