When it comes to definitions, I am very strict. Most textbooks define the differential of a function or variable like this:

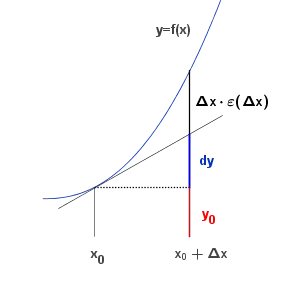

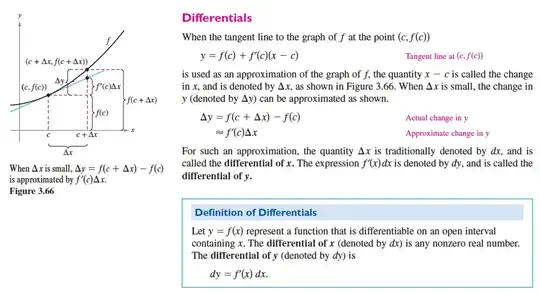

Let $f(x)$ be a differentiable function. Assuming that the change in $x$ is small enough, we can write: $$\Delta f(x)\approx {f}'(x)\Delta x$$ where $\Delta f(x)$ is the change in the value of the function. Now we define the differential of $f(x)$ as follows: $$\mathrm{d}f(x):= {f}'(x)\mathrm{d} x$$ where $\mathrm{d} f(x)$ is the differential of $f(x)$ and $\mathrm{d} x$ is the differential of $x$.
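To make the approximation in that definition concrete, here is a small Python sketch that compares the actual change $\Delta f(x)$ with the linear estimate $f'(x)\Delta x$ for shrinking $\Delta x$. The choice $f(x)=x^2$ at $x=3$ and the helper names `f`, `f_prime` and `compare` are illustrative assumptions, not taken from either textbook.

```python
# Illustrative sketch (assumed example, not from the textbooks): compare the actual
# change Δf = f(x + Δx) - f(x) with the linear estimate f'(x)Δx.
from typing import Tuple


def f(x: float) -> float:
    """Hypothetical example function f(x) = x^2."""
    return x ** 2


def f_prime(x: float) -> float:
    """Its derivative f'(x) = 2x, written down by hand."""
    return 2 * x


def compare(x: float, dx: float) -> Tuple[float, float, float]:
    """Return (Δf, f'(x)Δx, (Δf - f'(x)Δx) / Δx) at the point x for the increment dx."""
    delta_f = f(x + dx) - f(x)
    linear = f_prime(x) * dx
    return delta_f, linear, (delta_f - linear) / dx


if __name__ == "__main__":
    for dx in (0.1, 0.01, 0.001):
        delta_f, linear, ratio = compare(3.0, dx)
        print(f"Δx={dx:<6} Δf={delta_f:.6f}  f'(x)Δx={linear:.6f}  error/Δx={ratio:.6f}")
```

For this particular $f$, $\Delta f(x) - f'(x)\Delta x = (\Delta x)^2$ exactly, so the last column equals $\Delta x$ up to rounding. The error shrinks faster than $\Delta x$ itself, which is the sense in which $\Delta f(x)\approx f'(x)\Delta x$ holds.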

What bothers me is that this definition is completely circular: we are defining the differential in terms of a differential. Can we define the differential more precisely and rigorously?

P.S. Is it possible to define the differential simply as the limit of a difference as the difference approaches zero? Something like: $$\mathrm{d}x= \lim_{\Delta x \to 0}\Delta x$$ Thank you in advance.

EDIT:

I still don't think I have found the best answer. I would prefer an answer in the context of "Calculus" or "Analysis" rather than the "Theory of Differential Forms". And again, I don't want a circular definition. I think it should be possible to define "Differential" using "Limits" in some way. Thank you in advance.

EDIT 2 (reply to Mikhail Katz's comment):

> The account I gave in terms of the hyperreal number system which contains infinitesimals seems to respond to your concerns. I would be happy to elaborate if anything seems unclear. – Mikhail Katz

Thank you for your help. I have two issues:

First of all, we define the differential as $\mathrm{d} f(x)=f'(x)\mathrm{d} x$. Then we fool ourselves into believing that $\mathrm{d} x$ is nothing but another notation for $\Delta x$. Then, without explaining why, we go on to treat $\mathrm{d} x$ as the differential of the variable $x$, and we write the derivative of $f(x)$ as the ratio of $\mathrm{d} f(x)$ to $\mathrm{d} x$. So we have literally (and quietly fooled ourselves along the way) defined "Differential" in terms of another differential, which is circular.

Second, I think it could be possible to define the differential without any knowledge of the notion of derivative. Then we could define "Derivative" and "Differential" independently (possibly using limits) and deduce that the relation $f'(x)=\frac{\mathrm{d} f(x)}{\mathrm{d} x}$ is a natural consequence of their definitions rather than part of either definition.
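For comparison, the derivative on its own can already be stated purely with a limit, $f'(x)=\lim_{h\to 0}\frac{f(x+h)-f(x)}{h}$, with no differentials involved. The Python sketch below just evaluates that difference quotient for shrinking $h$; the helper names `difference_quotients` and `cube` and the test function $f(x)=x^3$ are illustrative assumptions, and floating-point numbers can only suggest the limit, not prove it.

```python
# Illustrative sketch: the derivative as a limit of difference quotients,
#   f'(x) = lim_{h -> 0} (f(x + h) - f(x)) / h,
# stated without any reference to differentials. Floating point can only
# suggest the limit numerically; it does not prove it.
from typing import Callable, List


def difference_quotients(f: Callable[[float], float], x: float, hs: List[float]) -> List[float]:
    """Return (f(x + h) - f(x)) / h for each step size h in hs."""
    return [(f(x + h) - f(x)) / h for h in hs]


def cube(t: float) -> float:
    """Hypothetical example function f(t) = t^3, whose derivative at t = 2 is 12."""
    return t ** 3


if __name__ == "__main__":
    steps = [10.0 ** -k for k in range(1, 6)]  # h = 0.1, 0.01, ..., 0.00001
    for h, q in zip(steps, difference_quotients(cube, 2.0, steps)):
        print(f"h={h:.0e}  quotient={q:.8f}")
```

Here the quotient equals $12 + 6h + h^2$ exactly, so it tends to $f'(2)=12$ as $h\to 0$.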

I know the relation $\mathrm{d} f(x)=f'(x)\mathrm{d} x$ always works and always gives us a way to calculate differentials. But as a strict axiomatist, I can't accept it as the definition of the differential.
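As a concrete instance of that rule (the function, point and increment are chosen only for illustration), take $f(x)=x^3$ at $x=2$ and use the increment $0.01$ as the value of $\mathrm{d}x$. The rule gives the differential, and the actual change differs from it by $6(0.01)^2+(0.01)^3=0.000601$:

$$\mathrm{d}f = f'(2)\,\mathrm{d}x = 3\cdot 2^2\cdot 0.01 = 0.12,\qquad \Delta f = f(2.01)-f(2) = 8.120601-8 = 0.120601$$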

EDIT 3:

Replies to comments:

> I am not aware of any textbook defining differentials like this. What kind of textbooks have you been reading? – Najib Idrissi

> Which textbooks? – m_t_

Check "Calculus and Analytic Geometry" by Thomas and Finney, 9th edition, page 251,

and "Calculus: Early Transcendentals" by Stewart, 8th edition, page 254.

Both literally define a differential in terms of another differential.