Suppose that $Y_1,\ldots,Y_n$ are random variables that satisfy the model $Y_i=\theta x_i+e_i$, $i=1,\ldots,n$, where $x_1,\ldots,x_n$ are fixed (non-random) known constants and $e_1,\ldots,e_n$ are independent and identically distributed $N(0,\sigma^2)$ random variables, with $\sigma^2$ unknown.

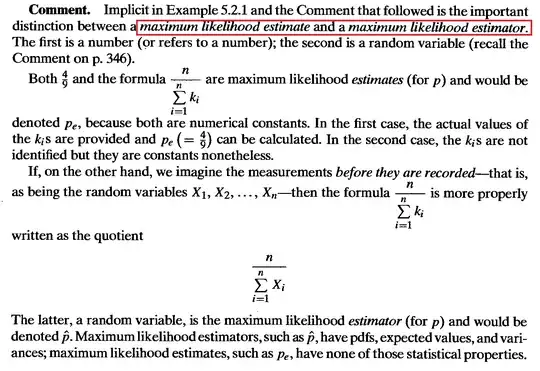

a. Find the maximum likelihood estimator of $\theta$ and find its variance.

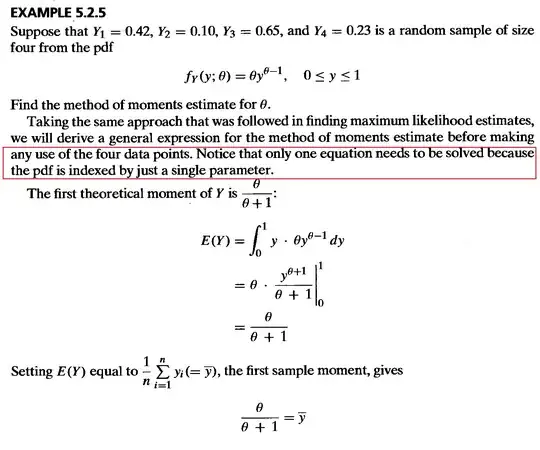

b. Find the method of moments estimator of $\theta$ and calculate its variance.

What I have so far:

a. First, given that each $e_i$ has the $N(0,\sigma^2)$ pdf, I used the change-of-variables technique to get $f_{Y_i}(y_i)=\frac{1}{\sqrt{2\pi}\sigma}e^{-\frac{(y_i-\theta x_i)^2}{2\sigma^2}}|1|$. From the likelihood function I got $L(\theta)=0$, so I inferred that $\theta=y_{\max}$, since that value made $L(\theta)$ smallest. How do I proceed to find the variance?

b. I found $E(X)$ and $E(X^2)$; however, they are both zero. Does the MOM estimator exist?
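
One way to check any candidate answer is by simulation. The sketch below is only an illustration under assumptions not in the problem: the values of `theta`, `sigma`, and `x` are hypothetical, and the function name `simulate_estimators` is made up here. It assumes the candidate for part a is $\hat\theta=\sum x_iY_i/\sum x_i^2$ (what setting the derivative of the normal log-likelihood in $\theta$ to zero would give, provided $\sum x_i^2>0$), and that one possible moment-based candidate for part b is $\tilde\theta=\sum Y_i/\sum x_i$ (from matching $\sum E(Y_i)=\theta\sum x_i$, provided $\sum x_i\neq 0$). It then compares their empirical variances with $\sigma^2/\sum x_i^2$ and $n\sigma^2/(\sum x_i)^2$.

```python
import random
import statistics


def simulate_estimators(theta, sigma, x, n_reps=20000, seed=0):
    """Simulate Y_i = theta * x_i + e_i with e_i ~ N(0, sigma^2), n_reps times.

    Returns two lists of estimates, one entry per simulated sample:
    the least-squares-type candidate and the moment-matching candidate.
    """
    rng = random.Random(seed)
    sum_x = sum(x)
    sum_x2 = sum(xi * xi for xi in x)
    mle_estimates, mom_estimates = [], []
    for _ in range(n_reps):
        # One simulated sample Y_1, ..., Y_n from the model.
        y = [theta * xi + rng.gauss(0.0, sigma) for xi in x]
        # Candidate for part a: sum(x_i * Y_i) / sum(x_i^2).
        mle_estimates.append(sum(xi * yi for xi, yi in zip(x, y)) / sum_x2)
        # Candidate for part b: sum(Y_i) / sum(x_i).
        mom_estimates.append(sum(y) / sum_x)
    return mle_estimates, mom_estimates


# Hypothetical values chosen only for illustration.
x = [1.0, 2.0, 3.0, 4.0, 5.0]
theta, sigma = 2.0, 1.5

mle, mom = simulate_estimators(theta, sigma, x)

# Variances implied by the formulas stated above.
var_mle_theory = sigma**2 / sum(xi * xi for xi in x)
var_mom_theory = len(x) * sigma**2 / sum(x) ** 2

print("part a candidate: mean", statistics.fmean(mle),
      "variance", statistics.pvariance(mle), "theory", var_mle_theory)
print("part b candidate: mean", statistics.fmean(mom),
      "variance", statistics.pvariance(mom), "theory", var_mom_theory)
```

If the empirical and theoretical variances agree closely, that supports the derivation, although it does not replace it.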