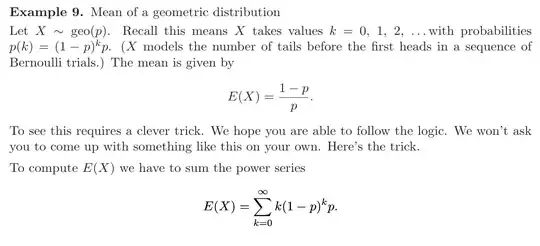

The question is about an example from Introduction to Probability and Statistics at MIT OpenCourseWare, specifically from this document.

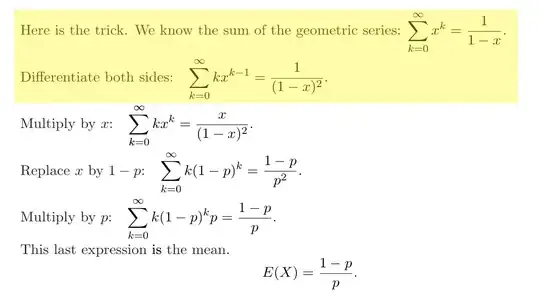

My question is: why is the highlighted transformation allowed? The example is about the geometric distribution, so $p \in (0, 1)$, and since $x$ is later replaced by $1 - p$, only $x \in (0, 1)$ is of interest.
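For reference, this is the step as I read it from the excerpt. The excerpt is an image, so the notation below is my own transcription and may differ slightly from the original:

$$\sum_{k=0}^{\infty} x^k = \frac{1}{1-x} \quad\Longrightarrow\quad \sum_{k=0}^{\infty} k x^{k-1} = \frac{d}{dx} \sum_{k=0}^{\infty} x^k = \frac{d}{dx}\,\frac{1}{1-x} = \frac{1}{(1-x)^2}$$

The part I am unsure about is the first equality on the right-hand side, where the derivative is moved inside the infinite sum.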

I tried looking up theorems about this on the internet and in Michael Spivak's Calculus.

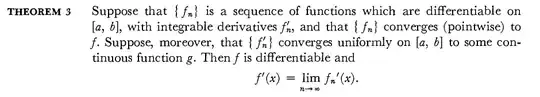

Here is what Spivak says about this in Chapter 23:

This does not seem applicable to my problem, because:

- I have an open interval, but I can probably figure out a way around that.

- This is the more important point. I don't know if $\sum_{k=0}^{\infty} k x^{k-1}$ converges uniformly to anything, let alone if it converges to a continuous function. If I knew that, I would probably know what it converges to exactly and thus I would not need to use the sum rule.

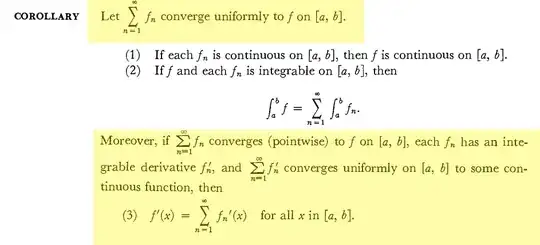

Now about this answer. It contains the following theorem:

THEOREM 1 If the series $\sum u_k(x)$ composed of functions with continuous derivatives on $[a,b]$ converges to a sum function $s(x)$ and the series $$\sum u'_k(x)$$ composed of these derivatives is majorant on $[a,b]$, then $$s'(x)=\sum u'_k(x)$$

I can't apply it here because:

- I have an open interval, not a closed interval.

- $\sum_{k=0}^{\infty} k x^{k-1}$ is most probably not majorant on $(0, 1)$.

- It is most probably true that for every $a \in (0, 1)$ this series of derivatives is majorant on $(0, a]$, but I can't prove it.
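A quick numerical check of the last point, as a minimal sketch and not a proof. It assumes the candidate majorant on $(0, a]$ is $\sum k a^{k-1}$ and that the limit is $1/(1-x)^2$. The function names, the grid, and the choice $a = 0.9$ are mine, purely for illustration.

```python
def partial_sum(x: float, n_terms: int) -> float:
    """Partial sum of sum_{k=1}^{n_terms} k * x**(k-1) (the k=0 term is zero)."""
    return sum(k * x ** (k - 1) for k in range(1, n_terms + 1))


def closed_form(x: float) -> float:
    """Conjectured limit of the series for 0 < x < 1."""
    return 1.0 / (1.0 - x) ** 2


def max_gap_on_grid(a: float, n_terms: int, grid_size: int = 200) -> float:
    """Largest |closed_form(x) - partial_sum(x, n_terms)| over a grid in (0, a]."""
    xs = [a * (i + 1) / grid_size for i in range(grid_size)]
    return max(abs(closed_form(x) - partial_sum(x, n_terms)) for x in xs)


if __name__ == "__main__":
    a = 0.9  # illustrative right endpoint, any value in (0, 1) could be used
    for n in (10, 50, 200, 400):
        # partial_sum(a, n) is the partial sum of the candidate majorant series
        print(n, partial_sum(a, n), max_gap_on_grid(a, n))
```

On the grid, the worst gap shrinks as more terms are added and the majorant partial sums stay bounded, which is consistent with uniform convergence on $(0, a]$. A finite grid cannot establish this, so the question of a proof still stands.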

TL;DR: why can we use the sum rule to differentiate the series term by term in the first highlighted excerpt?