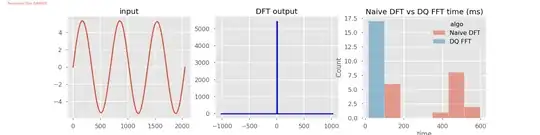

I feel that all the theoretical elements are present in the others' responses, but not the pragmatic reason why FFT reduces the complexity by a significant factor (and hence the popularity of this algorithm among other things).

Applying the DFT amounts to multiplying a matrix to a vector (your original, sampled, signal, for example, each entry of your vector being one sample). That matrix is the Fourier matrix. Say you have $2n$ samples, the matrix to consider is then (up to a constant factor)

$(F_{2n})_{jk} = \exp(i\pi \times jk/n)$

where $i=\sqrt{-1}$. These are roots of unity.

Now the magic happens because of a so-called butterfly property of these matrices, you can decompose them in a product of matrices: each of which is cheap to apply to a vector (cf. below)

For example, take $F_4$, it is given (up to a factor) by:

$$ F_4 \propto \left(\begin{array}{cccc} 1 & 1 & 1 & 1\\ 1 & i & i^2 & i^3 \\ 1 & i^2 & i^4 & i^6 \\ 1 & i^3 & i^6 & i^9 \end{array} \right) \propto \left(\begin{array}{cc|cc} 1 & 0 & 1 & 0\\ 0 & 1 & 0 & i\\\hline 1 & 0 & -1 & 0\\ 0 & 1 & 0 & -i \end{array} \right)

\left(\begin{array}{cc|cc} 1 & 1 & 0 & 0\\ 1 & i^2 & 0 & 0\\\hline 0 & 0 & 1 & 1\\ 0 & 0 & 1 & i^2 \end{array} \right)

\left(\begin{array}{cccc} 1 & 0 & 0 & 0\\ 0 & 0 & 1 & 0\\ 0 & 1 & 0 & 0\\ 0 & 0 & 0 & 1 \end{array} \right) $$

Let's look at the first two matrices: their structure is very typical, (you could show by recursion how this generalizes) but more about this later. What matters for us now is that there are only 2 non-zero entries per line. This means that when considering the application of one of those matrices to a vector, the number of operations to do is a modest constant times $n$. The number of matrices in the decomposition, on the other hand, grows like $\log n$. So overall the complexity scales like $n\times \log n$ where an unstructured, basic, matrix-vector product would scale like $n^2$.

(To clarify this point: starting from the right, you apply one matrix to one vector, this costs $O(n)$, then you apply a matrix to the resulting vector, again $O(n)$, since there are $O(\log n)$ matrices, the overall complexity is $O(n\log n)$ where $O$ signifies (roughly) "of the order of", for more about big-O notation, check out the wikipedia page (1))

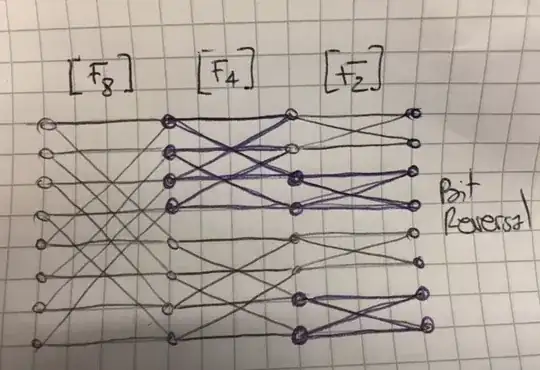

Finally, the last matrix in the decomposition is a bit-reversal matrix. Not going in the details it can be computed extremely efficiently. (But even naively, the cost is obviously not more than linear in $n$).

Provided I haven't lost you here, let's generalize a bit: the matrix $F_{2n}$ has the following form (this is not hard to check)

$$ F_{2n} \propto

\left(\begin{array}{cc} I_n & A_n \\ I_n & -A_n \end{array}\right)

\left(\begin{array}{cc} F_n & 0 \\ 0 & F_n \end{array}\right) B_{2n} $$

where $B_{2n}$ is a bit-reversal matrix of appropriate dimensions, $F_n$ is the DFT matrix of order $n$, and hence this matrix can itself be expressed as a product etc..

$I_n$ is the identity matrix of size $n\times n$ and $A_n$ is a diagonal matrix with the powers of $i$ from $0$ to $n-1$.

By the way you can now observe that it's desirable to have the size of your original sample-vector be a power of $2$. If it is not the case, it is cheaper to pad your vector with zeros (this is actually called zero padding)

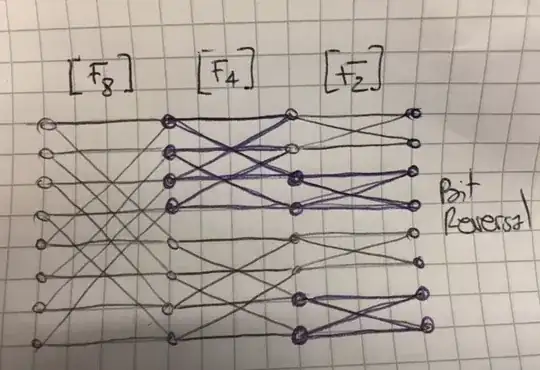

The last thing to clarify is the butterfly idea (see also [2]). For this, nothing better than a small drawing (sorry I didn't want to do this in Tikz, if there's a hero out there...). If you represent the vectors after each stage by dots and you connect the dots from the original vector to the vector after the matrix has been applied, you get this nice diagram (ignoring the bit reversal)

I've tried to make clear on the diagram that the first level corresponds to 4 applications of $F_2$, the next level to 2 applications of $F_4$ etc. Just to make it clearer, starting from the right it means that the first matrix multiplication after the bit-reversal will

- for the first entry, need to look at the first and the second entry of the original vector (corresponding to a non-zero entries at positions (1,1) and (1,2) in that level's matrix)

- for the next entry, need to look at the first and the second entry (this makes the first block in the "F2" column (corresponding to non-zero entries at positions (2,1) and (2,2))

etc.. Further, observe that, at each level, we can consider blocks of entries independently. Again this leads to further optimization that can make the FFT very very efficient.

Ps: I've ignored all multiplication constants since that's an O(1) computation, it's easy to check what they are and it will depend on conventions you're using.

- https://en.wikipedia.org/wiki/Big_O_notation

- https://en.wikipedia.org/wiki/Butterfly_diagram

see also: https://en.wikipedia.org/wiki/Cooley%E2%80%93Tukey_FFT_algorithm#Pseudocode

where some pseudocode is available illustrating how you would code the FFT recursively.

Another good resource to see how to code the FFT from scratch: https://jakevdp.github.io/blog/2013/08/28/understanding-the-fft/