I will try to start from the simplest case possible and then build up to your situation, in order to hopefully develop some intuition for the notion of convolution.

Convolution essentially generalizes the process of calculating the coefficients of the product of two polynomials.

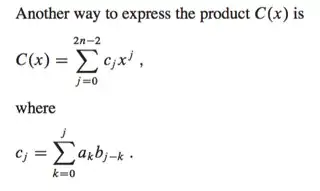

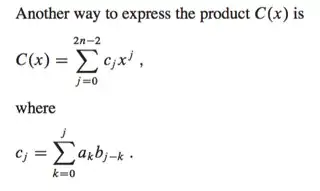

See for example here: Multiplying polynomial coefficients. This also comes up in the context of the Discrete Fourier Transform. If we have $C(x)=A(x)B(x)$, with $A(x), B(x)$ polynomials, we have:  The image is from Cormen et al, Introduction to Algorithms, p. 899.

The image is from Cormen et al, Introduction to Algorithms, p. 899.

This type of operation also becomes necessary when calculating the probability distributions of discrete random variables. In fact, this type of formula allows us to prove that the sum of independent Bernoulli random variables is binomially distributed.

If we want to calculate the probability distribution of two discrete random variables with infinite support (for example, the Poisson distribution, which can take infinitely many possible values with positive probability), then we need to use the Cauchy product to calculate the convolution. This just generalizes the formula given above for infinite series. The following image is from Wikipedia:

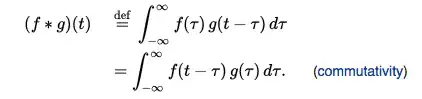

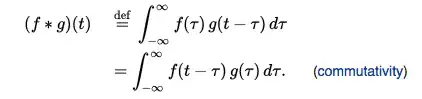

Now as you probably already know, the (Riemann) integral is the limit of infinite series, hence it should not be surprising that this convolution formula for infinite series also generalizes to a convolution formula for integrals. This is what you are working with for probability distributions of continuous random variables, as in your problem above.

Here is the formula (from Wikipedia again):

$U=Y-X = Y + (-X)$, so therefore $$f_U(u) =(f_Y * f_{-X})(u) = \int_{-\infty}^{\infty} f_Y(t) f_{-X}(u-t) \mathrm{d}t$$

Right now you only have the joint density, so in order to use the convolution formula, we have to calculate the marginal densities from the joint density. This will lead to us having a double integral. See for example here: How do I find the marginal probability density function of 2 continuous random variables? or Help understanding convolutions for probability?

More specifically (see e.g. here), $$f_Y(y) = \int_{-\infty}^{\infty}f_{XY}(x,y)dx$$ $$f_X(x) = \int_{-\infty}^{\infty} f_{XY}(x,y) dy$$ So now we have the (marginal) densities for $X$ and $Y$, but what we need are the densities for $-X$ and $Y$, so we need to calculate the density of $-X$ based on the density for $X$, which is done as follows (for $X$ continuous such that $\mathbb{P}(X=c)=0$ for any $c \in \mathbb{R}$): $$\mathbb{P}(a \le X < b)= \mathbb{P}(-b < X \le -a) \\ \implies \mathbb{P}(a \le -X < b) = \int_{-b}^{-a} f_X(x)dx = \int_b^a [f_X(-x)](-1) \mathrm{d}x=\int_a^b f_X(-x) \mathrm{d}x$$

In other words, $$f_{-X}(x)=f_X(-x) = \int_{-\infty}^{\infty} f(-x,y)\mathrm{d}y.$$

So finally, $$f_U(u)= \int_{-\infty}^{\infty} \left[\int_{-\infty}^{\infty} f_{XY}(x,t)\mathrm{d}x\right] \left[\int_{-\infty}^{\infty} f_{XY}(-(u-t),y)\mathrm{d}y \right]\mathrm{d}t = \int_{-\infty}^{\infty} \left[\int_{-\infty}^{\infty} f_{XY}(x,u-t)\mathrm{d}x \right] \left[ \int_{-\infty}^{\infty} f_{XY}(-t,y)\mathrm{d}y \right]\mathrm{d}t$$ The second version might be easier to calculate; both are equivalent.