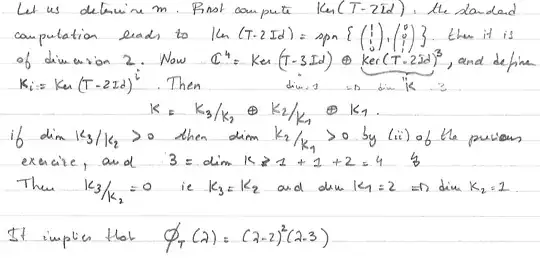

Here's another approach, not sure if compatible with what you've learned so far. If not maybe you can look back later.

You will need Cayley-Hamilton theorem and maybe something more. Background: The minimal polynomial divides the characteristic polynomial.

Cayley-Hamilton theorem is the result that every matrix fulfils it's own characteristic polynomial. Then the factors of the minimal polynomial is a subset of the factors in the characteristic polynomial. In fact it is the minimal degree polynomial ( therefore the name, I'd guess ) that fulfills the equation.

Knowing this we can calculate the vectorization of the first few $A^k$ and then solve an equation system where we equal it to the vectorization of the unit matrix. The lowest possible such $k$ that gives 0 error will have the coefficients of our minimal polynomial!

In GNU Octave or matlab this would do:

c = [vec(A),vec(A^2)]\vec(eye(4)); % first try, we know that it needs to be

% degree >= 2 because otherwise A would be 0 or a multiple of I, which it isn't.

Subsequent trials would correspond to successively adding a new column $\text{vec}(A^k)$ until

sum(abs([vec(A),vec(A^2)]*c-vec(eye(4))))

or some other error measure becomes sufficiently small with regard to our numerical precision.

Note that we don't need to find any eigenvalues or multiply together stuff or factor anything. Just a few matrix multiplications followed by linear regression.