Why does the "$\times$" used in arithmetic change to a "$\cdot$" as we progress through education? The symbol seems to only be ambiguous because of the variable $x$; however, we wouldn't have chosen the variable $x$ unless we were already removing $\times$ as the symbol for multiplication. So why do we? I am very curious. It seems like $\times$ is already quite sufficient as a descriptive symbol.

-

Beats me. I have a feeling the powers that be who determined how we teach arithmetic to children and those who decide conventions in algebra refuse to deign to sit in the same room. But it makes sense, sort of. Products become so basic we drop symbols for them altogether and write xy with no symbol at all. But for children we want something huge and unmistakable. Like a giant X – fleablood Jun 30 '16 at 04:30

-

TBH, while I know that × signifies multiplication, I have never learned it that way in school. The multiplication symbol always was the dot ⋅. So there obviously exist different conventions when it comes to teaching. – Chieron Jun 30 '16 at 06:17

-

Two things to note: (1) different cultures use different symbols (and indeed different writing systems for their languages), (2) people doing different things in math use different symbols for the same thing, out of convenience or tradition. Cf. wiki summary – Kimball Jun 30 '16 at 13:38

-

For historical references, you can see Earliest Uses of Symbols of Operation: MULTIPLICATION SYMBOLS. – Mauro ALLEGRANZA Jun 30 '16 at 19:37

-

I would probably look at Descartes for $x$ being used as a variable, as this answer suggests: http://math.stackexchange.com/a/2938/128967 – snulty Jun 30 '16 at 20:16

-

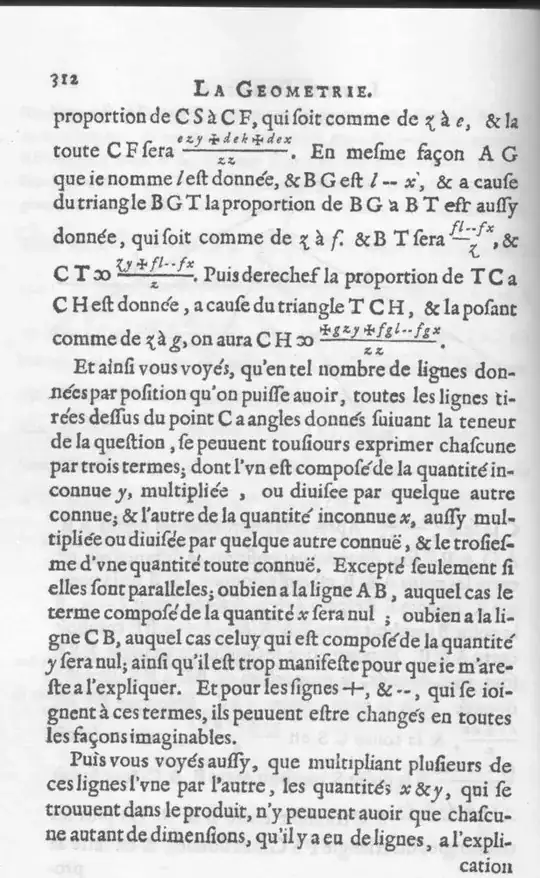

@snulty - Yes; in his La Geometrie (1637) Descartes uses $x$ (as well as $y,z$) for unknowns, but he uses juxtaposition for multiplication. – Mauro ALLEGRANZA Jul 01 '16 at 10:24

-

It may be interesting to note that, in the formal study of programming languages, it is common to use $\times$ for an analog of multiplication, while I have never seen a $\cdot$ used for that purpose. – YawarRaza7349 Jul 02 '16 at 22:37

-

I was referring to a different notion of "multiplication": the product type; see the article I linked to for a description of it. In programming language research papers, the $\times$ symbol is the conventional notation used for the product type. – YawarRaza7349 Jul 03 '16 at 00:38

-

It's simply easier to write. You need a clear notation, not easily confused with other symbols, for reporting to a wider audience; when thinking to yourself and dealing with people who are more knowledgeable, your audience has more "error correction capability". An analogy: back in the days when I had to do hand calculations for real engineering problems, I would use a comma for the decimal marker even though the culture around me uses the dot. I found that the dot was waaay too easy to lose on paper - it conveys so much information (power of ten) and yet is easily confused for dust! – Selene Routley Jul 13 '17 at 01:23

-

I feel guilty having made this question all those years ago, because I never actually wanted an answer... I just asked it because I thought it would be popular. I wonder now if I should dissociate the rep from my account. – user64742 Mar 02 '21 at 17:21

9 Answers

As @DavidRicherby points out in a comment below, one should ideally distinguish carefully the history of the dot notation from the possible reasons for keeping it, retaining it, or modifying its usage. Unfortunately, although I am qualified by age (66) to comment on the last 50 years, I am otherwise ill-qualified to deal with the history (for which see when and by whom and also the answers by @hjhjhj57 and @RobertSoupe). So what follows may sometimes seem to mix history and reasons and at times get the history wrong. It is intended as one individual's take on reasons. Note also that having only lived in the USA for about 3 years (2 years LA, and 6 months each in NY and Colorado), I am much more familiar with the UK scene than the USA scene, and know almost nothing about other countries.

There are multiple reasons. Perhaps the most important is a desire to make the notation as concise as possible. The change is not really from $a\times b$ to $a\cdot b$. It is from $a\times b$ to $ab$. In many undergraduate algebra books, and at the research and journal level, the "multiplication" operation is just denoted by juxtaposition. But then that is also true in some, maybe most, schools for teenagers. Glancing at the 2014 Core Maths papers from one of the leading UK exam boards for the "A-levels" (the final exams for most pupils), they seem to use juxtaposition exclusively. On the other hand, papers for GCSE maths (typically taken at age 16) seem to use $246\times10$.

This is also linked to a desire for speed. There are significantly fewer keystrokes in $ab$ than in either $a\times b$ or $a\cdot b$ if you are using LaTeX. Perhaps more important, being more concise, juxtaposition is easier to read. But as @DavidRicherby points out in a much upvoted comment below, LaTeX has come late to the party, so it may have a minor role in maintaining the status quo, but could not have helped to bring it about.

Another reason is avoiding ambiguity. For example $3^25$ is unambiguous because the exponent separates the $3$ and $5$. But in LaTeX if you try to write $3\cdot5^2$ by juxtaposition you have to insert a special space to get $3\ 5^2$ and the outcome is still not entirely satisfactory. But I may pay too much attention to such matters: having published two books in the last two years, I wonder how anyone manages to combine writing math books with a full-time job. The work involved is horrendous!
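To make the spacing point concrete, here is a minimal LaTeX sketch of the variants mentioned above. The thin space `\,` is my own addition for comparison and is not something the answer itself proposes; how "satisfactory" each line looks is a matter of taste.

```latex
\documentclass{article}
\begin{document}
% Exponent on the first factor: the superscript separates 3 and 5.
$3^2 5$

% Exponent on the second factor: plain juxtaposition would read as 35^2,
% so some explicit space or symbol is needed.
$3\ 5^2$        % control space, as in the answer above
$3\,5^2$        % thin space (an assumed alternative, for comparison)
$3\cdot 5^2$    % centre dot
$3\times 5^2$   % multiplication cross
\end{document}
```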

Another reason may be that 3D vectors are often introduced early, and have two multiplication operations: the dot product and the cross product. So one is forced to use two different symbols to avoid ambiguity. Of course, one could avoid that by using the tensor subscript approach, and how all that is handled has a fashion element in it. For the last few decades for example, there has been a campaign to move us towards Clifford or "geometric algebras" (where the cross product is frowned on and the wedge product is key).

Note also that $a\cdot b$ often does not represent ordinary multiplication. Of course $3\cdot5$ almost always does, but as one moves through undergraduate work into graduate work $a\cdot b$ is increasingly used to represent operations other than the ordinary multiplication (of integers, reals etc).

As @Kundor correctly points out, the OP's real question could be seen as why teach $5\times 6$ in the first place? I have never tried to teach anyone younger than about 9. But I am fairly sure that trying to use juxtaposition when arithmetic is first introduced would be a non-starter. So the question becomes why not start with $5\cdot6$, instead of moving to it years later?

That seems to me a mixture of history and psychology. I want to keep away from the history if possible, but the psychology does not surprise me. Making sensible changes to familiar things is hugely difficult when large numbers of people are involved, particularly when it is completely unclear to them how the change will benefit them. I clearly remember the UK's move from the old "pounds, shillings and pence" (with 12 old pence to the shilling, 20 shillings to the pound). It required a massive campaign by the government. In that case it was obvious that a simple 100 new pence to the pound would be much easier, but few people wanted to switch, having got used to the old currency.

Another example is the difficulty we have had in the UK moving from Fahrenheit to Celsius for temperature. All our weather forecasts are now in Celsius (or rather centigrade - the identical system with a different name), but it took years to get most people to accept it. The old system was bizarre (boiling point of water 212, freezing point 32), yet I believe it is still used in the USA!

Or take miles. The SI unit is km. But there seems no prospect of the UK changing all its road signs to km for the foreseeable future. Remember this is a country where we drive on the wrong side of the road. When I was commuting backwards and forwards to LA and picking up rental cars at LAX and my own car at LHR, the only way I could find to remember it, was that I had to drive so that I was as near the centre of the road as possible. Mercifully I never got the wrong kind of car.

So changing the status quo is tough. Time to make an obvious point: MSE is read in many countries, and practices vary widely, often even within countries. @Chieron's much upvoted comment under the question notes that some schools never use $3\times4$, but start with $3\cdot4$.

Similarly, I have tended to focus above on differences relevant to teenagers and undergraduates, but @BenC's answer makes the excellent and easily overlooked point about potential and actual confusion between the centre dot and the decimal point.

Again, @RobertSoupe (in his answer) makes the excellent point (which I managed to overlook entirely) about potential confusion between times $\times$ and the variable $x$ when children move on from learning tables to slightly more advanced maths. See also the comment by @user21820 below.

I would also draw attention to some comments by @snulty. Under the question he and @MauroALLEGRANZA note that Descartes used $x$ for unknown and juxtaposition for multiply (which shows how tricky historical discussion can be unless you are well briefed)! I also highly recommend snulty's answer. I am ill-qualified to comment on the truth, but it certainly sounds highly plausible.

[Image: René Descartes, after Frans Hals (Wikimedia)]

A final observation. One of the simultaneously delightful and frustrating aspects of the academic world is that diktats do not work. To persuade people to change their usage can take generations. Sometimes (as with the great Classical Statistics debacle) one has to wait more than a century to get important changes widely accepted. Notation is particularly tricky. New areas of maths are constantly emerging and people are constantly hijacking old symbols for new uses, so that at any moment notation often appears inconsistent across the whole field. It is hard to see what can be done to change that. So $a\times b$ can still mean ordinary multiplication, but sometimes it means vector product, even though there is no boldface or under- or over-lining to make clear that $a,b$ are vectors.

So context is always king.

I could not resist adding this (a facsimile of a page of Descartes (1596-1650) ):

-

People had been using juxtaposition for multiplication for a long, long time before TeX or LaTeX came along. The fact that `a\times b` is seven more keystrokes than `ab` cannot possibly be the reason. – David Richerby Jun 30 '16 at 11:41

-

@DavidRicherby perhaps an easier way would be simply to say that scientists and mathematicians have a habit of being inherently lazy, and thus go to great lengths to invent methods and notations to shorten things. This is ironic, and maybe even paradoxical, in that they have to do more work to invent the new notation in order to shorten the work, but that's just how things work in the world. – Xylius Jun 30 '16 at 11:52

-

In university maths, $a\cdot b$ often means an ordinary multiplication of two numbers $a$ and $b$. – Karlo Jun 30 '16 at 12:16

-

Note: juxtaposition is already used for multiplication in secondary school. – Kimball Jun 30 '16 at 13:33

-

This answer goes into the reasons for preferring juxtaposition, with dots when necessary, but then why teach everyone to use $\times$ in the first place? I think that's the heart of the question. – Nick Matteo Jun 30 '16 at 15:16

-

My take on why young children are taught with "$\times$" is because if you use "$\cdot$" you might well get children wondering why on earth "$2\cdot3$" is different from "$2.3$", or simply mixing them up due to not writing them nicely formatted, or better still because they actually write "$2\!\cdot\!3$" to mean the decimal, with the decimal point being in the middle! In fact, when I was young I wrote decimals that way, but I no longer remember who taught me that. Out of habit, I still write decimals that way... – user21820 Jul 01 '16 at 12:45

-

"laziness" is too pejorative. It's a virtue to make things easy to read, and in some contexts, the concision of "a.b" or "ab" is supremely readable, and hence is a better notation than "a x b". – Jonathan Hartley Jul 01 '16 at 15:49

-

@Typhon: Different education systems may do things differently. In mine, it's quite uncommon to see "·" used for multiplication in high-school, and if you write "2·3" you may be misunderstood. In university "×" shows up less and less, but is simply replaced by juxtaposition and not "·". – user21820 Jul 13 '17 at 03:53

There is also an ambiguity between a decimal fraction with a dot, as in $3.5^2$, and multiplication with a centre dot, as in $3\cdot5^2$, particularly if the latter doesn't have spacing around the dot to give context, as in $3\!\cdot\!5^2$.
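To show how much rides on that one dot, here is a small Python sketch that evaluates both readings of the expression. The variable names are mine, chosen only for illustration.

```python
# Reading 1: the dot is a decimal point, so the expression is "3.5 squared".
decimal_reading = 3.5 ** 2

# Reading 2: the dot is a multiplication sign, so it is "3 times (5 squared)".
product_reading = 3 * 5 ** 2

print(decimal_reading)  # 12.25
print(product_reading)  # 75
```

The two readings differ by a factor of more than six, so a misplaced or badly spaced dot is not a cosmetic problem.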

In fact some textbooks use a centre dot for decimal fractions, for example Nelkon and Parker's Advanced Level Physics (sixth edition published in the UK in 1987, at least, which uses $\times$ for multiplication).

-

And some people are being lazy and use `.` for multiplication. I've even had lectures in which we needed to decipher things like $3.4.10^5.y$. Horrible! – leftaroundabout Jun 30 '16 at 13:46

-

Thinking about my kids' (7 & 9) handwriting, the difference between decimal dots & multiplication dots would be nigh impossible to discern, making dot notation for multiplication in elementary ages a risky endeavor. – Ghillie Dhu Jun 30 '16 at 17:57

-

The reason for both usages is that the convention of using a lowered dot as the radix (decimal point) is not universal. In some countries, a centered dot is used as radix and lowered dot for multiplication. If you grew up in these countries, the notation would be natural, and our use seems strange. I'm guessing that the authors of your text are not American. – Paul Sinclair Jun 30 '16 at 23:05

-

@PaulSinclair Wikipedia confirms your claim. Moreover, in some countries the convention for the location of the radix/decimal point has changed over time. In the UK the convention was for the point to be vertically aligned centrally until fairly recently, as any old textbook will show. (I'm not sure when the switch occurred; checking 3 older books on my shelf, it was mid-aligned in a 1920s book and 1950s book but lowered in a 1970s book. I heard someone say that they were taught to mid-align back in the day, so it changed in living memory.) – Silverfish Jul 01 '16 at 00:33

-

@PaulSinclair Yes, it was published in the UK and the authors both taught in the UK. – Ben C Jul 01 '16 at 08:10

-

@Silverfish: Aha so now I know where whoever taught me got the centre decimal point notation from! – user21820 Jul 01 '16 at 12:49

This is primarily done to emphasize different multiplication operations in terms of vector and multidimensional calculus. In particular, this is to emphasize that the dot product $\cdot$ is mechanically different from the cross product $\times$, although in operations on objects of one dimension, they are virtually the same.

-

But why was the arithmetic cross product simply removed? It seems weird to teach it in low grade levels and then all of a sudden switch to dot for notation... – user64742 Jun 30 '16 at 04:21

-

@TheGreatDuck: It mainly does not switch to $\cdot$, as in $a\cdot b$, but to concatenation, as in $ab$. – André Nicolas Jun 30 '16 at 04:25

-

@AndréNicolas not for things like $5 \cdot 4$. Writing 54 would be very misleading. – user64742 Jun 30 '16 at 04:27

-

@TheGreatDuck: For arithmetic, certainly, though often one writes $(5)(4)$ even though it is longer. – André Nicolas Jun 30 '16 at 04:28

-

I don't buy this answer. In France we use $\wedge$ for the "cross" product, and yet children are still taught multiplication is $\times$ whereas in higher education it becomes just juxtaposition or $\cdot$. – Najib Idrissi Jun 30 '16 at 08:23

-

@NajibIdrissi Every nation has its own teaching history. I was taught that $\cdot$ and period are symbols for multiplication and dot product. Later when cross product was introduced to us, the $\times$ symbol was introduced as well. There is not a problem with decimal numbers - we are using decimal comma... – Crowley Jun 30 '16 at 10:14

-

@Crowley I think you missed my point. I'm saying I don't believe it's the explanation, since we have the "dot" notation in France for multiplication even though there is no chance of confusing $\times$ with the cross product. – Najib Idrissi Jun 30 '16 at 10:41

-

@NajibIdrissi I don't buy the answer either. I've never heard of the product convention you have mentioned. I think the question is "why does anyone use $\times$ for multiplication?" http://math.stackexchange.com/questions/1180692/times-as-symbol-for-multiplication-how-common-is-this – Crowley Jun 30 '16 at 11:10

-

@Heather What definition are you using for the cross product in one dimension? I can't think of any that make it the same as the dot product. – Oscar Cunningham Jun 30 '16 at 14:11

-

@AndréNicolas could you give some examples where somebody writes $(5)(4)$ to denote five times four? (Or, something equivalent to this.) – quid Jun 30 '16 at 15:06

-

@quid I did encounter such notation here on MSE, and at first I had a hard time deciphering the meaning of a single object being parenthesized. I suppose this is a regional thing. – Hagen von Eitzen Jan 17 '17 at 07:19

-

@HagenvonEitzen in the interim there was a thread on MESE on this. I still think that the comment to which I replied is misleading, in that the context is expressly a more advanced one; and a main issue I had with it was the 'often' and specifically for multiplying just two numbers. – quid Jan 17 '17 at 11:10

The lowercase letter $x$ and the multiplication cross $\times$ (\times in TeX, × in HTML) are very different symbols. One can be used to represent a variable, as you have already halfway surmised, but it shouldn't be used to denote any kind of multiplication, whereas the other can be used to denote multiplication but should not be used to represent a variable. In some contexts you will see $\otimes$ used for extra clarity.

The big problem here is that these two symbols that have different etymologies and different uses look so much alike, and nowhere was this problem felt more acutely than during the early history of computer programming languages in the time of ASCII.

$x \times y$ is clear enough, at least for those of us with good enough eyes, but the multiplication cross is not in the ASCII character set, and so x x y would be hellishly ambiguous. And so the asterisk was co-opted for the multiplication operator, thus $x \times y$ becomes x * y, something you still see in JavaScript and Mathematica. In C++ there are some operators that are actually words, but in general, in computer programming, the arithmetic operators are not alphanumeric symbols.
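As a small illustration of the ASCII point, the following Python sketch checks that the multiplication cross (Unicode U+00D7) lies outside ASCII while the asterisk lies inside it. The variable names are mine, and Python stands in here for "a typical programming language"; it is not one of the languages named above.

```python
import unicodedata

cross = "\u00d7"  # the multiplication cross, written via its Unicode escape
cross_name = unicodedata.name(cross)

print(cross_name)        # MULTIPLICATION SIGN
print(cross.isascii())   # False: not available in plain ASCII
print("*".isascii())     # True: the asterisk is an ASCII character

# The asterisk is what the language actually accepts as the operator.
x, y = 6, 7
product = x * y
print(product)           # 42
```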

EDIT: Doing some research after posting this answer, I came across a page from Northeastern University on math symbols. William Oughtred, a 17th-century mathematician, came up with a cross with vertical serifs as a multiplication symbol. The symbol was later criticized by the now more famous Leibniz, who wrote:

"I do not like (the cross) as a symbol for multiplication, as it is easily confounded with x; .... often I simply relate two quantities by an interposed dot and indicate multiplication by ZC.LM."

This reinforces my point about how $x$ and $\times$ have different origins but are confusingly similar in appearance.

And by the way, don't ever use $\Sigma$ (uppercase Sigma) as a cheap way to write E when you want to give something a Greek flavor. That Greek letter was chosen as the summation operator because it is an S sound.

-

Those last two sentences seem very random. Also, why did they ever use x conventionally as a variable if it could be ambiguous? – user64742 Jun 30 '16 at 05:19

-

Remember that hindsight is 20/20. As these things developed, it was not always easy to see that a particular choice could cause problems down the road. And I've been wanting to rant about My Big Fat GrSSk Wedding 2, which I refuse to see solely on account of its title. – Robert Soupe Jun 30 '16 at 05:23

-

The asterisk is used for multiplication in most imperative languages today. I can't think of one that doesn't. I'm not sure about functional languages, but the symbol is so entrenched now, I'd be more surprised if they deviate than if they don't. – jpmc26 Jun 30 '16 at 07:21

-

Re: your last bit, if memory serves, the symbol $\int$ was then chosen in complete analogy to $\sum$, both being ways to represent the "s" in "sum". (And a reader of very old English books will occasionally see the "s" in words rendered as $\int$.) – J. M. ain't a mathematician Jun 30 '16 at 08:02

-

@jpmc26 The programming language APL uses $\times$ for multiplication of two numbers. (I don't consider this an imperative language, but it is a language, allegedly still available, in which the asterisk has not replaced the $\times$ symbol.) – David K Jun 30 '16 at 13:05

-

@jpmc26 Functional languages too. Standard ML, OCaml, Haskell, Common Lisp and Scheme all use the asterisk. – Doval Jun 30 '16 at 14:26

I'm looking for sources (see edit), but I would imagine when teaching children to count, add, multiply, you start with integers and addition is symbolised like $1+1=2$. Then you try to teach them that multiplication is short for lots of addition, $3+3+3+3=4\times 3$ and $4+4+4=3\times 4$, and there's the obvious similarity between the symbols $\times$ and $+$.
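The "multiplication is short for lots of addition" idea can be sketched in a few lines of Python. The function name is hypothetical and the sketch only covers the whole-number case that a child would meet first.

```python
def multiply_by_repeated_addition(count: int, value: int) -> int:
    """Return count * value by adding `value` to a running total `count` times."""
    if count < 0:
        raise ValueError("count must be a non-negative whole number")
    total = 0
    for _ in range(count):
        total += value
    return total


print(multiply_by_repeated_addition(4, 3))  # 12, i.e. 3 + 3 + 3 + 3
print(multiply_by_repeated_addition(3, 4))  # 12, i.e. 4 + 4 + 4
```

Both calls give the same total even though the additions performed are different, which is the commutativity that $4\times 3 = 3\times 4$ expresses.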

At a later stage in school $3\cdot4 $ can look like $3.4$ as in $3\frac{4}{10}$, so I imagine this would be nice to avoid especially when you're also teaching kids to practice their handwriting, so they might not always put a dot in the exact place you tell them.

Then finally when you want to move onto more advanced things that $\cdot$ and $\times$ can stand for, even just algebra with a variable $x$, you might want to change to a better symbol.

I think the other answers take this point of view very well, so I won't mention anything about that.

Edit: On the Irish curriculum, at around third and fourth class, they do multiplication and decimals at roughly the same time. It says to develop an understanding of multiplication as repeated addition and division as repeated subtraction (obviously in whole number cases).

It's because . stands for any binary operation which might look like 'multiplication' in some particular setting, or might be a substitute for multiplication. Hence, it is more general in nature.

-

But ⊕ and ⊗ are more commonly used for generic binary operators. – Scott - Слава Україні Jul 01 '16 at 06:04

-

They're more unwieldy, so . is used more often and then a.b is also written as just ab – DpS Jul 01 '16 at 08:39

I believe the reason must be mostly pedagogical:

- As other answers mention, a kid learning about decimals may get confused between a product and a decimal.

- It may be easier for kids to think about $\times$ than $\cdot$, given that they already know the sum symbol and they are quite similar.

- Kids usually multiply only small sets of numbers, and they do it to learn the technique. Once the technique has been mastered, efficiency (and therefore practicality) is what matters most! Imagine having to write $\times$ every time you multiply something!

For the historical part, here are two references which, put together, confirm that Descartes was the one who first used $x$ as a variable and used the juxtaposition convention for multiplication: about convention for unknowns and about multiplicative notation. In fact, both of them cite Cajori as their main reference.

-

Actually, precisely the difference between addition and multiplication symbols may be helpful. In Germany multiplication is dot only, and division is a colon (i.e. two dots). Plus and minus are the same as everywhere. This allows one to state the arithmetic precedence rules with only three words: "Punkt vor Strich", "dot before line". Also, seeing how for some students with ugly handwriting an x may well look like a + and vice versa, I hate to imagine what their formulas would look like if they were using a similar symbol also for multiplication … – celtschk Jan 17 '17 at 07:29

$\times$ is clearly visible.

$\cdot$ is more discreet and is often left implicit.

With maturity, we become able to supply the operator where required.

The x might be used for younger children instead of the ⋅ because they might confuse it with the . in decimals especially if the equation was handwritten. The x on the other hand can't be confused with a different symbol since they are probably not doing algebra. Just a thought. (just realized other people said this, sorry)

-

Please, don't add answers that cover things already said in other posts. – egreg Feb 10 '18 at 23:13