I originally understood limits to be the places where a function runs off towards $\pm\infty$ as $x$ approaches some specific value, or where it runs towards (but never touches) some specific value (like $0$) as $x$ approaches infinity, making that value impossible to reach (a limit the function can't cross, in a Zeno's-paradox-like way).

Now that I'm beginning to actually study calculus, I'm seeing that limits are somehow broader than that. Specifically, I now see that limits are always stated in relation to some $x$ value being approached (as indicated by the conventional notation $\lim_{x\to p} f(x)$). But this makes it seem to me like you can pick any value (any $p$) you want, and the limit is simply whatever value the function approaches as $x$ approaches the $p$ you picked.

- Wouldn't that mean functions have an infinite number of limits? (You can find an infinite number of points on a line/curve, after all.)

- If so, what's so limiting about "limits" then?

- Also, wouldn't this make limits the most stupidly obvious things? For example, $f(x)=x^2$ will obviously approach $4$ as you pick $x$ values closer and closer to $2$ ($1.9, 1.99, 1.999, 1.9999, 1.99999$, etc.).

- If functions don't have an infinite number of limits, then how do you recognize which values of $x$ make sense to approach?
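To make the $x^2$ example above concrete, here is a minimal Python sketch of what I mean by "approaches $4$". The function name `f`, the list `xs`, and the list `gaps` are just my own illustrative choices. It only evaluates $f(x)=x^2$ at the sample points $1.9, 1.99, \dots$ and measures how far each result is from $4$.

```python
def f(x):
    """The example function f(x) = x^2."""
    return x * x

# Sample x values creeping up on 2 from below: 1.9, 1.99, 1.999, ...
xs = [2 - 10 ** (-k) for k in range(1, 6)]

# Distance of f(x) from 4 at each sample point.
gaps = [abs(f(x) - 4) for x in xs]

for x, gap in zip(xs, gaps):
    print(f"x = {x:.5f}  f(x) = {f(x):.10f}  |f(x) - 4| = {gap:.10f}")
```

The gap shrinks at every step, which is all I mean by "obviously approaches $4$". This is only a numerical illustration at a handful of points, not a proof of anything.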

Obviously, preconceived notions can screw with actually learning how a thing works because it can frame the information you're trying to integrate within a meaningless perspective, but figuring out how to shed those preconceived notions can be hard when you don't understand where you've gone wrong in the first place. ...oh, god, someone help me. I'm stuck in a loop.

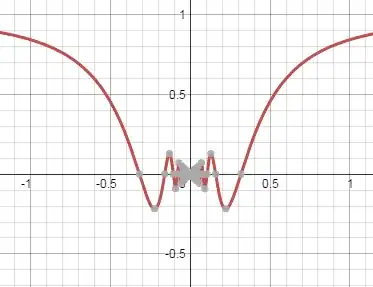

$\sin(1/x)$

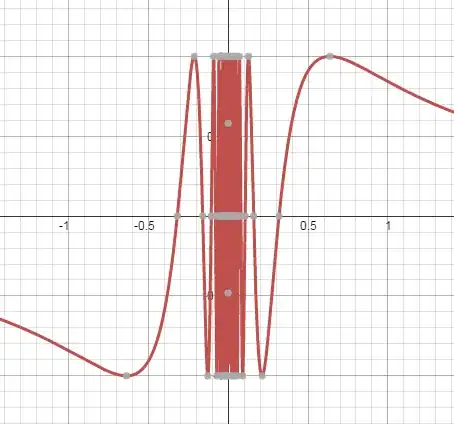

$\sin(1/x)$