I agree with @Farewell's answer above.

Another aspect worth considering, in my opinion, is the inverse function theorem.

If a function with "derivative" $\pm \infty$ at a point has an inverse, then in many cases the derivative of the inverse at the corresponding point will be $0$. (Basically, a vertical tangent line becomes a horizontal one when we switch the dependent and independent variables.)

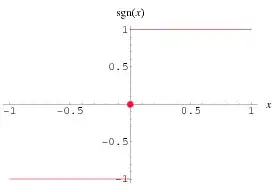
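To make the vertical/horizontal swap concrete, here is a small Python sketch using $f(x)=x^3$ and its inverse $x^{1/3}$ (my own choice of example). The helper names `cbrt` and `diff_quotient` are hypothetical; `cbrt` is defined by hand because `math.cbrt` only exists in newer Python versions. The forward difference quotients of $x^3$ at $0$ equal $h^2$ and shrink to $0$, while those of the cube root equal $h^{-2/3}$ and grow without bound, mirroring the rule $(f^{-1})'(f(a)) = 1/f'(a)$, which formally gives $1/0$ here.

```python
import math


def cbrt(x: float) -> float:
    """Real cube root, valid for negative x as well."""
    return math.copysign(abs(x) ** (1.0 / 3.0), x)


def diff_quotient(f, a: float, h: float) -> float:
    """Forward difference quotient (f(a + h) - f(a)) / h."""
    return (f(a + h) - f(a)) / h


def cube(x: float) -> float:
    return x ** 3


for h in (1e-1, 1e-2, 1e-3, 1e-4):
    # x**3 is flat at 0: its quotient is h**2, which tends to 0.
    # Its inverse cbrt is vertical at 0: its quotient is h**(-2/3), which blows up.
    print(f"h={h:g}  cube: {diff_quotient(cube, 0.0, h):.3g}  "
          f"cbrt: {diff_quotient(cbrt, 0.0, h):.3g}")
```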

First, let's consider some geometric problems associated with a one-dimensional function having "derivative" $\pm \infty$ at a point. At that point, the associated tangent line would clearly be vertical.

But therein lies a major issue: how can one consistently define the slope of a vertical line? One can't. Both $\infty$ and $-\infty$ are equally reasonable choices, and this non-uniqueness problem doesn't arise for any other kind of tangent line.

Sure, in the case of $x^{1/3}$ one could argue that "by continuity" the slope at $0$ should be defined to be $+\infty$. But what about $\sqrt{x}$ and $-\sqrt{x}$? By continuity, one of them would have derivative $+\infty$ at $0$ and the other derivative $-\infty$ at $0$, yet both correspond to the same tangent line, $x=0$, of the curve $x=y^2$.
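One way to see this numerically is the Python sketch below (the helper name `secant_direction` is hypothetical). It computes unit vectors along the secant lines from the origin to $(h, f(h))$ for the two branches $y=\sqrt{x}$ and $y=-\sqrt{x}$ of the curve $x=y^2$.

```python
import math


def secant_direction(f, h: float) -> tuple[float, float]:
    """Unit vector along the secant from (0, f(0)) to (h, f(h))."""
    dx, dy = h, f(h) - f(0.0)
    norm = math.hypot(dx, dy)
    return dx / norm, dy / norm


def upper(x: float) -> float:
    """Upper branch of x = y**2."""
    return math.sqrt(x)


def lower(x: float) -> float:
    """Lower branch of x = y**2."""
    return -math.sqrt(x)


for h in (1e-2, 1e-4, 1e-6):
    print(h, secant_direction(upper, h), secant_direction(lower, h))
```

As $h \to 0^+$ the directions approach $(0,1)$ and $(0,-1)$. These are opposite unit vectors spanning the same vertical line $x=0$, while the corresponding slopes $dy/dx$ tend to $+\infty$ and $-\infty$ respectively. The line is well defined; a single signed slope is not.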

In more than one dimension, the geometric problems with trying to define an "infinite derivative" are even worse. Specifically, an "infinite derivative" would correspond to the non-existent inverse of a singular derivative matrix, and there are literally uncountably many ways in which a matrix can be singular (i.e., fail to be invertible, equivalently have determinant zero). So any attempt to find a reasonably small number of "pseudoinverses" covering all singular matrices would not be tractable.
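As a small illustration of the "uncountably many ways" claim in the $2\times 2$ case, the Python sketch below builds, for each angle $t$, a singular matrix whose kernel is the line spanned by $(\cos t, \sin t)$. Distinct $t \in [0,\pi)$ give distinct kernels, hence genuinely different singular matrices. The family and the helper names (`singular_with_kernel`, `apply`) are my own hypothetical choices, not a standard construction from the answer.

```python
import math

Matrix = tuple[tuple[float, float], tuple[float, float]]


def det(m: Matrix) -> float:
    """Determinant of a 2x2 matrix."""
    (a, b), (c, d) = m
    return a * d - b * c


def apply(m: Matrix, v: tuple[float, float]) -> tuple[float, float]:
    """Matrix-vector product m @ v."""
    (a, b), (c, d) = m
    return (a * v[0] + b * v[1], c * v[0] + d * v[1])


def singular_with_kernel(t: float) -> Matrix:
    """Singular matrix whose kernel is the line spanned by (cos t, sin t)."""
    return ((-math.sin(t), math.cos(t)), (0.0, 0.0))


for t in (0.0, 0.5, 1.0, 2.0):
    m = singular_with_kernel(t)
    kernel_vector = (math.cos(t), math.sin(t))
    print(t, det(m), apply(m, kernel_vector))
```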

(Moreover, the set of invertible matrices has a nice property called "openness", similar to the idea of an open interval, which the set of non-invertible matrices does not have. Think of it this way: the set of real numbers that have well-defined reciprocals is $(-\infty,0) \cup (0,\infty)$, a union of two open intervals, whereas the set of real numbers without a well-defined reciprocal, $\{0\}$, is a single point (and points are "closed"). A similar situation holds in higher dimensions, since a matrix is invertible exactly when $\det \neq 0$ and the determinant is continuous.)
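A minimal numerical sketch of this openness, assuming $2\times 2$ matrices and the hypothetical helper `perturb` (which adds $\varepsilon I$): a singular matrix becomes invertible after an arbitrarily small perturbation, so the singular set contains no small "ball" around any of its points, while a small perturbation of an invertible matrix keeps its determinant away from zero.

```python
def det(m):
    """Determinant of a 2x2 matrix given as nested tuples."""
    (a, b), (c, d) = m
    return a * d - b * c


def perturb(m, eps):
    """Return m + eps * I."""
    (a, b), (c, d) = m
    return ((a + eps, b), (c, d + eps))


singular = ((1.0, 0.0), (0.0, 0.0))     # det = 0
invertible = ((2.0, 1.0), (1.0, 1.0))   # det = 1

for eps in (1e-1, 1e-3, 1e-6):
    # det(singular + eps*I) = eps * (1 + eps), nonzero for every small eps > 0.
    # det(invertible + eps*I) = 1 + 3*eps + eps**2, which stays close to 1.
    print(eps, det(perturb(singular, eps)), det(perturb(invertible, eps)))
```

This is the matrix analogue of the fact that $0$ can be nudged to a nonzero $\varepsilon$ with a reciprocal, while a nonzero number keeps its reciprocal under small nudges.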

In the proof of the inverse function theorem (in any number of dimensions, including $n=1$), we rely on the derivative being "non-zero" (in a generalized sense, i.e. an invertible matrix) in order to show that the function has a local inverse near that point.

The proof doesn't go through when the derivative is "zero", because we can't define a unique value for the derivative of the local inverse at that point (again, this is true even for $n=1$, as I mentioned above).
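To show concretely where the proof needs a non-zero derivative, here is a one-dimensional Python sketch of the fixed-point map $x \mapsto x + (y - f(x))/f'(a)$, which is the contraction used in a common proof of the theorem (in $n$ dimensions, $1/f'(a)$ becomes the inverse matrix $f'(a)^{-1}$). The function name `local_inverse` and its tolerance parameters are hypothetical; this is a sketch, not a robust root finder.

```python
import math
from typing import Callable


def local_inverse(f: Callable[[float], float], df_a: float, a: float,
                  y: float, tol: float = 1e-12, max_iter: int = 200) -> float:
    """Solve f(x) = y for x near a by iterating x <- x + (y - f(x)) / f'(a).

    The update divides by f'(a), so the construction is undefined when
    f'(a) = 0, which is exactly where the inverse function theorem stops.
    """
    if df_a == 0:
        raise ValueError("f'(a) = 0: the contraction map is undefined")
    x = a
    for _ in range(max_iter):
        x_next = x + (y - f(x)) / df_a
        if abs(x_next - x) < tol:
            return x_next
        x = x_next
    raise RuntimeError("no convergence; y may be too far from f(a)")
```

For instance, `local_inverse(math.exp, 1.0, 0.0, 1.1)` approximates $\ln 1.1$, whereas for $f(x)=x^3$ at $a=0$ the call is rejected because $f'(0)=0$.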

I'm not sure this is entirely satisfying, though, because the aforementioned theorems could simply be formulated with the additional proviso that the derivative is finite.

Perhaps there is some "deeper" reason we want the derivative to be finite (or maybe it's something not-so-deep that I'm not seeing). I have placed a bounty on this question.

– MathematicsStudent1122 Jun 02 '16 at 02:42

In the theory of divergent integrals (https://exnumbers.miraheze.org/wiki/Main_Page), the set of differentiable functions is expanded.

Specifically, in your example, the derivative of $f(x)=x^{1/3}$ at $x=0$ would be equal to

$$f'(0)=\frac{\omega _+^{5/3}-\omega _-^{5/3}}{3 \Gamma \left(\frac{8}{3}\right)}$$

where $\omega_+=\sum_{0}^\infty 1=\int_{-1/2}^\infty dx$ and $\omega_-=\omega_+-1=\sum_1^\infty 1=\int_{1/2}^\infty dx$ are two divergent integrals/series.

– Anixx Jul 21 '21 at 05:54