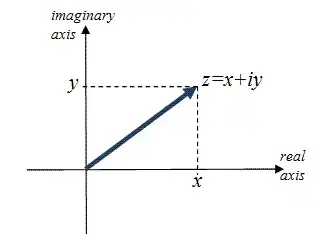

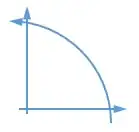

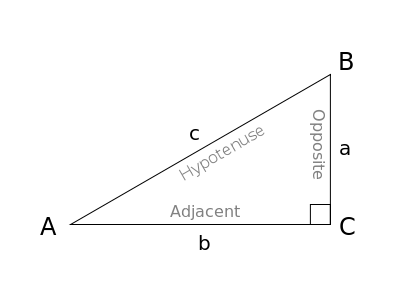

I guess that this relates mainly to the way we identify our coordinate axes. The first axis is the left-to-right $x$ axis. It follows our reading directions, so that consecutive values of a function plot can be read off in reading order. Then we have the $y$ axis perpendicular to that, in such a way that more means up. This is the secondary axis, and order matters here. The first quadrant is the region where both coordinates are positive (or non-negative, if you prefer), and it makes sense to associate that with $[0°,90°]$ as the canonical range of angles for a single quadrant. And in this range, the first endpoint $0°$ is associated with the first axis in $x$ direction, while the second endpoint $90°$ is associated with the second axis in $y$ direction.

There are of course conventions which differ from this. You mentioned the clock, which has $0°$ at the top and moves clockwise. As Yves Daoust (and a bit later Wumpus Q. Wumbley) pointed out in a comment, the motivation here was likely to mimic sundials, which (in the northern hemisphere) go clockwise as well. A wall-mounted sundial would likely have noon at the bottom, though, so that only explains the choice of zero-point if you consider midnight as the point of reference.

The main benefit of the clock-like scheme is that the special starting direction is vertical, which better fits our day-to-day experience, where left and right depend a lot on where we stand, while up is a more universal concept. And this special up direction now serves as an axis of symmetry: changing the sign of the angle exchanges left and right, which is a more common thing than exchanging down and up, at least for someone walking on the ground. Apparently reading was more of an influence than walking, though.
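To make the symmetry claim concrete, here is a minimal sketch in Python. The function names `clock_direction` and `math_to_clock` are my own, chosen for illustration, and I assume the usual picture with $x$ to the right and $y$ up. A clock-style angle $b$ (zero at the top, growing clockwise) points in direction $(\sin b, \cos b)$, so negating $b$ flips only the $x$ component.

```python
import math

def clock_direction(bearing_deg):
    """Unit vector (x, y) for a clock-style angle: 0 degrees is up, angles grow clockwise.

    Assumes x points right and y points up.
    """
    b = math.radians(bearing_deg)
    return (math.sin(b), math.cos(b))

def math_to_clock(theta_deg):
    """Convert a mathematical angle (0 degrees is right, counterclockwise)
    to a clock-style angle (0 degrees is up, clockwise)."""
    return (90 - theta_deg) % 360

# Negating the clock angle mirrors left and right but keeps up and down.
x_pos, y_pos = clock_direction(30)
x_neg, y_neg = clock_direction(-30)
print(x_pos, y_pos)
print(x_neg, y_neg)
```

With the mathematical convention the same sign change would instead mirror up and down, since the direction there is $(\cos\theta, \sin\theta)$.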

There are also areas of computer science where $x$ points to the right but $y$ points downward. This provides a better match for our reading directions: characters in a row go from left to right, but rows on a page go from top to bottom. In such a setup, angles would again be measured clockwise, even though the zero direction remains to the right.
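A small sketch of why the orientation flips, assuming nothing more than the standard rotation formula (the helper names `rotate` and `where_on_page` are hypothetical, made up for this illustration). The arithmetic is identical in both setups; only the interpretation of a positive $y$ changes.

```python
import math

def rotate(x, y, angle_deg):
    """Rotate (x, y) by angle_deg using the standard rotation formula."""
    a = math.radians(angle_deg)
    return (x * math.cos(a) - y * math.sin(a),
            x * math.sin(a) + y * math.cos(a))

def where_on_page(y, y_down):
    """Say whether a positive or negative y ends up above or below the origin."""
    if abs(y) < 1e-12:
        return "level"
    above = (y > 0) != y_down  # positive y is above only if y points up
    return "above" if above else "below"

# Rotate the zero direction (pointing right) by +90 degrees.
x, y = rotate(1.0, 0.0, 90)

# Same numbers, two readings:
print(where_on_page(y, y_down=False))  # y up: right turns to up, counterclockwise
print(where_on_page(y, y_down=True))   # y down: right turns to down, clockwise
```

So a positive angle that looks counterclockwise on graph paper looks clockwise on a screen whose $y$ axis points down, without any change to the formulas.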

In the end, there is nothing to make one of these conventions more correct than the others, but mathematicians had to settle on one of them to avoid eternal confusion (as still arises with people using one of the other conventions), and at that time the reasons for the first choice apparently prevailed. It might have something to do with our language: if instead of “the value of $f$ at position $x$ is $y$” we were used to saying “we obtain $y$ as the value of $f$ at $x$”, then we might have considered the value axis the first one and the parameter axis the second, and everything might have worked out differently. (Since most programming languages today use notation like y = f(x), we might be getting there.) Or if the people inventing function plots had been more accustomed to reading tabular data from top to bottom, instead of text from left to right, we might have had parameters going in that direction. We'll never know.